Posted by Kostas Kollias and Sreenivas Gollapudi, Research Scientists, Geo Algorithms Team, Google Research

Mapping algorithms used for navigation often rely on Dijkstra’s algorithm, a fundamental textbook solution for finding shortest paths in graphs. Dijkstra’s algorithm is simple and elegant — rather than considering all possible routes (an exponential number) it iteratively improves an initial solution, and works in polynomial time. The original algorithm and practical extensions of it (such as the A* algorithm) are used millions of times per day for routing vehicles on the global road network. However, due to the fact that most vehicles are gas-powered, these algorithms ignore refueling considerations because a) gas stations are usually available everywhere at the cost of a small detour, and b) the time needed to refuel is typically only a few minutes and is negligible compared to the total travel time.

This situation is different for electric vehicles (EVs). First, EV charging stations are not as commonly available as gas stations, which can cause range anxiety, the fear that the car will run out of power before reaching a charging station. This concern is common enough that it is considered one of the barriers to the widespread adoption of EVs. Second, charging an EV’s battery is a more decision-demanding task, because the charging time can be a significant fraction of the total travel time and can vary widely by station, vehicle model, and battery level. In addition, the charging time is non-linear — e.g., it takes longer to charge a battery from 90% to 100% than from 20% to 30%.

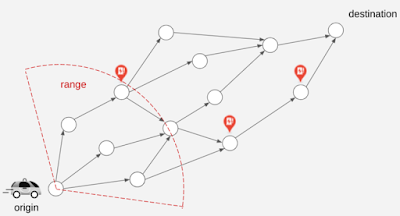

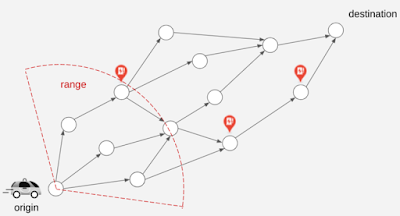

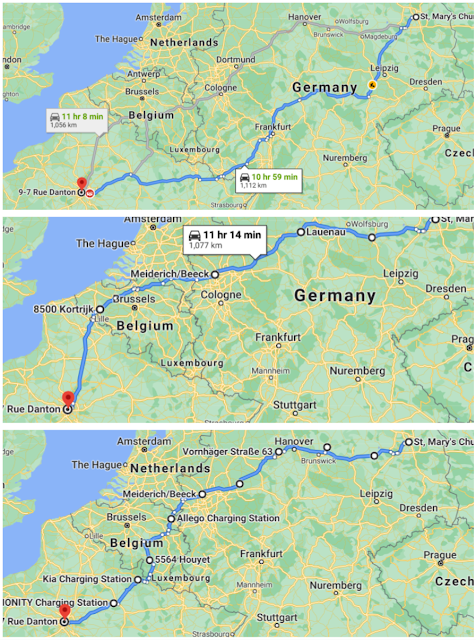

|

| The EV can only travel a distance up to the illustrated range before needing to recharge. Different roads and different stations have different time costs. The goal is to optimize for the total trip time. |

Today, we present a new approach for routing of EVs integrated into the latest release of Google Maps built into your car for participating EVs that reduces range anxiety by integrating recharging stations into the navigational route. Based on the battery level and the destination, Maps will recommend the charging stops and the corresponding charging levels that will minimize the total duration of the trip. To accomplish this we engineered a highly scalable solution for recommending efficient routes through charging stations, which optimizes the sum of the driving time and the charging time together.

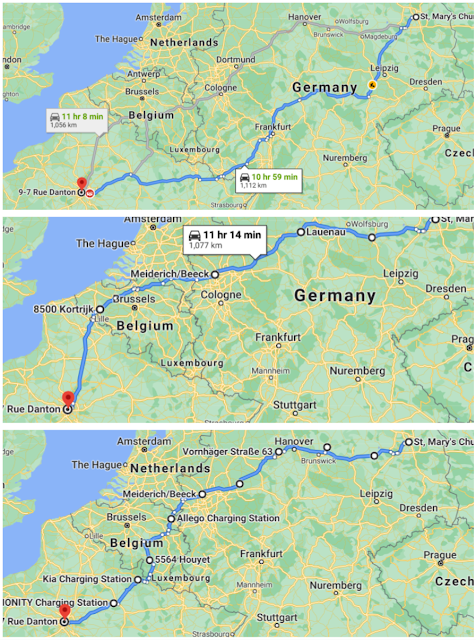

|

| The fastest route from Berlin to Paris for a gas fueled car is shown in the top figure. The middle figure shows the optimal route for a 400 km range EV (travel time indicated – charging time excluded), where the larger white circles along the route indicate charging stops. The bottom figure shows the optimal route for a 200 km range EV. |

Routing Through Charging Stations

A fundamental constraint on route selection is that the distance between recharging stops cannot be higher than what the vehicle can reach on a full charge. Consequently, the route selection model emphasizes the graph of charging stations, as opposed to the graph of road segments of the road network, where each charging station is a node and each trip between charging stations is an edge. Taking into consideration the various characteristics of each EV (such as the weight, maximum battery level, plug type, etc.) the algorithm identifies which of the edges are feasible for the EV under consideration and which are not. Once the routing request comes in, Maps EV routing augments the feasible graph with two new nodes, the origin and the destination, and with multiple new (feasible) edges that outline the potential trips from the origin to its nearby charging stations and to the destination from each of its nearby charging stations.

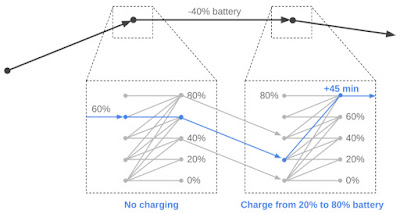

Routing using Dijkstra’s algorithm or A* on this graph is sufficient to give a feasible solution that optimizes for the travel time for drivers that do not care at all about the charging time, (i.e., drivers who always fully charge their batteries at each charging station). However, such algorithms are not sufficient to account for charging times. In this case, the algorithm constructs a new graph by replicating each charging station node multiple times. Half of the copies correspond to entering the station with a partially charged battery, with a charge, x, ranging from 0%-100%. The other half correspond to exiting the station with a fractional charge, y (again from 0%-100%). We add an edge from the entry node at the charge x to the exit node at charge y (constrained by y > x), with a corresponding charging time to get from x to y. When the trip from Station A to Station B spends some fraction (z) of the battery charge, we introduce an edge between every exit node of Station A to the corresponding entry node of Station B (at charge x–z). After performing this transformation, using Dijkstra or A* recovers the solution.

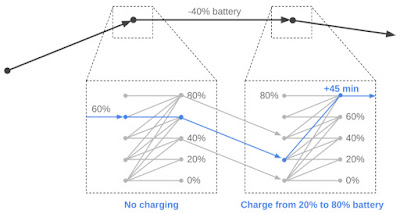

|

| An example of our node/edge replication. In this instance the algorithm opts to pass through the first station without charging and charges at the second station from 20% to 80% battery. |

Graph Sparsification

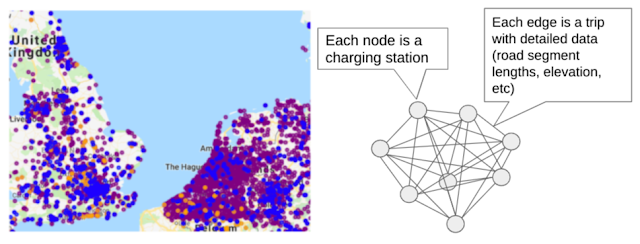

To perform the above operations while addressing range anxiety with confidence, the algorithm must compute the battery consumption of each trip between stations with good precision. For this reason, Maps maintains detailed information about the road characteristics along the trip between any two stations (e.g., the length, elevation, and slope, for each segment of the trip), taking into consideration the properties of each type of EV.

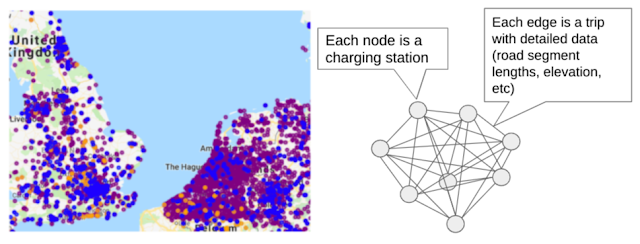

Due to the volume of information required for each segment, maintaining a large number of edges can become a memory intensive task. While this is not a problem for areas where EV charging stations are sparse, there exist locations in the world (such as Northern Europe) where the density of stations is very high. In such locations, adding an edge for every pair of stations between which an EV can travel quickly grows to billions of possible edges.

|

| The figure on the left illustrates the high density of charging stations in Northern Europe. Different colors correspond to different plug types. The figure on the right illustrates why the routing graph scales up very quickly in size as the density of stations increases. When there are many stations within range of each other, the induced routing graph is a complete graph that stores detailed information for each edge. |

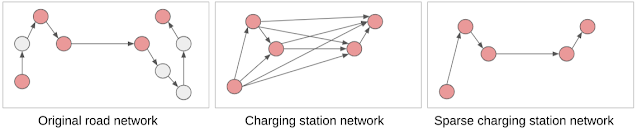

However, this high density implies that a trip between two stations that are relatively far apart will undoubtedly pass through multiple other stations. In this case, maintaining information about the long edge is redundant, making it possible to simply add the smaller edges (spanners) in the graph, resulting in sparser, more computationally feasible, graphs.

The spanner construction algorithm is a direct generalization of the greedy geometric spanner. The trips between charging stations are sorted from fastest to slowest and are processed in that order. For each trip between points a and b, the algorithm examines whether smaller subtrips already included in the spanner subsume the direct trip. To do so it compares the trip time and battery consumption that can be achieved using subtrips already in the spanner, against the same quantities for the direct a–b route. If they are found to be within a tiny error threshold, the direct trip from a to b is not added to the spanner, otherwise it is. Applying this sparsification algorithm has a notable impact and allows the graph to be served efficiently in responding to users’ routing requests.

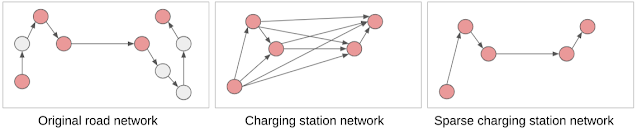

|

| On the left is the original road network (EV stations in light red). The station graph in the middle has edges for all feasible trips between stations. The sparse graph on the right maintains the distances with much fewer edges. |

Summary

In this work we engineer a scalable solution for routing EVs on long trips to include access to charging stations through the use of graph sparsification and novel framing of standard routing algorithms. We are excited to put algorithmic ideas and techniques in the hands of Maps users and look forward to serving stress-free routes for EV drivers across the globe!

Acknowledgements

We thank our collaborators Dixie Wang, Xin Wei Chow, Navin Gunatillaka, Stephen Broadfoot, Alex Donaldson, and Ivan Kuznetsov.

The project, which runs on a NVIDIA Jetson Nano 2GB Developer Kit, monitors the eyes of the user and voices a prompt when their blink rate is less than the recommended rate of 10 blinks per minute.

The project, which runs on a NVIDIA Jetson Nano 2GB Developer Kit, monitors the eyes of the user and voices a prompt when their blink rate is less than the recommended rate of 10 blinks per minute.  NVIDIA Omniverse is bringing the new standard in real-time graphics for developers. Check out some of the resources on the NVIDIA On-Demand catalog to learn more tips and tricks for developing in Omniverse.

NVIDIA Omniverse is bringing the new standard in real-time graphics for developers. Check out some of the resources on the NVIDIA On-Demand catalog to learn more tips and tricks for developing in Omniverse.

Nsight Graphics 2021.1 is available to download – check out this article to see what’s new.

Nsight Graphics 2021.1 is available to download – check out this article to see what’s new.