One of the most important aspects in machine learning is hyperparameter optimization, as finding the right hyperparameters for a machine learning task can make or break a model’s performance. Internally, we regularly use Google Vizier as the default platform for hyperparameter optimization. Throughout its deployment over the last 5 years, Google Vizier has been used more than 10 million times, over a vast class of applications, including machine learning applications from vision, reinforcement learning, and language but also scientific applications such as protein discovery and hardware acceleration. As Google Vizier is able to keep track of use patterns in its database, such data, usually consisting of optimization trajectories termed studies, contain very valuable prior information on realistic hyperparameter tuning objectives, and are thus highly attractive for developing better algorithms.

While there have been many previous methods for meta-learning over such data, such methods share one major common drawback: their meta-learning procedures depend heavily on numerical constraints such as the number of hyperparameters and their value ranges, and thus require all tasks to use the exact same total hyperparameter search space (i.e., tuning specifications). Additional textual information in the study, such as its description and parameter names, are also rarely used, yet can hold meaningful information about the type of task being optimized. Such a drawback becomes more exacerbated for larger datasets, which often contain significant amounts of such meaningful information.

Today in “Towards Learning Universal Hyperparameter Optimizers with Transformers”, we are excited to introduce the OptFormer, one of the first Transformer-based frameworks for hyperparameter tuning, learned from large-scale optimization data using flexible text-based representations. While numerous works have previously demonstrated the Transformer’s strong abilities across various domains, few have touched on its optimization-based capabilities, especially over text space. Our core findings demonstrate for the first time some intriguing algorithmic abilities of Transformers: 1) a single Transformer network is capable of imitating highly complex behaviors from multiple algorithms over long horizons; 2) the network is further capable of predicting objective values very accurately, in many cases surpassing Gaussian Processes, which are commonly used in algorithms such as Bayesian Optimization.

Approach: Representing Studies as Tokens

Rather than only using numerical data as common with previous methods, our novel approach instead utilizes concepts from natural language and represents all of the study data as a sequence of tokens, including textual information from initial metadata. In the animation below, this includes “CIFAR10”, “learning rate”, “optimizer type”, and “Accuracy”, which informs the OptFormer of an image classification task. The OptFormer then generates new hyperparameters to try on the task, predicts the task accuracy, and finally receives the true accuracy, which will be used to generate the next round’s hyperparameters. Using the T5X codebase, the OptFormer is trained in a typical encoder-decoder fashion using standard generative pretraining over a wide range of hyperparameter optimization objectives, including real world data collected by Google Vizier, as well as public hyperparameter (HPO-B) and blackbox optimization benchmarks (BBOB).

Imitating Policies

As the OptFormer is trained over optimization trajectories by various algorithms, it may now accurately imitate such algorithms simultaneously. By providing a text-based prompt in the metadata for the designated algorithm (e.g. “Regularized Evolution”), the OptFormer will imitate the algorithm’s behavior.

|

| Over an unseen test function, the OptFormer produces nearly identical optimization curves as the original algorithm. Mean and standard deviation error bars are shown. |

Predicting Objective Values

In addition, the OptFormer may now predict the objective value being optimized (e.g. accuracy) and provide uncertainty estimates. We compared the OptFormer’s prediction with a standard Gaussian Process and found that the OptFormer was able to make significantly more accurate predictions. This can be seen below qualitatively, where the OptFormer’s calibration curve closely follows the ideal diagonal line in a goodness-of-fit test, and quantitatively through standard aggregate metrics such as log predictive density.

|

| Left: Rosenblatt Goodness-of-Fit. Closer diagonal fit is better. Right: Log Predictive Density. Higher is better. |

Combining Both: Model-based Optimization

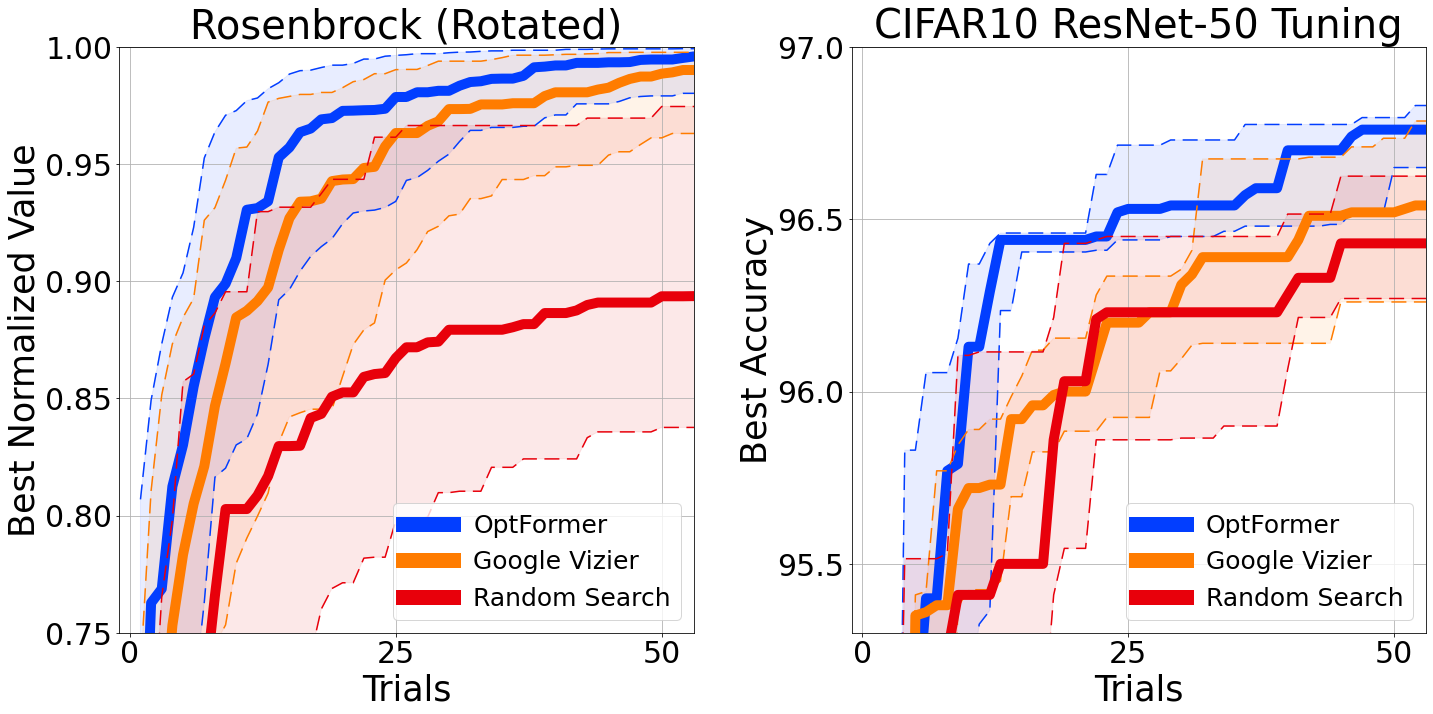

We may now use the OptFormer’s function prediction capability to better guide our imitated policy, similar to techniques found in Bayesian Optimization. Using Thompson Sampling, we may rank our imitated policy’s suggestions and only select the best according to the function predictor. This produces an augmented policy capable of outperforming our industry-grade Bayesian Optimization algorithm in Google Vizier when optimizing classic synthetic benchmark objectives and tuning the learning rate hyperparameters of a standard CIFAR-10 training pipeline.

|

| Left: Best-so-far optimization curve over a classic Rosenbrock function. Right: Best-so-far optimization curve over hyperparameters for training a ResNet-50 on CIFAR-10 via init2winit. Both cases use 10 seeds per curve, and error bars at 25th and 75th percentiles. |

Conclusion

Throughout this work, we discovered some useful and previously unknown optimization capabilities of the Transformer. In the future, we hope to pave the way for a universal hyperparameter and blackbox optimization interface to use both numerical and textual data to facilitate optimization over complex search spaces, and integrate the OptFormer with the rest of the Transformer ecosystem (e.g. language, vision, code) by leveraging Google’s vast collection of offline AutoML data.

Acknowledgements

The following members of DeepMind and the Google Research Brain Team conducted this research: Yutian Chen, Xingyou Song, Chansoo Lee, Zi Wang, Qiuyi Zhang, David Dohan, Kazuya Kawakami, Greg Kochanski, Arnaud Doucet, Marc’aurelio Ranzato, Sagi Perel, and Nando de Freitas.

We would like to also thank Chris Dyer, Luke Metz, Kevin Murphy, Yannis Assael, Frank Hutter, and Esteban Real for providing valuable feedback, and further thank Sebastian Pineda Arango, Christof Angermueller, and Zachary Nado for technical discussions on benchmarks. In addition, we thank Daniel Golovin, Daiyi Peng, Yingjie Miao, Jack Parker-Holder, Jie Tan, Lucio Dery, and Aleksandra Faust for multiple useful conversations.

Finally, we thank Tom Small for designing the animation for this post.