Mapping the wiring and firing activity of the human brain is fundamental to deciphering how we think (how we sense the world, learn, decide, remember, and create) and what can go wrong in brain disease or dysfunction. Recent efforts have delivered publicly available brain maps (high-resolution 3D maps of brain cells and their connectivity) at unprecedented quality and scale, such as H01, a 1.4 petabyte nanometer-scale digital reconstruction of a sample of human brain tissue from Harvard / Google, and the cubic millimeter mouse cortex dataset from our colleagues at the MICrONS consortium.

Interpreting brain maps at this scale requires multiple layers of analysis, including the identification of synaptic connections, cellular subcompartments, and cell types. Machine learning and computer vision have played a central role in enabling these analyses, but deploying such systems is still laborious: it requires hours of manual ground truth labeling by expert annotators and significant computational resources. Moreover, some important tasks, such as identifying the cell type from only a small fragment of axon or dendrite, can be challenging even for human experts and have not yet been effectively automated.

Today, in “Multi-Layered Maps of Neuropil with Segmentation-Guided Contrastive Learning”, we are announcing Segmentation-Guided Contrastive Learning of Representations (SegCLR), a method for training rich, generic representations of cellular morphology (the cell’s shape) and ultrastructure (the cell’s internal structure) without laborious manual effort. SegCLR produces compact vector representations (i.e., embeddings) that are applicable across diverse downstream tasks (e.g., local classification of cellular subcompartments, unsupervised clustering), and are even able to identify cell types from only small fragments of a cell. We trained SegCLR on both the H01 human cortex dataset and the MICrONS mouse cortex dataset, and we are releasing the resulting embedding vectors, about 8 billion in total, for researchers to explore.

| From brain cells segmented out of a 3D block of tissue, SegCLR embeddings capture cellular morphology and ultrastructure and can be used to distinguish cellular subcompartments (e.g., dendritic spine versus dendrite shaft) or cell types (e.g., pyramidal versus microglia cell). |

Representing Cellular Morphology and Ultrastructure

SegCLR builds on recent advances in self-supervised contrastive learning. We use a standard deep network architecture to encode inputs comprising local 3D blocks of electron microscopy data (about 4 micrometers on a side) into 64-dimensional embedding vectors. The network is trained via a contrastive loss to map semantically related inputs to similar coordinates in the embedding space. This is close to the popular SimCLR setup, except that we also require an instance segmentation of the volume (tracing out individual cells and cell fragments), which we use in two important ways.

First, the input 3D electron microscopy data are explicitly masked by the segmentation, forcing the network to focus only on the central cell within each block. Second, we leverage the segmentation to automatically define which inputs are semantically related: positive pairs for the contrastive loss are drawn from nearby locations on the same segmented cell and trained to have similar representations, while inputs drawn from different cells are trained to have dissimilar representations. Importantly, publicly available automated segmentations of the human and mouse datasets were sufficiently accurate to train SegCLR without requiring laborious review and correction by human experts.
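
The two uses of the segmentation can be illustrated with a minimal sketch. This is not the SegCLR training code: the function names, the zero fill value for masked voxels, the temperature, and the 2-dimensional toy embeddings are all assumptions chosen for illustration (the real embeddings are 64-dimensional), and the loss follows the general SimCLR-style normalized-temperature form rather than any confirmed implementation detail.

```python
import math


def mask_to_segment(em_block, seg_block, segment_id, fill=0):
    """Keep EM voxels that belong to segment_id; overwrite the rest with fill."""
    return [
        [
            [voxel if seg == segment_id else fill
             for voxel, seg in zip(em_row, seg_row)]
            for em_row, seg_row in zip(em_plane, seg_plane)
        ]
        for em_plane, seg_plane in zip(em_block, seg_block)
    ]


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def contrastive_loss(embeddings, positive_of, temperature=0.1):
    """Mean contrastive loss; positive_of[i] indexes the same-cell partner of i."""
    losses = []
    for i, anchor in enumerate(embeddings):
        # Temperature-scaled similarity of the anchor to every other example.
        logits = {
            k: cosine_similarity(anchor, other) / temperature
            for k, other in enumerate(embeddings) if k != i
        }
        # Numerically stable log-sum-exp over the positive and all negatives.
        top = max(logits.values())
        log_denominator = top + math.log(
            sum(math.exp(value - top) for value in logits.values()))
        losses.append(log_denominator - logits[positive_of[i]])
    return sum(losses) / len(losses)


# Toy batch: examples 0 and 1 come from cell A, examples 2 and 3 from cell B.
positive_of = [1, 0, 3, 2]
aligned = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
scrambled = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
loss_aligned = contrastive_loss(aligned, positive_of)
loss_scrambled = contrastive_loss(scrambled, positive_of)

# A 1x2x2 toy block in which one voxel belongs to a neighboring segment.
masked = mask_to_segment([[[5, 7], [9, 11]]], [[[1, 2], [1, 1]]], segment_id=1)
```

In this toy batch the loss is near zero when same-cell examples already sit close together, and large when their embeddings are scrambled across cells. That difference is the signal that pushes the encoder to group locations on the same cell.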

Reducing Annotation Training Requirements by Three Orders of Magnitude

SegCLR embeddings can be used in diverse downstream settings, whether supervised (e.g., training classifiers) or unsupervised (e.g., clustering or content-based image retrieval). In the supervised setting, embeddings simplify the training of classifiers, and can greatly reduce ground truth labeling requirements. For example, we found that for identifying cellular subcompartments (axon, dendrite, soma, etc.) a simple linear classifier trained on top of SegCLR embeddings outperformed a fully supervised deep network trained on the same task, while using only about one thousand labeled examples instead of millions.
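
As a rough illustration of the supervised setting, the sketch below trains a linear (softmax regression) classifier on a handful of labeled embeddings and scores it with mean F1. Everything here is a toy assumption: the 2-dimensional embeddings, the three class names, the learning rate, and the step count are invented for the example, and the post does not specify how its linear classifiers were fit.

```python
import math


def class_scores(weights, biases, x):
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_linear_classifier(embeddings, labels, num_classes, lr=0.2, steps=500):
    """Fit softmax regression by full-batch gradient descent from zero weights."""
    dim, n = len(embeddings[0]), len(embeddings)
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(steps):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(embeddings, labels):
            probs = softmax(class_scores(weights, biases, x))
            for c in range(num_classes):
                # Gradient of the mean cross-entropy w.r.t. the score of class c.
                error = (probs[c] - float(c == y)) / n
                grad_b[c] += error
                for d in range(dim):
                    grad_w[c][d] += error * x[d]
        for c in range(num_classes):
            biases[c] -= lr * grad_b[c]
            for d in range(dim):
                weights[c][d] -= lr * grad_w[c][d]
    return weights, biases


def predict(weights, biases, x):
    scores = class_scores(weights, biases, x)
    return max(range(len(scores)), key=scores.__getitem__)


def mean_f1(true_labels, predicted_labels, num_classes):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    f1_scores = []
    for c in range(num_classes):
        pairs = list(zip(true_labels, predicted_labels))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        denominator = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(f1_scores) / num_classes


# Hypothetical labeled embeddings: 0 = axon, 1 = dendrite, 2 = soma.
embeddings = [[2.0, 0.1], [1.8, -0.2], [2.2, 0.3],
              [-1.0, 1.9], [-0.8, 2.1], [-1.2, 1.7],
              [-1.0, -2.0], [-1.1, -1.8], [-0.9, -2.2]]
labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]
weights, biases = train_linear_classifier(embeddings, labels, num_classes=3)
predictions = [predict(weights, biases, x) for x in embeddings]
score = mean_f1(labels, predictions, num_classes=3)
```

Because the encoder has already done the heavy lifting, the classifier itself has only one weight vector and one bias per class. That small parameter count is a plausible reason a few labeled examples can suffice.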

| We assessed the classification performance for axon, dendrite, soma, and astrocyte subcompartments in the human cortex dataset via mean F1-Score, while varying the number of training examples used. Linear classifiers trained on top of SegCLR embeddings matched or exceeded the performance of a fully supervised deep classifier (horizontal line), while using a fraction of the training data. |

Distinguishing Cell Types, Even from Small Fragments

Distinguishing different cell types is an important step towards understanding how brain circuits develop and function in health and disease. Human experts can learn to identify some cortical cell types based on morphological features, but manual cell typing is laborious and ambiguous cases are common. Cell typing also becomes more difficult when only small fragments of cells are available, which is common for many cells in current connectomic reconstructions.

| Human experts manually labeled cell types for a small number of proofread cells in each dataset. In the mouse cortex dataset, experts labeled six neuron types (top) and four glia types (not shown). In the human cortex dataset, experts labeled two neuron types (not shown) and four glia types (bottom). (Rows not to scale with each other.) |

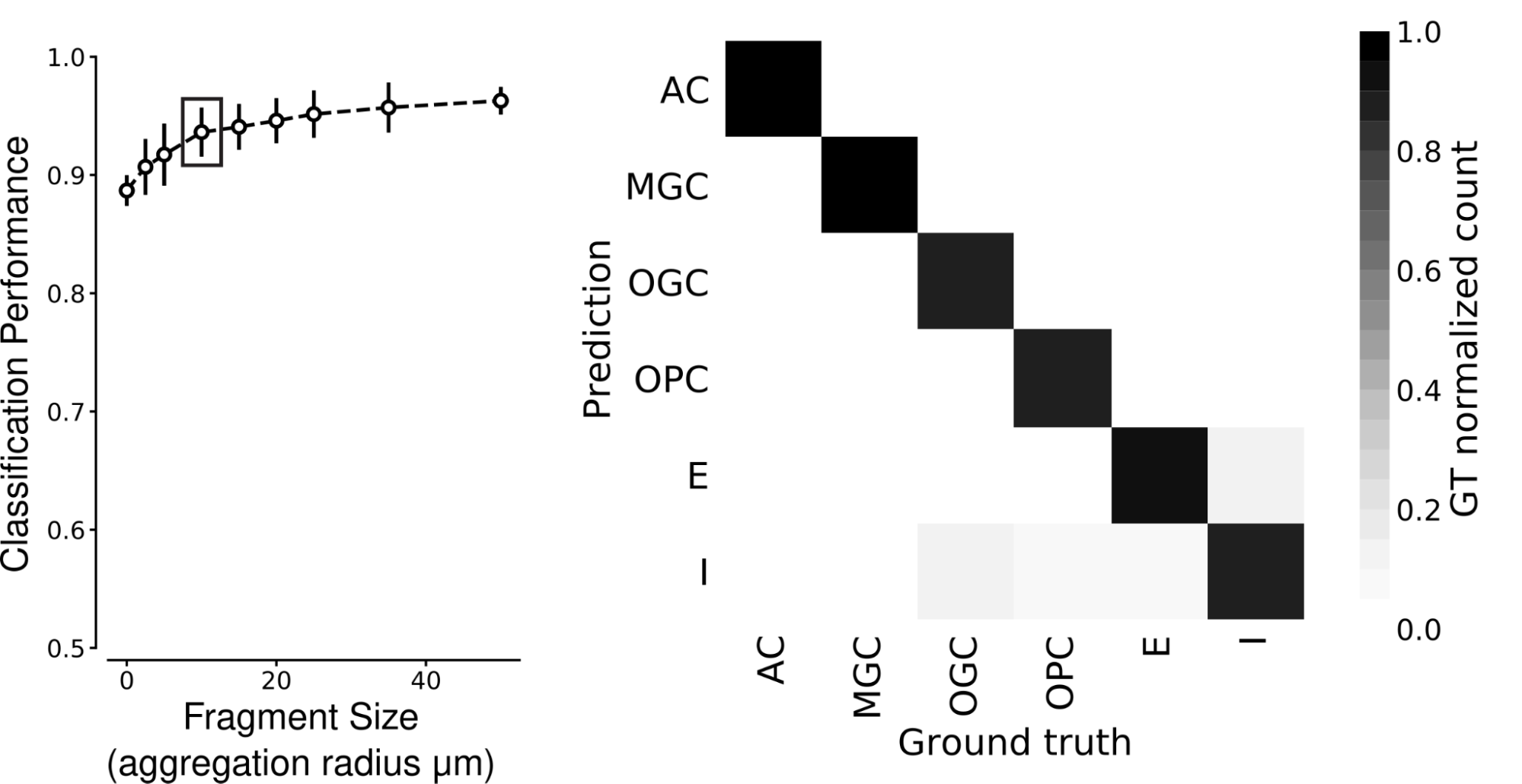

We found that SegCLR accurately infers human and mouse cell types, even for small fragments. Before classification, we averaged the embeddings collected within each cell over a set aggregation distance, defined as a radius around a central point. Human cortical cell types can be identified with high accuracy at aggregation radii as small as 10 micrometers, even for types that experts find difficult to distinguish, such as microglia (MGC) versus oligodendrocyte precursor cells (OPC).
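
The aggregation step can be sketched as a simple average over nearby embeddings. This sketch assumes straight-line (Euclidean) distance from the central point and a hypothetical list of `(position, embedding)` nodes per cell; the post does not specify how distance is measured along the cell, so treat both as illustrative choices.

```python
import math


def aggregate_embedding(nodes, center_um, radius_um):
    """Average the embeddings of all nodes within radius_um of center_um."""
    selected = [embedding for position_um, embedding in nodes
                if math.dist(position_um, center_um) <= radius_um]
    if not selected:
        raise ValueError("no embeddings within the aggregation radius")
    # zip(*selected) groups the values of each embedding dimension together.
    return [sum(values) / len(selected) for values in zip(*selected)]


# Hypothetical nodes on one cell: (xyz position in micrometers, 2-D embedding).
nodes = [
    ((0.0, 0.0, 0.0), [1.0, 0.0]),
    ((5.0, 0.0, 0.0), [0.0, 1.0]),
    ((30.0, 0.0, 0.0), [10.0, 10.0]),
]
single = aggregate_embedding(nodes, (0.0, 0.0, 0.0), radius_um=0.0)
local = aggregate_embedding(nodes, (0.0, 0.0, 0.0), radius_um=10.0)
```

With a radius of zero only the embedding at the central point is used, and a 10 micrometer radius averages the two nearby nodes while ignoring the distant one.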

| SegCLR can classify cell types, even from small fragments. Left: Classification performance over six human cortex cell types for shallow ResNet models trained on SegCLR embeddings for different sized cell fragments. Aggregation radius zero corresponds to very small fragments with only a single embedding. Cell type performance reaches high accuracy (0.938 mean F1-Score) for fragments with aggregation radii of only 10 micrometers (boxed point). Right: Class-wise confusion matrix at 10 micrometers aggregation radius. Darker shading along the diagonal indicates that predicted cell types agree with expert labels in most cases. AC: astrocyte; MGC: microglia cell; OGC: oligodendrocyte cell; OPC: oligodendrocyte precursor cell; E: excitatory neuron; I: inhibitory neuron. |

In the mouse cortex, ten cell types could be distinguished with high accuracy at an aggregation radius of 25 micrometers.

| Left: Classification performance over the ten mouse cortex cell types reaches 0.832 mean F1-Score for fragments with aggregation radius 25 micrometers (boxed point). Right: The class-wise confusion matrix at 25 micrometers aggregation radius. Boxes indicate broad groups (glia, excitatory neurons, and inhibitory interneurons). P: pyramidal cell; THLC: thalamocortical axon; BC: basket cell; BPC: bipolar cell; MC: Martinotti cell; NGC: neurogliaform cell. |

In additional cell type applications, we used unsupervised clustering of SegCLR embeddings to reveal further neuronal subtypes, and demonstrated how uncertainty estimation can be used to restrict classification to high confidence subsets of the dataset, e.g., when only a few cell types have expert labels.
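
One simple way to picture restricting classification to a high-confidence subset is to withhold any prediction whose top class probability falls below a threshold. The post does not say which uncertainty estimator was used, so the maximum-probability rule, the 0.9 threshold, and the function name below are stand-in assumptions rather than the method from the paper.

```python
def confident_predictions(class_probs, class_names, min_confidence=0.9):
    """Return the predicted class name per example, or None when unsure."""
    results = []
    for probs in class_probs:
        best = max(range(len(probs)), key=probs.__getitem__)
        # Abstain when the classifier's top probability is below the threshold.
        results.append(class_names[best] if probs[best] >= min_confidence else None)
    return results


# Hypothetical classifier outputs for three fragments over two labeled types.
class_probs = [[0.95, 0.05], [0.60, 0.40], [0.02, 0.98]]
kept = confident_predictions(class_probs, ["E", "I"])
```

The middle fragment is left unlabeled rather than forced into one of the two known types. Abstaining this way matters when many cells in the dataset belong to types that have no expert labels.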

Revealing Patterns of Brain Connectivity

Finally, we showed how SegCLR can be used for automated analysis of brain connectivity by cell typing the synaptic partners of reconstructed cells throughout the mouse cortex dataset. Knowing the connectivity patterns between specific cell types is fundamental to interpreting large-scale connectomic reconstructions of brain wiring, but this typically requires manual tracing to identify partner cell types. Using SegCLR, we replicated brain connectivity findings that previously relied on intensive manual tracing, while extending their scale in terms of the number of synapses, cell types, and brain areas analyzed. (See the paper for further details.)
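
Once synaptic partners have predicted cell types, summarizing connectivity reduces to counting synapses per (presynaptic type, postsynaptic type) pair. The sketch below assumes a hypothetical list of `(pre_id, post_id)` synapses and a dictionary of predicted types per segment ID; neither reflects the actual data format of the released datasets.

```python
from collections import Counter


def connectivity_by_type(synapses, predicted_type):
    """Count synapses for each (presynaptic type, postsynaptic type) pair."""
    counts = Counter()
    for pre_id, post_id in synapses:
        pre_type = predicted_type.get(pre_id)
        post_type = predicted_type.get(post_id)
        # Skip synapses whose partner has no (confident) predicted type.
        if pre_type is None or post_type is None:
            continue
        counts[(pre_type, post_type)] += 1
    return counts


# Hypothetical segment IDs and predicted types (P, BC, MC as in the figure legend).
predicted_type = {1: "P", 2: "BC", 3: "MC", 4: "P"}
synapses = [(1, 2), (1, 3), (4, 2), (5, 2)]
type_counts = connectivity_by_type(synapses, predicted_type)
```

Here segment 5 has no predicted type, so its synapse is excluded from the tally instead of being assigned to a guessed category.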

What’s Next?

SegCLR captures rich cellular features and can greatly simplify downstream analyses compared to working directly with raw image and segmentation data. We are excited to see what the community can discover using the ~8 billion embeddings we are releasing for the human and mouse cortical datasets (example access code; browsable human and mouse views in Neuroglancer). By reducing complex microscopy data to rich and compact embedding representations, SegCLR opens many novel avenues for biological insight, and may serve as a link to complementary modalities for high-dimensional characterization at the cellular and subcellular levels, such as spatially-resolved transcriptomics.