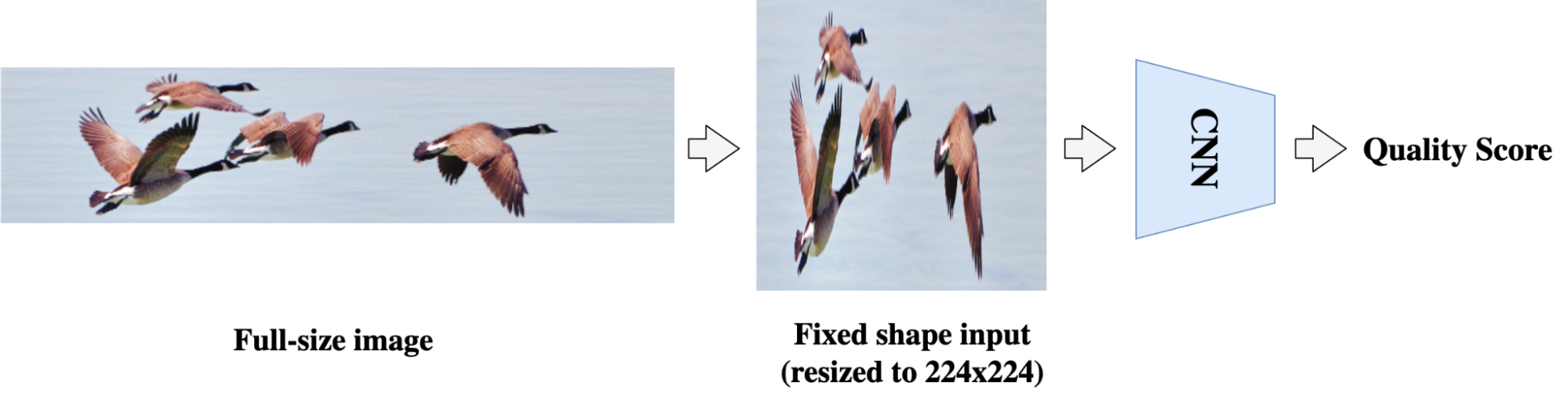

Understanding the aesthetic and technical quality of images is important for providing a better visual experience for users. Image quality assessment (IQA) uses models to build a bridge between an image and a user’s subjective perception of its quality. In the deep learning era, many IQA approaches, such as NIMA, have achieved success by leveraging the power of convolutional neural networks (CNNs). However, CNN-based IQA models are often constrained by the fixed-size input requirement in batch training, i.e., the input images need to be resized or cropped to a fixed shape. This preprocessing is problematic for IQA because images can have very different aspect ratios and resolutions. Resizing and cropping can alter image composition or introduce distortions, thus changing the quality of the image.

In CNN-based models, images need to be resized or cropped to a fixed shape for batch training. However, such preprocessing can alter the image aspect ratio and composition, thus impacting image quality. Original image used under CC BY 2.0 license.

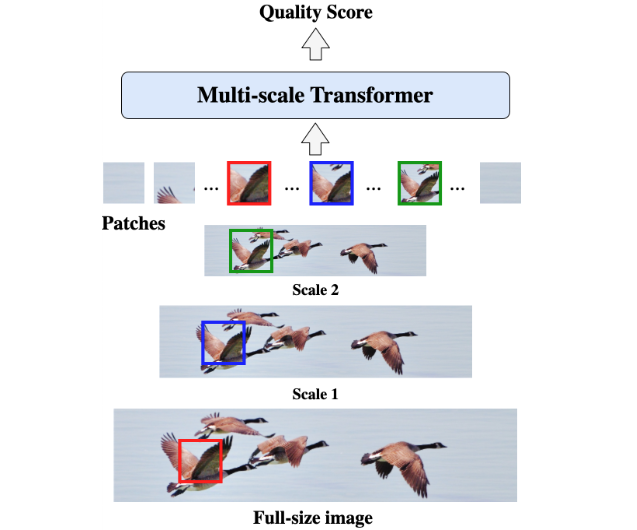

In “MUSIQ: Multi-scale Image Quality Transformer”, published at ICCV 2021, we propose a patch-based multi-scale image quality transformer (MUSIQ) to bypass the CNN constraints on fixed input size and predict the image quality effectively on native-resolution images. The MUSIQ model supports the processing of full-size image inputs with varying aspect ratios and resolutions and allows multi-scale feature extraction to capture image quality at different granularities. To support positional encoding in the multi-scale representation, we propose a novel hash-based 2D spatial embedding combined with an embedding that captures the image scaling. We apply MUSIQ on four large-scale IQA datasets, demonstrating consistent state-of-the-art results across three technical quality datasets (PaQ-2-PiQ, KonIQ-10k, and SPAQ) and comparable performance to that of state-of-the-art models on the aesthetic quality dataset AVA.

The patch-based MUSIQ model can process the full-size image and extract multi-scale features, which better aligns with a person’s typical visual response.

In the following figure, we show a sample of images with their MUSIQ scores, followed in brackets by their mean opinion scores (MOS) from multiple human raters. Scores range from 0 to 100, with 100 being the highest perceived quality. As the figure shows, MUSIQ predicts high scores for images with high aesthetic quality and high technical quality, and it predicts low scores for images that are not aesthetically pleasing (low aesthetic quality) or that contain visible distortions (low technical quality).

| Image category | Sample 1: MUSIQ [MOS] | Sample 2: MUSIQ [MOS] |
| --- | --- | --- |
| High quality | 76.10 [74.36] | 69.29 [70.92] |
| Low aesthetic quality | 55.37 [53.18] | 32.50 [35.47] |
| Low technical quality | 14.93 [14.38] | 15.24 [11.86] |

Predicted MUSIQ score (and ground truth) on images from the KonIQ-10k dataset. Top: MUSIQ predicts high scores for high quality images. Middle: MUSIQ predicts low scores for images with low aesthetic quality, such as images with poor composition or lighting. Bottom: MUSIQ predicts low scores for images with low technical quality, such as images with visible distortion artifacts (e.g., blurry, noisy).

The Multi-scale Image Quality Transformer

MUSIQ tackles the challenge of learning IQA on full-size images. Unlike CNN-based models, which are often constrained to a fixed resolution, MUSIQ can handle inputs with arbitrary aspect ratios and resolutions.

To accomplish this, we first make a multi-scale representation of the input image, containing the native resolution image and its resized variants. To preserve the image composition, we maintain its aspect ratio during resizing. After obtaining the pyramid of images, we then partition the images at different scales into fixed-size patches that are fed into the model.

Illustration of the multi-scale image representation in MUSIQ.
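The construction of the multi-scale representation can be sketched in a few lines of Python. This is a minimal sketch that tracks only image shapes and patch counts, not pixels. The target longer-side lengths (384 and 224), the patch size (32), the zero-padding of edge patches, and all function names are illustrative assumptions; the text above does not specify them.

```python
import math


def resized_shape(height, width, longer_side):
    """Shape after resizing so the longer side equals `longer_side`,
    keeping the aspect ratio (and therefore the composition)."""
    scale = longer_side / max(height, width)
    return max(1, round(height * scale)), max(1, round(width * scale))


def patch_grid(height, width, patch_size):
    """Rows and columns of fixed-size patches, assuming that patches
    overlapping the image border are zero-padded (an assumption)."""
    return math.ceil(height / patch_size), math.ceil(width / patch_size)


def multiscale_patch_counts(height, width, longer_sides=(384, 224), patch_size=32):
    """Return (height, width, patch_rows, patch_cols) for the native image
    followed by each resized variant in the pyramid."""
    shapes = [(height, width)]
    shapes += [resized_shape(height, width, s) for s in longer_sides]
    return [(h, w, *patch_grid(h, w, patch_size)) for (h, w) in shapes]


# A 480x640 image yields three scales with different numbers of patches.
for h, w, rows, cols in multiscale_patch_counts(480, 640):
    print(f"{h}x{w}: {rows}x{cols} = {rows * cols} patches")
```

Note that the number of patches, and therefore the token sequence length, varies with the input image, which is why the model needs position and scale encodings that do not depend on a fixed grid.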

Since patches come from images of varying resolutions, we need to effectively encode the multi-aspect-ratio, multi-scale input into a sequence of tokens that captures pixel, spatial, and scale information. To achieve this, we design three encoding components in MUSIQ: 1) a patch encoding module to encode patches extracted from the multi-scale representation; 2) a novel hash-based spatial embedding module to encode the 2D spatial position of each patch; and 3) a learnable scale embedding to encode different scales. In this way, we can effectively encode the multi-scale input as a sequence of tokens, serving as the input to the Transformer encoder.
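One way to picture how the three encodings combine is the sketch below. It rests on several assumptions that the text above does not confirm: that a patch at row `i`, column `j` is hashed into a fixed `G x G` lookup table by proportional scaling, that the three encodings are summed element-wise (the usual convention for Transformer embeddings), and that the tables are randomly initialized. The names `hash_position`, `make_table`, and `encode_token` are hypothetical, and the patch vector stands in for the output of the patch encoding module.

```python
import random


def hash_position(row, col, n_rows, n_cols, grid_size):
    """Map a patch's (row, col) within an n_rows x n_cols patch grid to a
    cell of a fixed grid_size x grid_size table (assumed hashing rule)."""
    return row * grid_size // n_rows, col * grid_size // n_cols


def make_table(n_entries, dim, rng):
    """Stand-in for a learnable embedding table: small random vectors."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(n_entries)]


def encode_token(patch_vec, row, col, n_rows, n_cols, scale_idx,
                 spatial_table, scale_table, grid_size):
    """Sum the patch encoding, spatial embedding, and scale embedding."""
    gi, gj = hash_position(row, col, n_rows, n_cols, grid_size)
    spatial = spatial_table[gi * grid_size + gj]
    scale = scale_table[scale_idx]
    return [p + s + c for p, s, c in zip(patch_vec, spatial, scale)]


rng = random.Random(0)
GRID, DIM = 10, 4
spatial_table = make_table(GRID * GRID, DIM, rng)
scale_table = make_table(3, DIM, rng)  # native resolution plus two resized variants

# Bottom-right patches of a 15x20 grid and of a 9x12 grid land in nearby cells,
# so the same image region gets a similar position code at every scale.
print(hash_position(14, 19, 15, 20, GRID), hash_position(8, 11, 9, 12, GRID))
token = encode_token([0.0] * DIM, 14, 19, 15, 20, 0, spatial_table, scale_table, GRID)
```

Because the table has a fixed size regardless of the image, the same lookup works for any resolution or aspect ratio.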

To predict the final image quality score, we use the standard approach of prepending an additional learnable “classification token” (CLS). The CLS token state at the output of the Transformer encoder serves as the final image representation. We then add a fully connected layer on top to predict the image quality score. The figure below provides an overview of the MUSIQ model.
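The prediction step itself is small enough to sketch in code. In the minimal sketch below, `toy_encoder` is a deliberately trivial stand-in for the Transformer encoder (it replaces every token with the sequence mean), and `predict_score`, the weights, and the bias are hypothetical names and values chosen for illustration only.

```python
def toy_encoder(sequence):
    """Stand-in for a Transformer encoder: every output token is the mean of
    the input tokens, so the CLS output depends on all patch tokens."""
    dim = len(sequence[0])
    mean = [sum(tok[d] for tok in sequence) / len(sequence) for d in range(dim)]
    return [list(mean) for _ in sequence]


def predict_score(patch_tokens, cls_token, encoder, weights, bias):
    """Prepend the CLS token, encode, and apply a fully connected layer
    to the CLS state at the encoder output."""
    sequence = [cls_token] + patch_tokens
    encoded = encoder(sequence)
    cls_state = encoded[0]
    return sum(w * x for w, x in zip(weights, cls_state)) + bias


# The head only reads the CLS state, so it works for any number of patches.
short = predict_score([[1.0, 2.0], [3.0, 4.0]], [0.0, 0.0], toy_encoder, [3.0, 0.5], 1.0)
longer = predict_score([[1.0, 2.0]] * 7, [0.0, 0.0], toy_encoder, [3.0, 0.5], 1.0)
print(short, longer)
```

Reading the score from a single token is what lets one prediction head serve sequences of different lengths, which matters here because the token count changes with image size.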

Since MUSIQ only changes the input encoding, it is compatible with any Transformer variant. To demonstrate the effectiveness of the proposed method, in our experiments we use the classic Transformer with a relatively lightweight setting so that the model size is comparable to ResNet-50.

Benchmark and Evaluation

To evaluate MUSIQ, we run experiments on multiple large-scale IQA datasets. On each dataset, we report Spearman’s rank correlation coefficient (SRCC) and the Pearson linear correlation coefficient (PLCC) between our model prediction and the human evaluators’ mean opinion score. SRCC and PLCC are correlation metrics ranging from -1 to 1. Higher PLCC and SRCC mean better alignment between model prediction and human evaluation. The graph below shows that MUSIQ outperforms other methods on PaQ-2-PiQ, KonIQ-10k, and SPAQ.
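For readers unfamiliar with the two metrics, here is a minimal standard-library sketch of how they are computed. PLCC is the Pearson correlation of the raw scores, and SRCC is the Pearson correlation of their ranks. The six score pairs are the sample predictions and MOS values quoted earlier in this post; they are far too few to constitute an evaluation and serve only to make the example concrete.

```python
import math


def pearson(xs, ys):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)


def ranks(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            result[order[k]] = average_rank
        start = end + 1
    return result


def spearman(xs, ys):
    """Spearman's rank correlation coefficient (SRCC)."""
    return pearson(ranks(xs), ranks(ys))


predicted = [76.10, 69.29, 55.37, 32.50, 14.93, 15.24]
mos = [74.36, 70.92, 53.18, 35.47, 14.38, 11.86]
print(f"SRCC = {spearman(predicted, mos):.3f}, PLCC = {pearson(predicted, mos):.3f}")
```

On these six pairs the SRCC works out to 33/35, roughly 0.943, because the model swaps the order of only the two lowest-rated images. SRCC depends only on the ordering of the scores, whereas PLCC also reflects how linearly the predicted values track the MOS.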

Notably, the PaQ-2-PiQ test set is entirely composed of large pictures having at least one dimension exceeding 640 pixels. This is very challenging for traditional deep learning approaches, which require resizing. MUSIQ can outperform previous methods by a large margin on the full-size test set, which verifies its robustness and effectiveness.

It is also worth mentioning that previous CNN-based methods often required sampling as many as 20 crops for each image during testing. This kind of multi-crop ensemble is a way to mitigate the fixed shape constraint in the CNN models. But since each crop is only a sub-view of the whole image, the ensemble is still an approximate approach. Moreover, multi-crop inference adds cost for every crop and, because the crops are sampled, can introduce randomness in the result. In contrast, because MUSIQ takes the full-size image as input, it can directly learn the best aggregation of information across the full image, and it only needs to run inference once.

To further verify that the MUSIQ model captures different information at different scales, we visualize the attention weights on each image at different scales.

Attention visualization from the output tokens to the multi-scale representation, including the original resolution image and two proportionally resized images. Brighter areas indicate higher attention, which means that those areas are more important for the model output. Images for illustration are taken from the AVA dataset.

We observe that MUSIQ tends to focus on more detailed areas in the full, high-resolution images and on more global areas in the resized ones. For example, for the flower photo above, the model’s attention on the original image focuses on the petal details, and the attention shifts to the buds at lower resolutions. This shows that the model learns to capture image quality at different granularities.

Conclusion

We propose a multi-scale image quality transformer (MUSIQ), which can handle full-size image input with varying resolutions and aspect ratios. By transforming the input image to a multi-scale representation with both global and local views, the model can capture the image quality at different granularities. Although MUSIQ is designed for IQA, it can be applied to other scenarios where task labels are sensitive to image resolution and aspect ratio. The MUSIQ model and checkpoints are available at our GitHub repository.

Acknowledgements

This work is made possible through a collaboration spanning several teams across Google. We’d like to acknowledge contributions from Qifei Wang, Yilin Wang and Peyman Milanfar.