Hi All,

I’m running into unexpectedly slow processing when using generators to create a dataset. Details: https://stackoverflow.com/questions/71459793/tensorflow-slow-processing-with-generator

Can someone from the community take a look at this generator code and help me understand what I’m doing wrong?

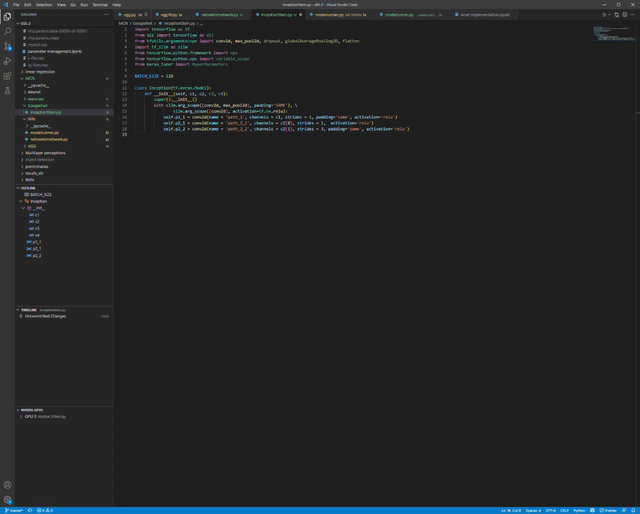

    import time

    import pandas as pd
    import tensorflow as tf


    def getSplit(original_list, n):
        # Split a list into consecutive chunks of at most n items.
        return [original_list[i:i + n] for i in range(0, len(original_list), n)]


    #
    # 200 files -> 48 Mb (1 file)
    # 15 files in memory at a time
    # 5 generators
    # 3 files per generator
    #
    def pandasGenerator(s3files, n=3):
        print(f"Processing: {s3files} to : {tf.get_static_value(s3files)}")
        s3files = tf.get_static_value(s3files)
        # Strip the b'...' wrapper from the byte-string representation.
        s3files = [str(s3file)[2:-1] for s3file in s3files]
        batches = getSplit(s3files, n)
        for batch in batches:
            t = time.process_time()
            print(f"Processing Batch: {batch}")
            panda_ds = pd.concat([pd.read_parquet(s3file) for s3file in batch], ignore_index=True)
            elapsed_time = time.process_time() - t
            print(f"base_read_time: {elapsed_time}")
            # Yield one (features, label) pair per row.
            for row in panda_ds.itertuples(index=False):
                pan_row = dict(row._asdict())
                labels = pan_row.pop('label')
                yield dict(pan_row), labels
        return


    def createDS(s3bucket, s3prefix):
        s3files = getFileLists(bucket=s3bucket, prefix=s3prefix)
        dataset = (tf.data.Dataset.from_tensor_slices(getSplit(s3files, 40))
                   .interleave(
                       lambda files: tf.data.Dataset.from_generator(
                           pandasGenerator,
                           output_signature=(
                               { },
                               tf.TensorSpec(shape=(), dtype=tf.float64)),
                           args=(files, 3)),
                       num_parallel_calls=tf.data.AUTOTUNE
                   )).prefetch(tf.data.AUTOTUNE)
        return dataset
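
To make the numbers in the comment block concrete, here is a minimal sketch of how `getSplit` carves up the file list. The file names are hypothetical placeholders (the real list comes from `getFileLists`), and the "15 files in memory" figure assumes all five generators are active at the same time.

```python
def getSplit(original_list, n):
    """Split a list into consecutive chunks of at most n items."""
    return [original_list[i:i + n] for i in range(0, len(original_list), n)]


# Hypothetical placeholder names standing in for the real S3 file list.
s3files = [f"s3://example-bucket/part-{i:03d}.parquet" for i in range(200)]

# Outer split: 40 files per group, one group per generator.
groups = getSplit(s3files, 40)

# Inner split: each generator reads its group 3 files at a time.
batches_per_group = [getSplit(group, 3) for group in groups]

num_generators = len(groups)
batches_in_first_group = len(batches_per_group[0])

# Upper bound on files held in memory if every generator is active at once:
# each generator holds at most one batch (its largest is 3 files).
max_files_in_memory = sum(
    max(len(batch) for batch in batches) for batches in batches_per_group
)

print(num_generators)          # 5
print(batches_in_first_group)  # 14 (13 batches of 3 files, then 1 batch of 1)
print(max_files_in_memory)     # 15
```

Since 40 is not a multiple of 3, the last batch in each group holds a single file.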

submitted by /u/h1t35hv1
