Just like that, summer falls into September, and some of the most anticipated games of the year, like the Cyberpunk 2077: Phantom Liberty expansion, PAYDAY 3 and Party Animals, are dropping into the GeForce NOW library at launch this month. They’re part of 24 new games hitting the cloud gaming service in September. And the…

Automatic speech recognition (ASR) technology has made conversations more accessible with live captions in remote conferencing software, mobile applications, and head-worn displays. However, to maintain real-time responsiveness, live caption systems often display interim predictions that are updated as new utterances are received. This can cause text instability (a “flicker” where previously displayed text is updated, shown in the captions on the left in the video below), which can impair users’ reading experience due to distraction, fatigue, and difficulty following the conversation.

In “Modeling and Improving Text Stability in Live Captions”, presented at ACM CHI 2023, we formalize this problem of text stability through a few key contributions. First, we quantify the text instability by employing a vision-based flicker metric that uses luminance contrast and discrete Fourier transform. Second, we introduce a stability algorithm to stabilize the rendering of live captions via tokenized alignment, semantic merging, and smooth animation. Finally, we conducted a user study (N=123) to understand viewers’ experience with live captioning. Our statistical analysis demonstrates a strong correlation between our proposed flicker metric and viewers’ experience. Furthermore, it shows that our proposed stabilization techniques significantly improve viewers’ experience (e.g., the captions on the right in the video above).

| Raw ASR captions vs. stabilized captions |

Metric

Inspired by previous work, we propose a flicker-based metric to quantify text stability and objectively evaluate the performance of live captioning systems. Specifically, our goal is to quantify the flicker in a grayscale live caption video. We achieve this by comparing the difference in luminance between individual frames (frames in the figures below) that constitute the video. Large visual changes in luminance are obvious (e.g., addition of the word “bright” in the figure on the bottom), but subtle changes (e.g., update from “… this gold. Nice..” to “… this. Gold is nice”) may be difficult for readers to discern. However, converting the change in luminance to its constituent frequencies exposes both the obvious and subtle changes.

Thus, for each pair of contiguous frames, we convert the difference in luminance into its constituent frequencies using the discrete Fourier transform. We then sum over each of the low and high frequencies to quantify the flicker in this pair. Finally, we average over all of the frame pairs to get a per-video flicker.

For instance, we can see below that two identical frames (top) yield a flicker of 0, while two non-identical frames (bottom) yield a non-zero flicker. Note that higher values of the metric indicate more flicker in the video and thus a worse user experience than lower values.
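
The three steps above (frame difference, Fourier transform, averaging) can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: it assumes frames are small grids of grayscale luminance values, uses a naive DFT from the standard library, and sums all frequency magnitudes with equal weight. The function names are hypothetical, and any weighting of low versus high frequencies used in the paper is not modeled here.

```python
import cmath


def dft_1d(values):
    """Naive discrete Fourier transform of a 1-D sequence."""
    n = len(values)
    return [
        sum(values[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
        for f in range(n)
    ]


def dft_2d(grid):
    """2-D DFT computed as row-wise, then column-wise, 1-D DFTs."""
    rows = [dft_1d(row) for row in grid]
    cols = [dft_1d(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def pair_flicker(frame_a, frame_b):
    """Sum of spectral magnitudes of the luminance difference of two frames.

    Assumption: every frequency is weighted equally.
    """
    diff = [
        [b - a for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]
    return sum(abs(c) for row in dft_2d(diff) for c in row)


def video_flicker(frames):
    """Average pair_flicker over all contiguous frame pairs."""
    pairs = list(zip(frames, frames[1:]))
    if not pairs:
        return 0.0
    return sum(pair_flicker(a, b) for a, b in pairs) / len(pairs)


# Toy 2x2 "frames": identical frames give zero flicker, a changed pixel does not.
same = [[0.0, 1.0], [1.0, 0.0]]
changed = [[0.0, 1.0], [1.0, 1.0]]
print(video_flicker([same, same]))
print(video_flicker([same, changed]))
```

A real caption video would need a fast Fourier transform rather than this quadratic-time version; the sketch only shows the shape of the computation.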

| Illustration of the flicker metric between two identical frames. |

| Illustration of the flicker between two non-identical frames. |

Stability algorithm

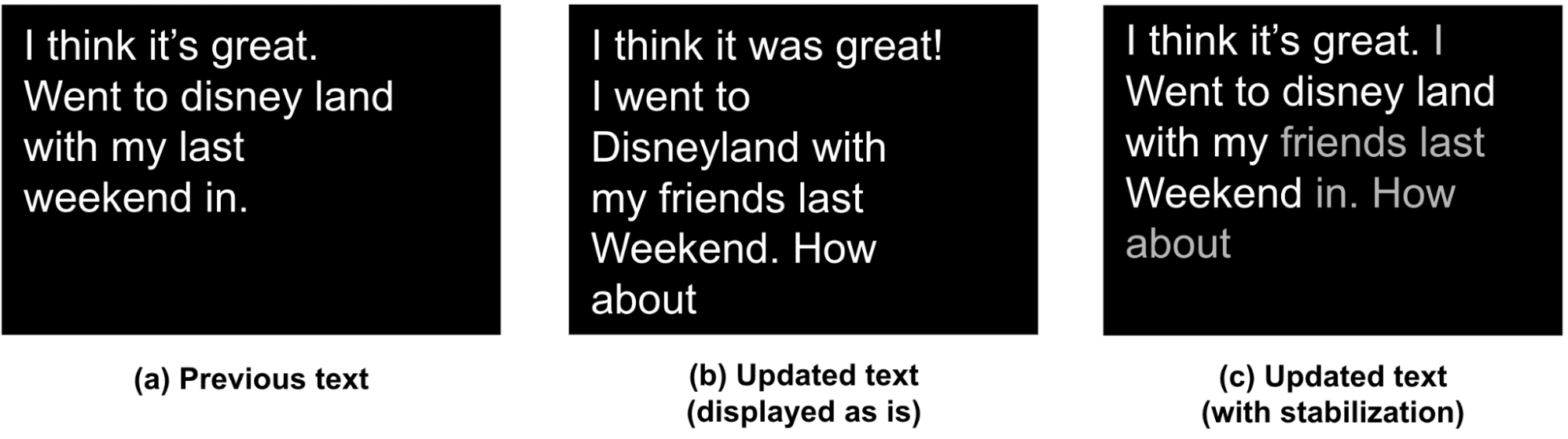

To improve the stability of live captions, we propose an algorithm that takes as input the already rendered sequence of tokens (e.g., “Previous” in the figure below) and the new sequence of ASR predictions, and outputs an updated stabilized text (e.g., “Updated text (with stabilization)” below). It considers both the natural language understanding (NLU) aspect and the ergonomic aspect (display, layout, etc.) of the user experience in deciding when and how to produce a stable updated text. Specifically, our algorithm performs tokenized alignment, semantic merging, and smooth animation to achieve this goal. In what follows, a token is defined as a word or punctuation produced by ASR.

| We show (a) the previously already rendered text, (b) the baseline layout of updated text without our merging algorithm, and (c) the updated text as generated by our stabilization algorithm. |

Our algorithm addresses the challenge of producing stabilized updated text by first identifying three classes of changes (highlighted in red, green, and blue below):

- Red (case A): Addition of tokens to the end of previously rendered captions (e.g., “How about”).

- Green (case B): Addition or deletion of tokens in the middle of already rendered captions.

  - B1: Addition of tokens (e.g., “I” and “friends”). These may or may not affect the overall comprehension of the captions, but may lead to layout change. Such layout changes are not desired in live captions as they cause significant jitter and poorer user experience. Here “I” does not add to the comprehension but “friends” does. Thus, it is important to balance updates with stability, especially for B1 type tokens.

  - B2: Removal of tokens, e.g., “in” is removed in the updated sentence.

- Blue (case C): Re-captioning of tokens. This includes token edits that may or may not have an impact on the overall comprehension of the captions.

  - C1: Proper nouns like “disney land” are updated to “Disneyland”.

  - C2: Grammatical shorthands like “it’s” are updated to “It was”.

| Classes of changes between previously displayed and updated text. |

Alignment, merging, and smoothing

To maximize text stability, our goal is to align the old sequence with the new sequence using updates that make minimal changes to the existing layout while ensuring accurate and meaningful captions. To achieve this, we leverage a variant of the Needleman-Wunsch algorithm with dynamic programming to merge the two sequences depending on the class of tokens as defined above:

- Case A tokens: We directly add case A tokens, and line breaks as needed to fit the updated captions.

- Case B tokens: Our preliminary studies showed that users preferred stability over accuracy for previously displayed captions. Thus, we only update case B tokens if the updates do not break an existing line layout.

- Case C tokens: We compare the semantic similarity of case C tokens by transforming original and updated sentences into sentence embeddings, measuring their dot-product, and updating them only if they are semantically different (similarity < 0.85) and the update will not cause new line breaks.

Finally, we leverage animations to reduce visual jitter. We implement smooth scrolling and fading of newly added tokens to further stabilize the overall layout of the live captions.
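
To make the alignment step concrete, here is a minimal sketch of textbook Needleman-Wunsch global alignment over token lists, followed by a rough labeling of each aligned position with the change classes described earlier. It is an illustration under stated assumptions rather than the paper's variant: the scoring values, the function names, and the labeling rule are hypothetical, and the layout checks and sentence-embedding comparison are not modeled.

```python
def align_tokens(old, new, match=1, mismatch=-1, gap=-1):
    """Globally align two token lists with Needleman-Wunsch.

    Returns (old_token, new_token) pairs; None marks a gap on that side.
    """
    def sub(i, j):
        return match if old[i - 1] == new[j - 1] else mismatch

    rows, cols = len(old) + 1, len(new) + 1
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            score[i][j] = max(
                score[i - 1][j - 1] + sub(i, j),  # match or substitution
                score[i - 1][j] + gap,            # token deleted from old
                score[i][j - 1] + gap,            # token inserted in new
            )

    # Trace back from the bottom-right corner to recover one optimal alignment.
    pairs = []
    i, j = len(old), len(new)
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(i, j):
            pairs.append((old[i - 1], new[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            pairs.append((old[i - 1], None))
            i -= 1
        else:
            pairs.append((None, new[j - 1]))
            j -= 1
    pairs.reverse()
    return pairs


def classify_changes(pairs):
    """Label each aligned pair: 'A' appended, 'B' mid-text add/delete, 'C' re-captioned."""
    last_old = max((k for k, (o, _) in enumerate(pairs) if o is not None), default=-1)
    labels = []
    for k, (old_tok, new_tok) in enumerate(pairs):
        if old_tok == new_tok:
            labels.append("unchanged")
        elif old_tok is None:
            labels.append("A" if k > last_old else "B")
        elif new_tok is None:
            labels.append("B")
        else:
            labels.append("C")
    return labels


pairs = align_tokens(["we", "went", "there"], ["we", "went", "there", "How", "about"])
print(classify_changes(pairs))
```

A merging policy would then decide, per label, whether to apply the update: always for case A, and for cases B and C only when the conditions listed above hold.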

User evaluation

We conducted a user study with 123 participants to (1) examine the correlation of our proposed flicker metric with viewers’ experience of the live captions, and (2) assess the effectiveness of our stabilization techniques.

We manually selected 20 videos on YouTube to obtain a broad coverage of topics including video conferences, documentaries, academic talks, tutorials, news, comedy, and more. For each video, we selected a 30-second clip with at least 90% speech.

We prepared four types of renderings of live captions to compare:

- Raw ASR: raw speech-to-text results from a speech-to-text API.

- Raw ASR + thresholding: only display interim speech-to-text result if its confidence score is higher than 0.85.

- Stabilized captions: captions using our algorithm described above with alignment and merging.

- Stabilized and smooth captions: stabilized captions with smooth animation (scrolling + fading) to assess whether softened display experience helps improve the user experience.

We collected user ratings by asking the participants to watch the recorded live captions and rate their assessments of comfort, distraction, ease of reading, ease of following the video, fatigue, and whether the captions impaired their experience.

Correlation between flicker metric and user experience

We calculated Spearman’s coefficient between the flicker metric and each of the behavioral measurements (values range from -1 to 1, where negative values indicate a negative relationship between the two variables, positive values indicate a positive relationship, and zero indicates no relationship). Shown below, our study demonstrates statistically significant (𝑝 < 0.001) correlations between our flicker metric and users’ ratings. The absolute values of the coefficient are around 0.3, indicating a moderate relationship.

| Behavioral Measurement | Correlation to Flickering Metric* |
| --- | --- |
| Comfort | -0.29 |
| Distraction | 0.33 |
| Easy to read | -0.31 |
| Easy to follow videos | -0.29 |
| Fatigue | 0.36 |
| Impaired Experience | 0.31 |

| Spearman correlation tests of our proposed flickering metric. *p < 0.001. |
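
For readers unfamiliar with Spearman’s coefficient, it is the Pearson correlation computed on the ranks of the two variables, with tied values sharing their average rank. The sketch below shows that computation using only the standard library. The function names are hypothetical and the input numbers are made-up toy values, not data from the study.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy values only: flicker scores and a rating that falls as flicker rises.
toy_flicker = [0.1, 0.4, 0.9, 1.5]
toy_comfort = [7, 6, 4, 2]
print(spearman(toy_flicker, toy_comfort))
```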

Stabilization of live captions

Our proposed technique (stabilized smooth captions) received consistently better ratings, significant as measured by the Mann-Whitney U test (p < 0.01 in the figure below), in five out of six aforementioned survey statements. That is, users considered the stabilized captions with smoothing to be more comfortable and easier to read, while feeling less distraction, fatigue, and impairment to their experience than other types of rendering.

| User ratings from 1 (Strongly Disagree) – 7 (Strongly Agree) on survey statements. (**: p<0.01, ***: p<0.001; ****: p<0.0001; ns: non-significant) |

Conclusion and future direction

Text instability in live captioning significantly impairs users’ reading experience. This work proposes a vision-based metric to model caption stability that statistically significantly correlates with users’ experience, and an algorithm to stabilize the rendering of live captions. Our proposed solution can be potentially integrated into existing ASR systems to enhance the usability of live captions for a variety of users, including those with translation needs or those with hearing accessibility needs.

Our work represents a substantial step towards measuring and improving text stability. This can be evolved to include language-based metrics that focus on the consistency of the words and phrases used in live captions over time. These metrics may provide a reflection of user discomfort as it relates to language comprehension and understanding in real-world scenarios. We are also interested in conducting eye-tracking studies (e.g., videos shown below) to track viewers’ gaze patterns, such as eye fixation and saccades, allowing us to better understand the types of errors that are most distracting and how to improve text stability for those.

| Illustration of tracking a viewer’s gaze when reading raw ASR captions. |

| Illustration of tracking a viewer’s gaze when reading stabilized and smoothed captions. |

By improving text stability in live captions, we can create more effective communication tools and improve how people connect in everyday conversations in familiar or, through translation, unfamiliar languages.

Acknowledgements

This work is a collaboration across multiple teams at Google. Key contributors include Xingyu “Bruce” Liu, Jun Zhang, Leonardo Ferrer, Susan Xu, Vikas Bahirwani, Boris Smus, Alex Olwal, and Ruofei Du. We wish to extend our thanks to our colleagues who provided assistance, including Nishtha Bhatia, Max Spear, and Darcy Philippon. We would also like to thank Lin Li, Evan Parker, and CHI 2023 reviewers.

Caching is as fundamental to computing as arrays, symbols, or strings. Various layers of caching throughout the stack hold instructions from memory while pending on your CPU. They enable you to reload the page quickly and without re-authenticating, should you navigate away. They also dramatically decrease application workloads, and increase throughput by not re-running the same queries repeatedly.

Caching is not new to NVIDIA Triton Inference Server, which is a system tuned to answering questions in the form of running inferences on tensors. Running inferences is a relatively computationally expensive task that often calls on the same inference to run repeatedly. This naturally lends itself to using a caching pattern.

The NVIDIA Triton team recently implemented the Triton response cache using the Triton local cache library. They have also built a cache API to make this caching pattern extensible within Triton. The Redis team then leveraged that API to build the Redis cache for NVIDIA Triton.

In this post, the Redis team explores the benefits of the new Redis implementation of the Triton Caching API. We explore how to get started and discuss some of the best practices for using Redis to supercharge your NVIDIA Triton instance.

What is Redis?

Redis is an acronym for REmote DIctionary Server. It is a NoSQL database that operates as a key-value data structure store. Redis is memory-first, meaning that the entire dataset in Redis is stored in memory, and optionally persisted to disk, based on configuration. Because it is a key-value database completely held in memory, Redis is blazingly fast. Execution times are measured in microseconds, and throughputs in tens of thousands of operations a second.

The remarkable speed and typical access pattern of Redis make it ideal for caching. Redis is synonymous with caching and is consequently one of the built-in distributed caches of most major application frameworks across a variety of developer communities.

What is local cache?

The local cache is an in-memory derivation of the most common caching pattern out there (cache-aside). It is simple and efficient, making it easy to grasp and implement. After receiving a query, NVIDIA Triton:

- Computes a hash of the input query, including the tensor and some metadata. This becomes the inference key.

- Checks for a previously inferred result for that tensor at that key.

- Returns any results found.

- Performs the inference if no results are found.

- Caches the inference in memory using the key for storage.

- Returns the inference.

‘Local’ means that it is staying local to the process and storing the cache in the system’s main memory. Figure 1 shows the implementation of this pattern.
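
The steps listed above can be sketched as a small cache-aside wrapper. This is a minimal illustration in Python, not Triton's implementation: the class name, the SHA-256 key derivation, and the `infer_fn` callback are all hypothetical stand-ins for the hashing and model execution that Triton performs internally.

```python
import hashlib
import json


class LocalResponseCache:
    """Cache-aside wrapper that keeps results in process memory."""

    def __init__(self, infer_fn):
        self._infer_fn = infer_fn  # hypothetical stand-in for running the model
        self._store = {}           # lives and dies with this process
        self.misses = 0

    @staticmethod
    def make_key(model_name, tensor):
        """Hash the input tensor plus some metadata into an inference key."""
        payload = json.dumps({"model": model_name, "tensor": tensor}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def infer(self, model_name, tensor):
        key = self.make_key(model_name, tensor)
        if key in self._store:          # hit: return the earlier result
            return self._store[key]
        self.misses += 1                # miss: run the inference
        result = self._infer_fn(tensor)
        self._store[key] = result       # cache it under the key
        return result


cache = LocalResponseCache(lambda tensor: [2 * v for v in tensor])
cache.infer("toy_model", [1, 2, 3])
cache.infer("toy_model", [1, 2, 3])  # served from memory
print(cache.misses)
```

Because `_store` is an ordinary in-process dictionary, it is emptied whenever the process restarts and cannot be seen by any other process.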

Benefits of local cache

There are a variety of benefits that flow naturally from using this pattern. Because the queries are cached, they can be retrieved again easily without rerunning the tensor through the models. Because everything is maintained locally in the process memory, there is no need to leave the process or machine to retrieve the cached data. These two in concert can dramatically increase throughput, as well as decrease the cost of this computation.

Drawbacks of local cache

This technique does have drawbacks. Because the cache is tied directly into the process memory, each time the Triton process restarts, it starts from square one (generally referred to as a cold start). You will not see the benefits from caching while the cache warms up. Also, because the cache is process-locked, other instances of Triton will not be able to share the cache, leading to duplication of caching across each node.

The other major drawback concerns resource contention. Since the local cache is tied to the process, it is limited to the resources of the system that Triton runs on. This means that it is impossible to horizontally scale the resources allocated to the cache (that is, to distribute the cache across multiple machines). The only way to expand the local cache is vertical scaling: making the server that runs Triton bigger.

Benefits of distributed caching with Redis

Unlike local caching, distributed caching leverages an external service (such as Redis) to distribute the cache off the local server. This confers several advantages to the NVIDIA Triton caching API:

- Redis is not bound to the available system resources of the same machine as Triton, or for that matter, a single machine.

- Redis is decoupled from Triton’s process life cycle, enabling multiple Triton instances to leverage the same cache.

- Redis is extremely fast (execution times are typically sub-millisecond).

- Redis is a significantly more specialized, feature-rich, and tunable caching service compared to the Triton local cache.

- Redis provides immediate access to tried and tested high availability, horizontal scaling, and cache-eviction features out of the box.

Distributed caching with Redis works much the same way as the local cache. Rather than staying within the same process, it crosses out of the Triton server process to Redis to check the cache and store inferences. After receiving a query, NVIDIA Triton:

- Computes a hash of the input query, including the tensor and some metadata. This becomes the inference key.

- Checks Redis for a previous run inference.

- Returns that inference, if it exists.

- Runs the tensor through Triton if the inference does not exist.

- Stores the inference in Redis.

- Returns the inference.

Architecturally, this is shown in Figure 2.
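
The practical effect of moving the cache out of process is that several servers can reuse each other's results. The sketch below illustrates this with a hypothetical in-memory `SharedStore` standing in for Redis so that it runs without a server. The class and method names are illustrative and are not Triton's cache API. A real Redis client such as redis-py also offers `get` and `set` methods, but it stores bytes, so real code would need to serialize results.

```python
class SharedStore:
    """In-memory stand-in for an external key-value service such as Redis."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


class InferenceServer:
    """Toy server that checks the shared store before running an inference."""

    def __init__(self, store, infer_fn):
        self._store = store
        self._infer_fn = infer_fn  # hypothetical stand-in for the model
        self.inferences_run = 0

    def infer(self, key, tensor):
        cached = self._store.get(key)
        if cached is not None:
            return cached
        self.inferences_run += 1
        result = self._infer_fn(tensor)
        self._store.set(key, result)
        return result


store = SharedStore()
server_a = InferenceServer(store, lambda t: sum(t))
server_b = InferenceServer(store, lambda t: sum(t))

server_a.infer("key-1", [1, 2, 3])  # miss: server A runs the model
server_b.infer("key-1", [1, 2, 3])  # hit: server B reuses A's result
print(server_a.inferences_run, server_b.inferences_run)
```

Restarting either toy server would also leave the store untouched, which is why an external cache sidesteps the cold start described earlier.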

Distributed cache set up and configuration

Setting up the distributed Redis cache requires two top-level steps:

- Deploy your Redis instance.

- Configure NVIDIA Triton to point at the Redis instance.

Triton will take care of the rest for you. To learn more about Redis, see redis.io, docs.redis.com, and Redis University.

To configure Triton to point at your Redis instance, use the --cache-config options in your start command. In the model config, enable the response cache for the model with response_cache { enable: true }.

tritonserver --cache-config redis,host=localhost --cache-config redis,port=6379

The Redis cache calls on you to minimally configure the host and port of your Redis instance. For a full enumeration of configuration options, see the Triton Redis Cache GitHub repo.

Best practices with Redis

Redis is lightweight, easy to use, and extremely fast. Even with its small footprint and simplicity, there is much you can configure in and around Redis to optimize it for your use case. This section highlights best practices for using and configuring Redis.

Minimize round-trip time

The only real drawback of using an external service like Redis over an in-process memory cache is that queries to Redis must, at a minimum, cross process boundaries. They typically need to cross server boundaries as well.

Because of this, minimizing round-trip time (RTT) is of paramount importance in optimizing the use of Redis as a cache. How to minimize RTT is far too complex a topic to dive into in this post, but a couple of key tips apply: keep your Redis servers local to your Triton servers and have them physically close to each other. If they are in a data center, try to keep them in the same rack or availability zone.

Scaling and high availability

Redis Cluster enables you to scale your Redis instances horizontally over multiple shards. The cluster includes the ability to replicate your Redis instance. If there is a failure in your primary shard, the replica can be promoted for high availability.

Maximum memory and eviction

If Redis memory is not capped, it will use all the available memory on the system that the OS will release to it. To cap it, set the maxmemory configuration key in redis.conf. But what happens if you set maxmemory and Redis runs out of memory? The default is, as you might expect, to stop accepting new writes to Redis.

However, you can also set an eviction policy. An eviction policy uses some basic intelligence to decide which keys might be good candidates to kick out of Redis. Allowing Redis to evict keys that no longer make sense to store enables it to continue accepting new writes without interruption when the memory fills.

For a full explanation of different Redis eviction policies, see key eviction in the Redis manual.
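
To show what an eviction policy does, here is a toy least-recently-used (LRU) cache in Python. It is only a sketch of the idea. Redis caps memory in bytes rather than in number of entries, and its LRU policies (for example, allkeys-lru) approximate recency by sampling instead of tracking an exact order. The class name and the `max_entries` parameter are hypothetical.

```python
from collections import OrderedDict


class LruCache:
    """Toy cache that evicts the least recently used key when full."""

    def __init__(self, max_entries):
        self.max_entries = max_entries
        self._data = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as recently used
        return self._data[key]

    def set(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used key


cache = LruCache(max_entries=2)
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")     # "a" is now more recent than "b"
cache.set("c", 3)  # the cache is full, so "b" is evicted
print(cache.get("b"), cache.get("a"), cache.get("c"))
```

With eviction in place, the write of "c" succeeds instead of being rejected, which mirrors the difference between the default behavior and an eviction policy described above.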

Durability and persistence

Redis is memory-first, meaning everything is stored in memory. If you do not configure persistence and the Redis process dies, it will essentially return to a cold-started state. (The cache will need to ‘warm up’ before you get the benefits from caching.)

There are two options for persisting Redis: taking periodic snapshots of the state of Redis in .rdb files, and keeping a log of all write commands in the append-only file. For a full explanation of these methods, see persistence in the Redis manual.

Speed comparison

Getting down to brass tacks, this section compares the performance of Triton without Redis and Triton with Redis. In the interest of simplicity, we leveraged the perf_analyzer tool the Triton team built for measuring performance with Triton. We tested with two separate models, DenseNet and Simple.

We ran Triton Server version 23.06 on a Google Cloud Platform (GCP) n1-standard-4 VM with a single NVIDIA T4 GPU. We also ran a vanilla open-source Redis instance on a GCP n2-standard-4 VM. Finally, we ran the Triton client image in Docker on a GCP e2-medium VM.

We ran the perf_analyzer tool with both the DenseNet and Simple models, 10 times on each caching configuration: no caching, Redis as the cache, and the local cache. We then averaged the results of these runs.

It is important to note that these runs assume a 100% cache-hit rate. So, the measurement is the difference between the performance of Triton when it has encountered the entry in the past and when it has not.

We used the following command for the DenseNet model:

perf_analyzer -m densenet_onnx -u triton-server:8000

We used the following command for the Simple model:

perf_analyzer -m simple -u triton-server:8000

In the case of the DenseNet model, the results showed that using either cache was dramatically better than running with no cache. Without caching, Triton was able to handle 80 inferences per second (inference/sec) with an average latency of 12,680 µs. With Redis, it was about 4x faster, processing 329 inference/sec with an average latency of 3,030 µs.

Interestingly, while local caching was somewhat faster than Redis, as you would expect it to be, it was only marginally faster. Local caching resulted in a throughput of 355 inference/sec with a latency of 2,817 µs, only about 8% faster. In this case, it’s clear that the speed tradeoff of caching locally versus in Redis is a marginal one. Given all the extra benefits that come from using a distributed versus a local cache, distributed will almost certainly be the way to go when handling these kinds of data.

The Simple model tells a slightly more complicated story. In the case of the Simple model, not using any cache enabled a throughput of 1,358 inference/sec with a latency of 735 µs. Redis was somewhat faster with a throughput of 1,639 inference/sec and a latency of 608 µs. Local was faster than Redis with a throughput of 2,753 inference/sec with a latency of 363 µs.

This is an important case to note, as not all uses are created equal. The system of record, in this case, may be fast enough and not worth adding the extra system for the 20% boost in throughput of Redis. Even with the halving of latency in the case of the local cache, it may not be worth the resource contention, depending on other factors such as cache hit rate and available system resources.
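
The ratios quoted in this section follow directly from the reported averages, as the short Python check below shows. The `blended_latency` helper is an added, simplified model, not something measured in the experiment: it assumes that a cache miss costs the same as running with no cache at all, ignoring the lookup overhead, to illustrate how a hit rate below 100% would dilute the gain.

```python
# (throughput in inference/sec, average latency in microseconds), as reported above.
densenet = {"none": (80, 12680), "redis": (329, 3030), "local": (355, 2817)}
simple = {"none": (1358, 735), "redis": (1639, 608), "local": (2753, 363)}


def throughput_ratio(results, baseline, candidate):
    """How many times higher the candidate's throughput is than the baseline's."""
    return results[candidate][0] / results[baseline][0]


def latency_ratio(results, baseline, candidate):
    """Candidate latency as a fraction of baseline latency."""
    return results[candidate][1] / results[baseline][1]


def blended_latency(hit_rate, hit_latency_us, miss_latency_us):
    """Expected latency under a simplified hit/miss model (an assumption)."""
    return hit_rate * hit_latency_us + (1 - hit_rate) * miss_latency_us


print(f"DenseNet, Redis vs. none:  {throughput_ratio(densenet, 'none', 'redis'):.2f}x")
print(f"DenseNet, local vs. Redis: {throughput_ratio(densenet, 'redis', 'local'):.3f}x")
print(f"Simple, Redis vs. none:    {throughput_ratio(simple, 'none', 'redis'):.3f}x")
print(f"Simple, local latency:     {latency_ratio(simple, 'none', 'local'):.2f} of no-cache")
print(f"DenseNet with Redis at a 50% hit rate: {blended_latency(0.5, 3030, 12680):.0f} us")
```

The first four lines reproduce the "about 4x", "about 8%", "20%", and "halving" figures in the text. The last line is purely illustrative.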

Best practices for managing trade-offs

As shown in the experiment, the difference between models, expected inputs, and expected outputs is critically important for assessing what, if any, caching is appropriate for your Triton instance.

Whether caching adds value is largely a function of how computationally expensive your queries are. The more computationally expensive your queries, the more each query will benefit from caching.

The relative performance of local versus Redis will largely be a function of how large the output tensors are from the model. The larger the output tensors, the more the transport costs will impact the throughput allowable by Redis.

Of course, the larger the output tensors are, the fewer output tensors you’ll be able to store in the local cache before you run out of room and begin contending with Triton for resources. Fundamentally, these factors need to be balanced when assessing which caching solution works best for your deployment of Triton.

| Benefits | Drawbacks |
| --- | --- |
| 1. Horizontally scalable 2. Effectively unlimited memory access 3. Enables high availability and disaster recovery 4. Removes resource contention 5. Minimizes cold starts | A distributed Redis cache requires calls over the network. Naturally, you can expect somewhat lower throughput and higher latency as compared to the local cache. |

Summary

Distributed caching is an old trick that developers use to boost system performance while enabling horizontal scalability and separation of concerns. With the introduction of the Redis Cache for Triton Inference Server, you can now leverage this technique to greatly increase the performance and efficiency of your Triton instance, while managing heavier workloads and enabling multiple Triton instances to share in the same cache. Fundamentally, by offloading caching to Redis, Triton can concentrate its resources on its fundamental role—running inferences.

Get started with Triton Redis Cache and NVIDIA Triton Inference Server. For more information about setting up and administering Redis instances, see redis.io and docs.redis.com.

Learn to create an end-to-end machine learning pipeline for large datasets with this virtual, hands-on workshop.

Episode 5 of the NVIDIA CUDA Tutorials Video series is out. Jackson Marusarz, product manager for Compute Developer Tools at NVIDIA, introduces a suite of tools to help you build, debug, and optimize CUDA applications, making development easy and more efficient.

This includes:

IDEs and debuggers: integration with popular IDEs like NVIDIA Nsight Visual Studio Edition, NVIDIA Nsight Visual Studio Code Edition, and NVIDIA Nsight Eclipse simplifies code development and debugging for CUDA applications. These tools adapt familiar CPU-based programming workflows for GPU development, offering features like IntelliSense and code completion.

System-wide insights: NVIDIA Nsight Systems provides system-wide performance insights, visualization of CPU processes, GPU streams, and resource bottlenecks. It also traces APIs and libraries, helping developers locate optimization opportunities.

CUDA kernel profiling: NVIDIA Nsight Compute enables detailed analysis of CUDA kernel performance. It collects hardware and software counters and uses a built-in expert system for issue detection and performance analysis.

Learn about key features for each tool, and discover the best fit for your needs.

Resources

- Learn more about NVIDIA Developer Tools.

- Download the CUDA toolkit.

- Dive deeper or ask questions in CUDA Developer Tools forums.

Each year, nearly 32 million people travel through the Bengaluru Airport, or BLR, one of the busiest airports in the world’s most populous nation. To provide such multitudes with a safer, quicker experience, the airport in the city formerly known as Bangalore is tapping vision AI technologies powered by Industry.AI. A member of the NVIDIA…

In the global entertainment landscape, TV show and film production stretches far beyond Hollywood or Bollywood; it’s a worldwide phenomenon. However, while streaming platforms have broadened the reach of content, dubbing and translation technology still has plenty of room for growth. Deepdub acts as a digital bridge, providing access to content by using generative…

Simple and effective interaction between human and quadrupedal robots paves the way towards creating intelligent and capable helper robots, forging a future where technology enhances our lives in ways beyond our imagination. Key to such human-robot interaction systems is enabling quadrupedal robots to respond to natural language instructions. Recent developments in large language models (LLMs) have demonstrated the potential to perform high-level planning. Yet, it remains a challenge for LLMs to comprehend low-level commands, such as joint angle targets or motor torques, especially for inherently unstable legged robots, necessitating high-frequency control signals. Consequently, most existing work presumes the provision of high-level APIs for LLMs to dictate robot behavior, inherently limiting the system’s expressive capabilities.

In “SayTap: Language to Quadrupedal Locomotion”, we propose an approach that uses foot contact patterns (which refer to the sequence and manner in which a four-legged agent places its feet on the ground while moving) as an interface to bridge human commands in natural language and a locomotion controller that outputs low-level commands. This results in an interactive quadrupedal robot system that allows users to flexibly craft diverse locomotion behaviors (e.g., a user can ask the robot to walk, run, jump or make other movements using simple language). We contribute an LLM prompt design, a reward function, and a method to expose the SayTap controller to the feasible distribution of contact patterns. We demonstrate that SayTap is a controller capable of achieving diverse locomotion patterns that can be transferred to real robot hardware.

SayTap method

The SayTap approach uses a contact pattern template, which is a 4 × T matrix of 0s and 1s, with 0s representing an agent’s feet in the air and 1s for feet on the ground. From top to bottom, each row in the matrix gives the foot contact patterns of the front left (FL), front right (FR), rear left (RL) and rear right (RR) feet. SayTap’s control frequency is 50 Hz, so each 0 or 1 lasts 0.02 seconds. In this work, a desired foot contact pattern is defined by a cyclic sliding window of size Lw and of shape 4 × Lw. The sliding window extracts from the contact pattern template four foot-ground contact flags, which indicate if a foot is on the ground or in the air between t + 1 and t + Lw. The figure below provides an overview of the SayTap method.
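
The cyclic sliding window can be sketched in a few lines of Python. This is an illustration rather than the SayTap code: the function name, the 0-based time index, and the tiny 4 × 4 template are assumptions made for the example.

```python
CONTROL_HZ = 50                  # control frequency stated above
STEP_SECONDS = 1 / CONTROL_HZ    # each 0 or 1 lasts 0.02 seconds

# Toy template with T = 4; rows are FL, FR, RL, RR (1 = on ground, 0 = in air).
TEMPLATE = [
    [1, 1, 0, 0],  # FL
    [0, 0, 1, 1],  # FR
    [0, 0, 1, 1],  # RL
    [1, 1, 0, 0],  # RR
]


def desired_contact_pattern(template, t, window):
    """Return the 4 x window slice for steps t+1 .. t+window, wrapping cyclically.

    Assumption: t is a 0-based index into the template columns.
    """
    length = len(template[0])
    return [
        [row[(t + 1 + k) % length] for k in range(window)]
        for row in template
    ]


# At t = 2 with Lw = 3, the window covers columns 3, 0, 1 of the template.
for label, row in zip(("FL", "FR", "RL", "RR"), desired_contact_pattern(TEMPLATE, 2, 3)):
    print(label, row)
```

The modulo operation is what makes the window cyclic: once it runs past the last column of the template, it wraps around to the first.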

SayTap introduces these desired foot contact patterns as a new interface between natural language user commands and the locomotion controller. The locomotion controller is used to complete the main task (e.g., following specified velocities) and to place the robot’s feet on the ground at the specified time, such that the realized foot contact patterns are as close to the desired contact patterns as possible. To achieve this, the locomotion controller takes the desired foot contact pattern at each time step as its input in addition to the robot’s proprioceptive sensory data (e.g., joint positions and velocities) and task-related inputs (e.g., user-specified velocity commands). We use deep reinforcement learning to train the locomotion controller and represent it as a deep neural network. During controller training, a random generator samples the desired foot contact patterns, and the policy is then optimized to output low-level robot actions that achieve the desired foot contact pattern. Then, at test time, an LLM translates user commands into foot contact patterns.

| SayTap approach overview. |

| SayTap uses foot contact patterns (e.g., 0 and 1 sequences for each foot in the inset, where 0s are foot in the air and 1s are foot on the ground) as an interface that bridges natural language user commands and low-level control commands. With a reinforcement learning-based locomotion controller that is trained to realize the desired contact patterns, SayTap allows a quadrupedal robot to take both simple and direct instructions (e.g., “Trot forward slowly.”) as well as vague user commands (e.g., “Good news, we are going to a picnic this weekend!”) and react accordingly. |

We demonstrate that the LLM is capable of accurately mapping user commands into foot contact pattern templates in specified formats when given properly designed prompts, even in cases when the commands are unstructured or vague. In training, we use a random pattern generator to produce contact pattern templates with varying pattern lengths T and foot-ground contact ratios within a cycle, based on a given gait type G, so that the locomotion controller learns from a wide distribution of movements, leading to better generalization. See the paper for more details.
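To make the idea of a random pattern generator concrete, here is a rough sketch. It is not the generator from the paper: the phase-offset table `GAIT_PHASES`, the length range, the contact-ratio range, and the function name are all assumptions chosen for illustration, and the sketch ignores details such as the longer suspension phase of a bounding gait.

```python
import numpy as np

# Hypothetical phase offsets (fraction of a cycle) per foot; rows are FL, FR, RL, RR.
GAIT_PHASES = {
    "trot": (0.0, 0.5, 0.5, 0.0),   # diagonally opposite feet move together
    "pace": (0.0, 0.5, 0.0, 0.5),   # feet on the same side move together
    "bound": (0.0, 0.0, 0.5, 0.5),  # front pair together, rear pair together
}


def random_contact_template(rng, gait, min_len=20, max_len=30):
    """Sample a 4 x T contact template for one gait type (illustrative only)."""
    num_steps = int(rng.integers(min_len, max_len + 1))    # pattern length T
    contact_ratio = float(rng.uniform(0.3, 0.7))           # share of the cycle on the ground
    contact_steps = max(1, int(round(contact_ratio * num_steps)))

    base = np.zeros(num_steps, dtype=int)
    base[:contact_steps] = 1                                # 1 = foot on the ground

    # Shift the base row by each foot's phase offset to build the four rows.
    rows = [np.roll(base, int(round(phase * num_steps))) for phase in GAIT_PHASES[gait]]
    return np.stack(rows)


template = random_contact_template(np.random.default_rng(0), "trot")
```

Sampling T and the contact ratio independently for each training episode is one simple way to expose a controller to a wide range of patterns within a gait type.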

Results

With a simple prompt that contains only three in-context examples of commonly seen foot contact patterns, an LLM can translate various human commands accurately into contact patterns and even generalize to those that do not explicitly specify how the robot should react.

SayTap prompts are concise and consist of four components: (1) general instruction that describes the tasks the LLM should accomplish; (2) gait definition that reminds the LLM of basic knowledge about quadrupedal gaits and how they can be related to emotions; (3) output format definition; and (4) examples that give the LLM chances to learn in-context. We also specify five velocities that allow a robot to move forward or backward, fast or slow, or remain still.

General instruction block

You are a dog foot contact pattern expert. Your job is to give a velocity and a foot contact pattern based on the input. You will always give the output in the correct format no matter what the input is.

Gait definition block

The following are description about gaits:
1. Trotting is a gait where two diagonally opposite legs strike the ground at the same time.
2. Pacing is a gait where the two legs on the left/right side of the body strike the ground at the same time.
3. Bounding is a gait where the two front/rear legs strike the ground at the same time. It has a longer suspension phase where all feet are off the ground, for example, for at least 25% of the cycle length. This gait also gives a happy feeling.

Output format definition block

The following are rules for describing the velocity and foot contact patterns:
1. You should first output the velocity, then the foot contact pattern.
2. There are five velocities to choose from: [-1.0, -0.5, 0.0, 0.5, 1.0].
3. A pattern has 4 lines, each of which represents the foot contact pattern of a leg.
4. Each line has a label. "FL" is front left leg, "FR" is front right leg, "RL" is rear left leg, and "RR" is rear right leg.
5. In each line, "0" represents foot in the air, "1" represents foot on the ground.

Example block

Input: Trot slowly
Output: 0.5
FL: 11111111111111111000000000
FR: 00000000011111111111111111
RL: 00000000011111111111111111
RR: 11111111111111111000000000

Input: Bound in place
Output: 0.0
FL: 11111111111100000000000000
FR: 11111111111100000000000000
RL: 00000011111111111100000000
RR: 00000011111111111100000000

Input: Pace backward fast
Output: -1.0
FL: 11111111100001111111110000
FR: 00001111111110000111111111
RL: 11111111100001111111110000
RR: 00001111111110000111111111

Input:

| SayTap prompt to the LLM. Text in blue is used for illustration and is not input to the LLM. |
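Because the prompt fixes the output format (a velocity followed by four labeled lines of 0s and 1s), the LLM's reply can be checked and converted into a contact pattern template before it reaches the controller. The parser below is a minimal sketch under that assumption; `parse_reply` and its error handling are hypothetical and not part of SayTap.

```python
import re

import numpy as np

ALLOWED_VELOCITIES = (-1.0, -0.5, 0.0, 0.5, 1.0)  # the five velocities listed in the prompt
FOOT_LABELS = ("FL", "FR", "RL", "RR")            # row order of the contact pattern template


def parse_reply(reply: str):
    """Parse 'velocity + four labeled 0/1 lines' into (velocity, 4 x T array)."""
    velocity_match = re.search(r"-?\d+(?:\.\d+)?", reply)
    if velocity_match is None:
        raise ValueError("no velocity found in reply")
    velocity = float(velocity_match.group())
    if velocity not in ALLOWED_VELOCITIES:
        raise ValueError(f"unexpected velocity: {velocity}")

    rows = []
    for label in FOOT_LABELS:
        row_match = re.search(rf"\b{label}:\s*([01]+)", reply)
        if row_match is None:
            raise ValueError(f"missing contact pattern for {label}")
        rows.append([int(c) for c in row_match.group(1)])

    if len({len(row) for row in rows}) != 1:
        raise ValueError("contact pattern rows have different lengths")
    return velocity, np.array(rows)


# Shortened, made-up reply used only to exercise the parser.
velocity, pattern = parse_reply("0.5\nFL: 1100\nFR: 0011\nRL: 0011\nRR: 1100")
```

Rejecting malformed replies at this boundary keeps the controller's input within the 4 X T binary format it was trained on.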

Following simple and direct commands

We demonstrate in the videos below that the SayTap system can successfully perform tasks where the commands are direct and clear. Although some commands are not covered by the three in-context examples, we are able to guide the LLM to express its internal knowledge from the pre-training phase via the “Gait definition block” (see the second block in our prompt above) in the prompt.

Following unstructured or vague commands

But what is more interesting is SayTap’s ability to process unstructured and vague instructions. With only a little hint in the prompt to connect certain gaits with general impressions of emotions, the robot bounds up and down when hearing exciting messages, like “We are going to a picnic!” Furthermore, it also presents the scenes accurately (e.g., moving quickly with its feet barely touching the ground when told the ground is very hot).

Conclusion and future work

We present SayTap, an interactive system for quadrupedal robots that allows users to flexibly craft diverse locomotion behaviors. SayTap introduces desired foot contact patterns as a new interface between natural language and the low-level controller. This new interface is straightforward and flexible. Moreover, it allows a robot to follow both direct instructions and commands that do not explicitly state how the robot should react.

One interesting direction for future work is to test if commands that imply a specific feeling will allow the LLM to output a desired gait. In the gait definition block shown in the results section above, we provide a sentence that connects a happy mood with bounding gaits. We believe that providing more information can augment the LLM’s interpretations (e.g., implied feelings). In our evaluation, the connection between a happy feeling and a bounding gait led the robot to act vividly when following vague human commands. Another interesting direction for future work is to introduce multi-modal inputs, such as videos and audio. Foot contact patterns translated from those signals will, in theory, still work with our pipeline and will unlock many more interesting use cases.

Acknowledgements

Yujin Tang, Wenhao Yu, Jie Tan, Heiga Zen, Aleksandra Faust and Tatsuya Harada conducted this research. This work was conceived and performed while the team was at Google Research and will be continued at Google DeepMind. The authors would like to thank Tingnan Zhang, Linda Luu, Kuang-Huei Lee, Vincent Vanhoucke and Douglas Eck for their valuable discussions and technical support in the experiments.

Dramatic gains in hardware performance have spawned generative AI, and a rich pipeline of ideas for future speedups that will drive machine learning to new heights, Bill Dally, NVIDIA’s chief scientist and senior vice president of research, said today in a keynote. Dally described a basket of techniques in the works — some already showing Read article >

NVIDIA DOCA SDK and acceleration framework empowers developers with extensive libraries, drivers, and APIs to create high-performance applications and services for NVIDIA BlueField DPUs and ConnectX SmartNICs. It fuels data center innovation, enabling rapid application deployment.

With comprehensive features, NVIDIA DOCA serves as a one-stop-shop for BlueField developers looking to accelerate data center workloads and AI applications at scale.

With over 10,000 developers already benefiting, NVIDIA DOCA is now generally available, granting access to a broader developer community to leverage the BlueField DPU platform for innovative AI and cloud services.

New NVIDIA DOCA 2.2 features and enhancements

NVIDIA DOCA 2.2 introduces new features and enhancements for offloading, accelerating, and isolating network, storage, security, and management infrastructure within the data center.

Programmability

The NVIDIA BlueField-3 DPU—in conjunction with its onboard, purpose-built data path accelerator (DPA) and the DOCA SDK framework—offers an unparalleled platform. It is now available for developers to create high-performance and scalable network applications that demand high throughput and low latency.

Data path accelerator

NVIDIA DOCA 2.2 delivers several enhancements to leverage the BlueField-3 DPA programming subsystem. DOCA DPA, a new compute subsystem that is part of the DOCA SDK package, offers a programming model for offloading communication-centric user code to run on the DPA processor. DOCA DPA helps offload traffic from the CPU and increases performance through DPU acceleration.

DOCA DPA also offers significant development benefits, including greater flexibility when creating custom emulations and congestion controls. Customized congestion control is critical for AI workflows, enabling performance isolation, improving fairness, and preventing packet drop on lossy networks.

The DOCA 2.2 release introduces the following SDKs:

- DOCA-FlexIO: A low-level SDK for DPA programming. Specifically, the DOCA FlexIO driver exposes the API for managing and running code over the DPA.

- DOCA-PCC: An SDK for congestion-control development that enables CSP and enterprise customers to create their own congestion control algorithms, increasing the stability and efficiency of network operations through higher bandwidth and lower latency.

NVIDIA also supplies the necessary toolchains, examples, and collateral to expedite and support development efforts. Note that NVIDIA DOCA DPA is available in both DPU mode and NIC mode.

Networking

NVIDIA DOCA and the BlueField-3 DPU together enable the development of applications that deliver breakthrough networking performance with a comprehensive, open development platform. Including a range of drivers, libraries, tools, and example applications, NVIDIA DOCA continues to evolve. This release offers the following additional features to support the development of networking applications.

NVIDIA DOCA Flow

With NVIDIA DOCA Flow, you can define and control the flow of network traffic, implement network policies, and manage network resources programmatically. It offers network virtualization, telemetry, load balancing, security enforcement, and traffic monitoring. These capabilities are beneficial for processing high packet workloads with low latency, conserving CPU resources and reducing power usage.

This release includes the following new features that offer immediate benefits to cloud deployments:

- Support for tunnel offloads (GENEVE and GRE): Offering enhanced security, visibility, scalability, flexibility, and extensibility, these tunnels are the building blocks for site communication, network isolation, and multi-tenancy. Specifically, GRE tunnels, which are used to connect separate networks and establish secure VPN communication, support overlay networks, offer protocol flexibility, and enable traffic engineering.

- Support for per-flow meters with a bps/pps option: Essential in cloud environments to monitor and analyze traffic (measure bandwidth or packet rate), manage QoS (enforce limits), or enhance security (block denial-of-service attacks).

- Enhanced mirror capability (FDB/switch domain): Used for monitoring, troubleshooting, security analysis, and performance optimization, this added functionality also provides better CPU utilization for mirrored workloads.
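As background for the per-flow meter item in the list above, the following is a generic token-bucket sketch of what metering a flow in bits per second or packets per second means. It is plain Python for illustration only: it does not use or represent the DOCA Flow API, and the class name, fields, and parameters are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucketMeter:
    """Generic token-bucket meter; not the DOCA Flow API."""

    rate: float              # tokens added per second (bits for bps, packets for pps)
    burst: float             # bucket capacity, i.e. the largest allowed burst
    last_time: float = 0.0   # timestamp of the previous packet, in seconds
    tokens: float = field(init=False)

    def __post_init__(self):
        self.tokens = self.burst  # start with a full bucket

    def allow(self, now: float, cost: float) -> bool:
        """Return True if a packet of the given cost conforms to the configured rate."""
        elapsed = now - self.last_time
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last_time = now
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False


# pps-style meter: each packet costs 1 token; 2 packets/s with a burst of 2.
meter = TokenBucketMeter(rate=2.0, burst=2.0)
decisions = [meter.allow(0.0, 1), meter.allow(0.0, 1), meter.allow(0.0, 1), meter.allow(0.5, 1)]
```

For a bps-style meter, the same class would be used with `cost` set to the packet size in bits. In hardware-offloaded metering, this bookkeeping runs on the device rather than on the host CPU.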

OVS-DOCA (Beta)

OVS-DOCA is a highly optimized virtual switch for NVIDIA Network Services. An extremely efficient design promotes next-generation performance and scale through an NVIDIA NIC or DPU. OVS-DOCA is now available in DOCA for DPU and DOCA for Host (binaries and source).

Based on Open vSwitch, OVS-DOCA offers the same northbound API, OpenFlow, CLI, and data interface, providing a drop-in replacement alternative to OVS. Using OVS-DOCA enables faster implementation of future NVIDIA innovative networking features.

BlueField-3 (enhanced) NIC mode (Beta)

This release benefits from an enhanced BlueField-3 NIC mode, currently in Beta. In contrast to BlueField-3 DPU mode, where offloading, acceleration, and isolation are all available, BlueField-3 NIC mode only offers acceleration features.

While continuing to leverage the low-power, less compute-intensive BlueField SKUs, the enhanced BlueField-3 NIC mode offers many advantages over the current ConnectX BlueField-2 NIC mode, including:

- Higher performance and lower latency at scale using local DPU memory

- Performant RDMA with Programmable Congestion Control (PCC)

- Programmability with DPA and additional BlueField accelerators

- Robust platform security with device attestation and on-card BMC

Note that BlueField-3 NIC mode will be productized as a software mode, not a separate SKU, to enable future DPU-mode usage. As such, BlueField-3 NIC mode is a fully supported software feature available on all BlueField-3 SKUs. DPA programmability for any BlueField-3 DPU operating in NIC mode mandates the installation of DOCA on the host and an active host-based service.

Services

NVIDIA DOCA services are containerized DOCA-based programs that provide an end-to-end solution for a given use case. These services are accessible through NVIDIA NGC, from which they can be easily deployed directly to the DPU. DOCA 2.2 gives you greater control and now enables offline installation of DOCA services.

NGC offline service installation

DOCA services installed from NGC require Internet connectivity. However, many customers operate in a secure production environment without Internet access. Providing the option for ‘nonconnected’ deployment enables service installation in a fully secure production environment, simplifying the process and avoiding the already unlikely scenario whereby each server would need a connection to complete the installation process.

For example, consider the installation of DOCA Telemetry Service (DTS) in a production environment to support metrics collection. The full installation process is completed in just two steps:

- Step 1: NGC download on connected server

- Step 2: Offline installation using internal secure delivery

Summary

NVIDIA DOCA 2.2 plays a pivotal and indispensable role in driving data center innovation and transforming cloud and enterprise data center networks for AI applications. By providing a comprehensive SDK and acceleration framework for BlueField DPUs, DOCA empowers developers with powerful libraries, drivers, and APIs, enabling the creation of high-performance applications and services.

With several new features and enhancements in DOCA 2.2, a number of immediate gains are available. In addition to the performance gains realized through DPU acceleration, the inclusion of the DOCA-FlexIO and DOCA-PCC SDKs gives developers accelerated-computing benefits for AI-centric workloads. These SDKs enable the creation of custom emulations and algorithms, reducing the time to market and significantly improving the overall development experience.

Additionally, networking-specific updates to NVIDIA DOCA Flow and OVS-DOCA offer simplified delivery pathways for software-defined networking and security solutions. These features increase efficiency and enhance visibility, scalability, and flexibility, which are essential for building sophisticated and secure infrastructures.

With wide-ranging contributions to data center innovation, AI application acceleration, and robust network infrastructure, DOCA is a crucial component of NVIDIA AI cloud services. As the industry moves towards more complex and demanding computing requirements, the continuous evolution of DOCA and integration with cutting-edge technologies will further solidify its position as a trailblazing platform for empowering the future of data centers and AI-driven solutions.

Download NVIDIA DOCA to begin your development journey with all the benefits DOCA has to offer. For more information, see the following resources: