Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

Discover the latest cybersecurity tools and trends with NVIDIA Deep Learning Institute workshops at GTC 2022.

In recent years video conferencing has played an increasingly important role in both work and personal communication for many users. Over the past two years, we have enhanced this experience in Google Meet by introducing privacy-preserving machine learning (ML) powered background features, also known as “virtual green screen”, which allows users to blur their backgrounds or replace them with other images. What is unique about this solution is that it runs directly in the browser without the need to install additional software.

So far, these ML-powered features have relied on CPU inference made possible by leveraging neural network sparsity, a common solution that works across devices, from entry level computers to high-end workstations. This enables our features to reach the widest audience. However, mid-tier and high-end devices often have powerful GPUs that remain untapped for ML inference, and existing functionality allows web browsers to access GPUs via shaders (WebGL).

With the latest update to Google Meet, we are now harnessing the power of GPUs to significantly improve the fidelity and performance of these background effects. As we detail in “Efficient Heterogeneous Video Segmentation at the Edge”, these advances are powered by two major components: 1) a novel real-time video segmentation model and 2) a new, highly efficient approach for in-browser ML acceleration using WebGL. We leverage this capability to develop fast ML inference via fragment shaders. This combination results in substantial gains in accuracy and latency, leading to crisper foreground boundaries.

|

| CPU segmentation vs. HD segmentation in Meet. |

Moving Towards Higher Quality Video Segmentation Models

To predict finer details, our new segmentation model now operates on high definition (HD) input images, rather than lower-resolution images, effectively doubling the resolution over the previous model. To accommodate this, the model must be of higher capacity to extract features with sufficient detail. Roughly speaking, doubling the input resolution quadruples the computation cost during inference.

Inference of high-resolution models using the CPU is not feasible for many devices. The CPU may have a few high-performance cores that enable it to execute arbitrary complex code efficiently, but it is limited in its ability for the parallel computation required for HD segmentation. In contrast, GPUs have many, relatively low-performance cores coupled with a wide memory interface, making them uniquely suitable for high-resolution convolutional models. Therefore, for mid-tier and high-end devices, we adopt a significantly faster pure GPU pipeline, which is integrated using WebGL.

This change inspired us to revisit some of the prior design decisions for the model architecture.

|

| HD segmentation model architecture. |

In aggregate, these changes substantially improve the mean Intersection over Union (IoU) metric by 3%, resulting in less uncertainty and crisper boundaries around hair and fingers.

We have also released the accompanying model card for this segmentation model, which details our fairness evaluations. Our analysis shows that the model is consistent in its performance across the various regions, skin-tones, and genders, with only small deviations in IoU metrics.

| Model | Resolution | Inference | IoU | Latency (ms) | ||||

| CPU segmenter | 256×144 | Wasm SIMD | 94.0% | 8.7 | ||||

| GPU segmenter | 512×288 | WebGL | 96.9% | 4.3 |

| Comparison of the previous segmentation model vs. the new HD segmentation model on a Macbook Pro (2018). |

Accelerating Web ML with WebGL

One common challenge for web-based inference is that web technologies can incur a performance penalty when compared to apps running natively on-device. For GPUs, this penalty is substantial, only achieving around 25% of native OpenGL performance. This is because WebGL, the current GPU standard for Web-based inference, was primarily designed for image rendering, not arbitrary ML workloads. In particular, WebGL does not include compute shaders, which allow for general purpose computation and enable ML workloads in mobile and native apps.

To overcome this challenge, we accelerated low-level neural network kernels with fragment shaders that typically compute the output properties of a pixel like color and depth, and then applied novel optimizations inspired by the graphics community. As ML workloads on GPUs are often bound by memory bandwidth rather than compute, we focused on rendering techniques that would improve the memory access, such as Multiple Render Targets (MRT).

MRT is a feature in modern GPUs that allows rendering images to multiple output textures (OpenGL objects that represent images) at once. While MRT was originally designed to support advanced graphics rendering such as deferred shading, we found that we could leverage this feature to drastically reduce the memory bandwidth usage of our fragment shader implementations for critical operations, like convolutions and fully connected layers. We do so by treating intermediate tensors as multiple OpenGL textures.

In the figure below, we show an example of intermediate tensors having four underlying GL textures each. With MRT, the number of GPU threads, and thus effectively the number of memory requests for weights, is reduced by a factor of four and saves memory bandwidth usage. Although this introduces considerable complexities in the code, it helps us reach over 90% of native OpenGL performance, closing the gap with native applications.

Conclusion

We have made rapid strides in improving the quality of real-time segmentation models by leveraging the GPU on mid-tier and high-end devices for use with Google Meet. We look forward to the possibilities that will be enabled by upcoming technologies like WebGPU, which bring compute shaders to the web. Beyond GPU inference, we’re also working on improving the segmentation quality for lower powered devices with quantized inference via XNNPACK WebAssembly.

Acknowledgements

Special thanks to those on the Meet team and others who worked on this project, in particular Sebastian Jansson, Sami Kalliomäki, Rikard Lundmark, Stephan Reiter, Fabian Bergmark, Ben Wagner, Stefan Holmer, Dan Gunnarsson, Stéphane Hulaud, and to all our team members who made this possible: Siargey Pisarchyk, Raman Sarokin, Artsiom Ablavatski, Jamie Lin, Tyler Mullen, Gregory Karpiak, Andrei Kulik, Karthik Raveendran, Trent Tolley, and Matthias Grundmann.

Looking for a change of art? Try using AI — that’s what 3D artist Nikola Damjanov is doing. Based in Serbia, Damjanov has over 15 years of experience in the graphics industry, from making 3D models and animations to creating high-quality visual effects for music videos and movies. Now an artist at game developer company Read article >

The post 3D Artist Creates Blooming, Generative Sculptures With NVIDIA RTX and AI appeared first on NVIDIA Blog.

Expert-led, hands-on conversational AI workshops at GTC 2022 are just $99 when you register by August 29 (standard price $500).

Expert-led, hands-on conversational AI workshops at GTC 2022 are just $99 when you register by August 29 (standard price $500).

Expert-led, hands-on conversational AI workshops at GTC 2022 are just $99 when you register by August 29 (standard price $500).

E-commerce sales have skyrocketed as more people shop remotely, spurred by the pandemic. But this surge has also led fraudsters to use the opportunity to scam retailers and customers, according to David Sutton, director of analytical technology at fintech company Featurespace. The company, headquartered in the U.K., has developed AI-powered technology to increase the speed Read article >

The post Fintech Company Blocks Fraud Attacks for Financial Institutions With AI and NVIDIA GPUs appeared first on NVIDIA Blog.

Some weeks, GFN Thursday reveals new or unique features. Other weeks, it’s a cool reward. And every week, it offers its members new games. This week, it’s all of the above. First, Saints Row marches into GeForce NOW. Be your own boss in the new reboot of the classic open-world criminal adventure series, now available Read article >

The post GFN Thursday Adds ‘Saints Row,’ ‘Genshin Impact’ on Mobile With Touch Controls appeared first on NVIDIA Blog.

Join NVIDIA at the 16th annual ACM Conference on Recommender Systems (RecSys 2022) to see how recommender systems are driving our future.

Join NVIDIA at the 16th annual ACM Conference on Recommender Systems (RecSys 2022) to see how recommender systems are driving our future.

Join NVIDIA at the 16th annual ACM Conference on Recommender Systems (RecSys 2022) to see how recommender systems are driving our future.

Quarterly revenue of $6.70 billion, up 3% from a year agoData Center revenue of $3.81 billion, up 61% from a year agoQuarterly return to shareholders of $3.44 billion SANTA CLARA, Calif., Aug. …

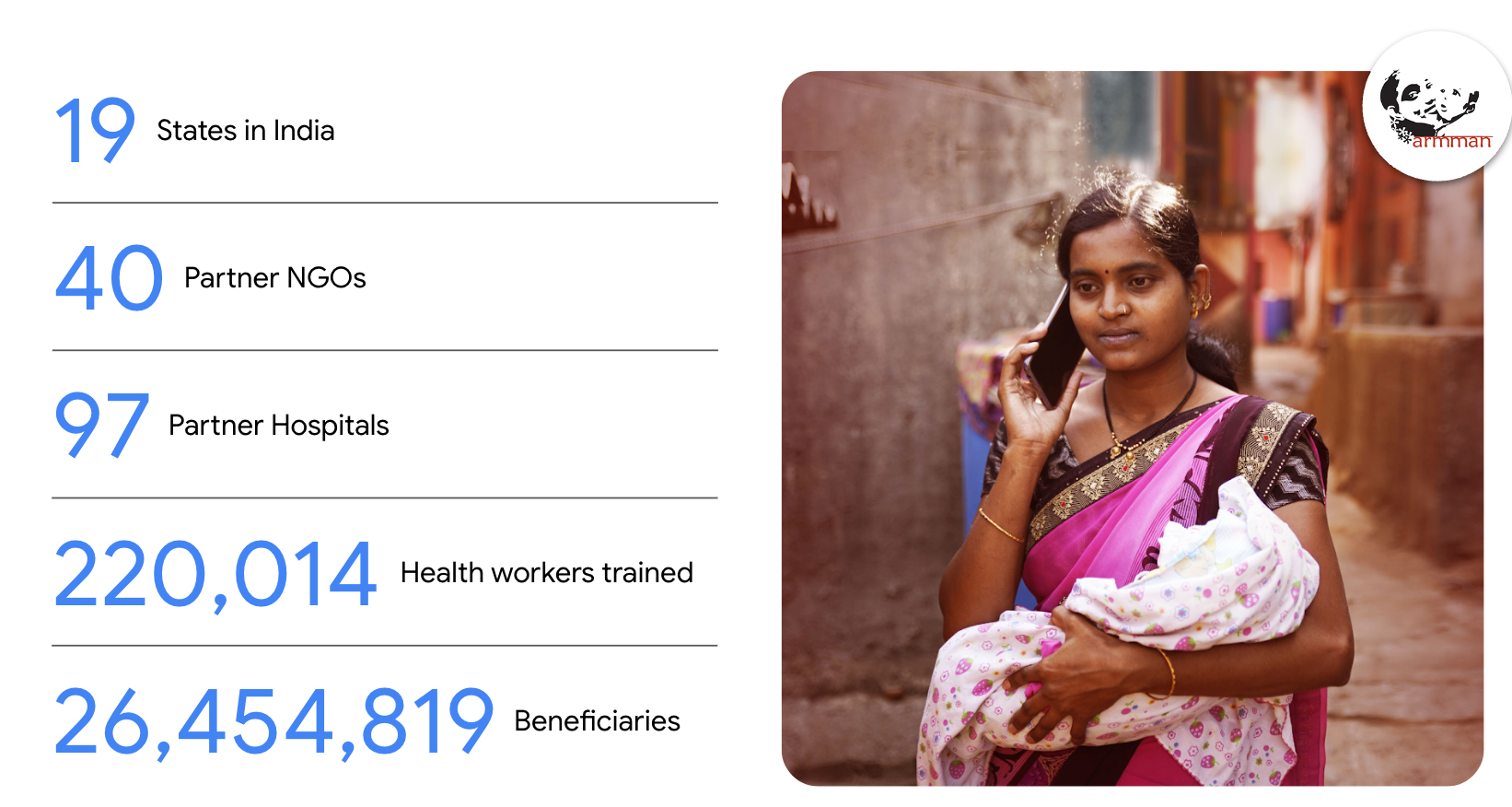

The widespread availability of mobile phones has enabled non-profits to deliver critical health information to their beneficiaries in a timely manner. While advanced applications on smartphones allow for richer multimedia content and two-way communication between beneficiaries and health coaches, simpler text and voice messaging services can be effective in disseminating information to large communities, particularly those that are underserved with limited access to information and smartphones. ARMMAN1, one non-profit doing just this, is based in India with the mission of improving maternal and child health outcomes in underserved communities.

|

| Overview of ARMMAN |

One of the programs run by them is mMitra, which employs automated voice messaging to deliver timely preventive care information to expecting and new mothers during pregnancy and until one year after birth. These messages are tailored according to the gestational age of the beneficiary. Regular listenership to these messages has been shown to have a high correlation with improved behavioral and health outcomes, such as a 17% increase in infants with tripled birth weight at end of year and a 36% increase in women knowing the importance of taking iron tablets.

However, a key challenge ARMMAN faced was that about 40% of women gradually stopped engaging with the program. While it’s possible to mitigate this with live service calls to women to explain the advantage of listening to the messages, it is infeasible to call all the low listeners in the program because of limited support staff — this highlights the importance of effectively prioritizing who receives such service calls.

In “Field Study in Deploying Restless Multi-Armed Bandits: Assisting Non-Profits in Improving Maternal and Child Health”, published in AAAI 2022, we describe an ML-based solution that uses historical data from the NGO to predict which beneficiaries will benefit most from service calls. We address the challenges that come with a large-scale real world deployment of such a system and show the usefulness of deploying this model in a real study involving over 23,000 participants. The model showed an increase in listenership of 30% compared to the current standard of care group.

Background

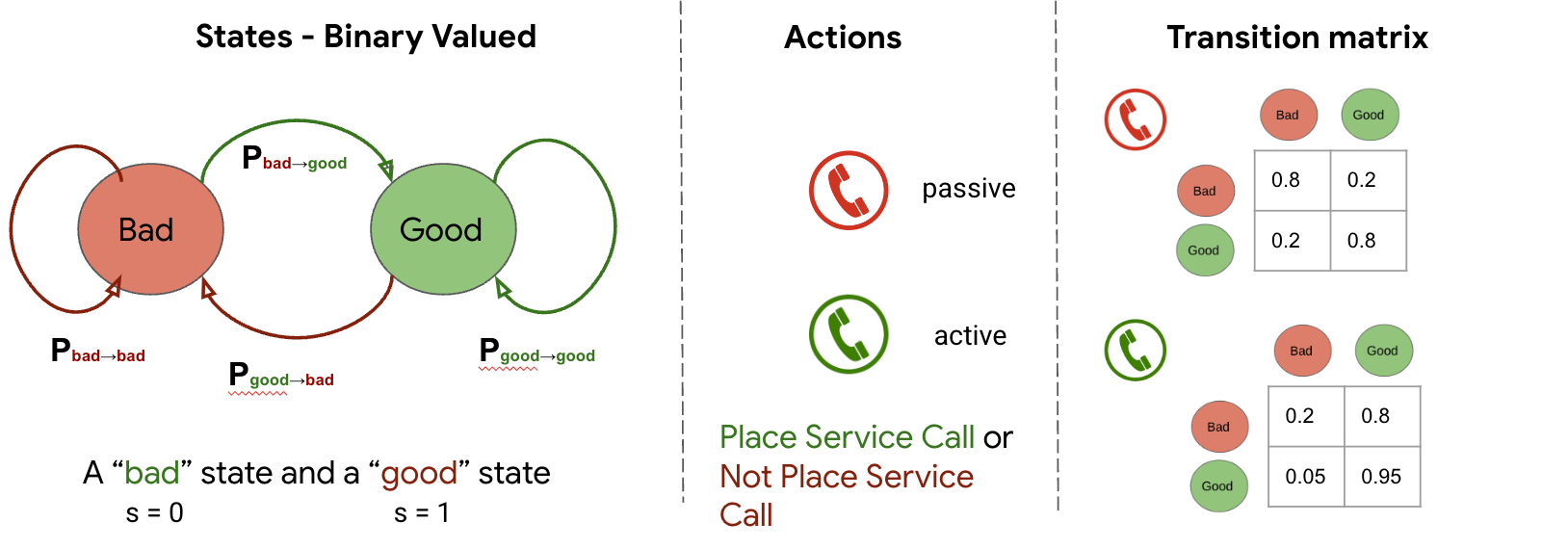

We model this resource optimization problem using restless multi-armed bandits (RMABs), which have been well studied for application to such problems in a myriad of domains, including healthcare. An RMAB consists of n arms where each arm (representing a beneficiary) is associated with a two-state Markov decision process (MDP). Each MDP is modeled as a two-state (good or bad state, where the good state corresponds to high listenership in the previous week), two-action (corresponding to whether the beneficiary was chosen to receive a service call or not) problem. Further, each MDP has an associated reward function (i.e., the reward accumulated at a given state and action) and a transition function indicating the probability of moving from one state to the next under a given action, under the Markov condition that the next state depends only on the previous state and the action taken on that arm in that time step. The term restless indicates that all arms can change state irrespective of the action.

|

| State of a beneficiary may transition from good (high engagement) to bad (low engagement) with example passive and active transition probabilities shown in the transition matrix. |

Model Development

Finally, the RMAB problem is modeled such that at any time step, given n total arms, which k arms should be acted on (i.e., chosen to receive a service call), to maximize reward (engagement with the program).

The probability of transitioning from one state to another with (active probability) or without (passive probability) receiving a service call are therefore the underlying model parameters that are critical to solving the above optimization. To estimate these parameters, we use the demographic data of the beneficiaries collected at time of enrolment by the NGO, such as age, income, education, number of children, etc., as well as past listenership data, all in-line with the NGO’s data privacy standards (more below).

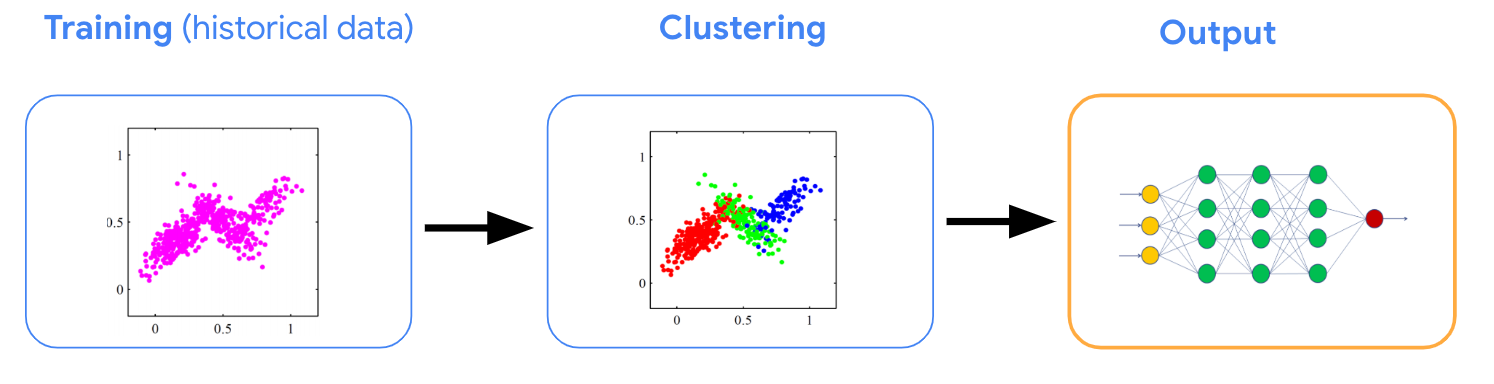

However, the limited volume of service calls limits the data corresponding to receiving a service call. To mitigate this, we use clustering techniques to learn from the collective observations of beneficiaries within a cluster and enable overcoming the challenge of limited samples per individual beneficiary.

In particular, we perform clustering on listenership behaviors, and then compute a mapping from the demographic features to each cluster.

|

| Clustering on past listenership data reveals clusters with beneficiaries that behave similarly. We then infer a mapping from demographic features to clusters. |

This mapping is useful because when a new beneficiary is enrolled, we only have access to their demographic information and have no knowledge of their listenership patterns, since they haven’t had a chance to listen yet. Using the mapping, we can infer transition probabilities for any new beneficiary that enrolls into the system.

We used several qualitative and quantitative metrics to infer the optimal set of of clusters and explored different combinations of training data (demographic features only, features plus passive probabilities, features plus all probabilities, passive probabilities only) to achieve the most meaningful clusters, that are representative of the underlying data distribution and have a low variance in individual cluster sizes.

Clustering has the added advantage of reducing computational cost for resource-limited NGOs, as the optimization needs to be solved at a cluster level rather than an individual level. Finally, solving RMAB’s is known to be P-space hard, so we choose to solve the optimization using the popular Whittle index approach, which ultimately provides a ranking of beneficiaries based on their likely benefit of receiving a service call.

Results

We evaluated the model in a real world study consisting of approximately 23,000 beneficiaries who were divided into three groups: the current standard of care (CSOC) group, the “round robin” (RR) group, and the RMAB group. The beneficiaries in the CSOC group follow the original standard of care, where there are no NGO initiated service calls. The RR group represents the scenario where the NGO often conducts service calls using some systematic set order — the idea here is to have an easily executable policy that services enough of a cross-section of beneficiaries and can be scaled up or down per week based on available resources (this is the approach used by the NGO in this particular case, but the approach may vary for different NGOs). The RMAB group receives service calls as predicted by the RMAB model. All the beneficiaries across the three groups continue to receive the automated voice messages independent of the service calls.

At the end of seven weeks, RMAB-based service calls resulted in the highest (and statistically significant) reduction in cumulative engagement drops (32%) compared to the CSOC group.

|

| The plot shows cumulative engagement drops prevented compared to the control group. |

| RMAB vs CSOC | RR vs CSOC | RMAB vs RR | |

| % reduction in cumulative engagement drops | 32.0% | 5.2% | 28.3% |

| p-value | 0.044 | 0.740 | 0.098 |

Ethical Considerations

An ethics board at the NGO reviewed the study. We took significant measures to ensure participant consent is understood and recorded in a language of the community’s choice at each stage of the program. Data stewardship resides in the hands of the NGO, and only the NGO is allowed to share data. The code will soon be available publicly. The pipeline only uses anonymized data and no personally identifiable information (PII) is made available to the models. Sensitive data, such as caste, religion, etc., are not collected by ARMMAN for mMitra. Therefore, in pursuit of ensuring fairness of the model, we worked with public health and field experts to ensure other indicators of socioeconomic status were measured and adequately evaluated as shown below.

|

| Distribution of highest education received (top) and monthly family income in Indian Rupees (bottom) across a cohort that received service calls compared to the whole population. |

The proportion of beneficiaries that received a live service call within each income bracket reasonably matches the proportion in the overall population. However, differences are observed in lower income categories, where the RMAB model favors beneficiaries with lower income and beneficiaries with no formal education. Lastly, domain experts at ARMMAN have been deeply involved in the development and testing of this system and have provided continuous input and oversight in data interpretation, data consumption, and model design.

Conclusions

After thorough testing, the NGO has currently deployed this system for scheduling of service calls on a weekly basis. We are hopeful that this will pave the way for more deployments of ML algorithms for social impact in partnerships with non-profits in service of populations that have so far benefited less from ML. This work was also featured in Google for India 2021.

Acknowledgements

This work is part of our AI for Social Good efforts and was led by Google Research, India. Thanks to all our collaborators at ARMMAN, Google Research India, Google.org, and University Relations: Aparna Hegde, Neha Madhiwalla, Suresh Chaudhary, Aditya Mate, Lovish Madaan, Shresth Verma, Gargi Singh, Divy Thakkar.

1ARMMAN runs multiple programs to provide preventive care information to women through pregnancy and infancy enabling them to seek care, as well as programs to train and support health workers for timely detection and management of high-risk conditions. ↩

Learn about the latest RTX and neural rendering technologies and how they are accelerating game development.

Learn about the latest RTX and neural rendering technologies and how they are accelerating game development.

Learn about the latest RTX and neural rendering technologies and how they are accelerating game development.