|

submitted by /u/Ordinary_Craft [visit reddit] [comments] |

|

submitted by /u/Ordinary_Craft [visit reddit] [comments] |

For the past few days, I was trying to run this python code and I can’t figure out how to run it.

tkipf/gae: Implementation of Graph Auto-Encoders in TensorFlow (github.com)

My steps were the below.

step1: I created all 10 .py files (via atom) and also downloaded the data folder.

step2: I run the cmd typed python setup.py install

step 3: I run the cmd from gae folder and typed python train.py

I had multiple tensoflow1.X errors since I used the updated version.

I am sure I am doing something terribly wrong due to my lack of experience.

How would you run this code? If it’s not too complicated, could you show me with a short video or describe it? Thank you in advance

submitted by /u/xlimitxfb

[visit reddit] [comments]

Meet the electric vehicle that’s quick-witted and fully outfitted. Last week, NIO began deliveries of its highly anticipated ET7 fully electric vehicle, in Hefei, China. The full-size luxury sedan is the first production vehicle built on the NIO Adam supercomputer, powered by four NVIDIA DRIVE Orin systems-on-a-chip (SoCs). The production launch of its flagship sedan Read article >

The post Fast and Luxurious: The Intelligent NIO ET7 EV Built on NVIDIA DRIVE Orin Arrives appeared first on NVIDIA Blog.

Reinforcement learning (RL) can be used to train a policy to perform a task via trial and error, but a major challenge in RL is learning policies from scratch in environments with hard exploration challenges. For example, consider the setting depicted in the door-binary-v0 environment from the adroit manipulation suite, where an RL agent must control a hand in 3D space to open a door placed in front of it.

|

| An RL agent must control a hand in 3D space to open a door placed in front of it. The agent receives a reward signal only when the door is completely open. |

Since the agent receives no intermediary rewards, it cannot measure how close it is to completing the task, and so must explore the space randomly until it eventually opens the door. Given how long the task takes and the precise control required, this is extremely unlikely.

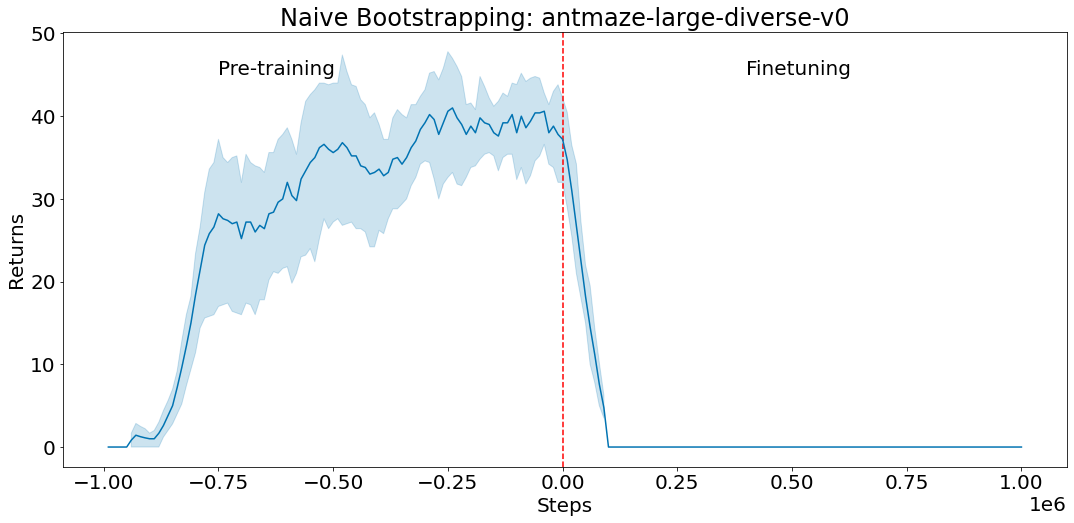

For tasks like this, we can avoid exploring the state space randomly by using prior information. This prior information helps the agent understand which states of the environment are good, and should be further explored. We could use offline data (i.e., data collected by human demonstrators, scripted policies, or other RL agents) to train a policy, then use it to initialize a new RL policy. In the case where we use neural networks to represent the policies, this would involve copying the pre-trained policy’s neural network over to the new RL policy. This procedure makes the new RL policy behave like the pre-trained policy. However, naïvely initializing a new RL policy like this often works poorly, especially for value-based RL methods, as shown below.

|

| A policy is pre-trained on the antmaze-large-diverse-v0 D4RL environment with offline data (negative steps correspond to pre-training). We then use the policy to initialize actor-critic fine-tuning (positive steps starting from step 0) with this pre-trained policy as the initial actor. The critic is initialized randomly. The actor’s performance immediately drops and does not recover, as the untrained critic provides a poor learning signal and causes the good initial policy to be forgotten. |

With the above in mind, in “Jump-Start Reinforcement Learning” (JSRL), we introduce a meta-algorithm that can use a pre-existing policy of any form to initialize any type of RL algorithm. JSRL uses two policies to learn tasks: a guide-policy, and an exploration-policy. The exploration-policy is an RL policy that is trained online with new experience that the agent collects from the environment, and the guide-policy is a pre-existing policy of any form that is not updated during online training. In this work, we focus on scenarios where the guide-policy is learned from demonstrations, but many other kinds of guide-policies can be used. JSRL creates a learning curriculum by rolling in the guide-policy, which is then followed by the self-improving exploration-policy, resulting in performance that compares to or improves on competitive IL+RL methods.

The JSRL Approach

The guide-policy can take any form: it could be a scripted policy, a policy trained with RL, or even a live human demonstrator. The only requirements are that the guide-policy is reasonable (i.e., better than random exploration), and it can select actions based on observations of the environment. Ideally, the guide-policy can reach poor or medium performance in the environment, but cannot further improve itself with additional fine-tuning. JSRL then allows us to leverage the progress of this guide-policy to take the performance even higher.

At the beginning of training, we roll out the guide-policy for a fixed number of steps so that the agent is closer to goal states. The exploration-policy then takes over and continues acting in the environment to reach these goals. As the performance of the exploration-policy improves, we gradually reduce the number of steps that the guide-policy takes, until the exploration-policy takes over completely. This process creates a curriculum of starting states for the exploration-policy such that in each curriculum stage, it only needs to learn to reach the initial states of prior curriculum stages.

Comparison to IL+RL Baselines

Since JSRL can use a prior policy to initialize RL, a natural comparison would be to imitation and reinforcement learning (IL+RL) methods that train on offline datasets, then fine-tune the pre-trained policies with new online experience. We show how JSRL compares to competitive IL+RL methods on the D4RL benchmark tasks. These tasks include simulated robotic control environments, along with datasets of offline data from human demonstrators, planners, and other learned policies. Out of the D4RL tasks, we focus on the difficult ant maze and adroit dexterous manipulation environments.

|

| Example ant maze (left) and adroit dexterous manipulation (right) environments. |

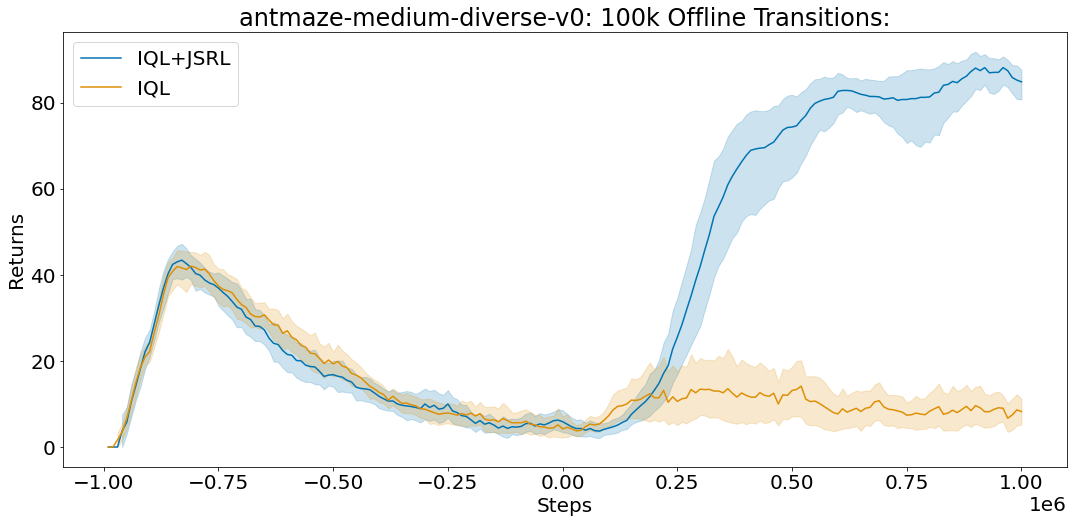

For each experiment, we train on an offline dataset and then run online fine-tuning. We compare against algorithms designed specifically for each setting, which include AWAC, IQL, CQL, and behavioral cloning. While JSRL can be used in combination with any initial guide-policy or fine-tuning algorithm, we use our strongest baseline, IQL, as a pre-trained guide and for fine-tuning. The full D4RL dataset includes one million offline transitions for each ant maze task. Each transition is a sequence of format (S, A, R, S’) which specifies what state the agent started in (S), the action the agent took (A), the reward the agent received (R), and the state the agent ended up in (S’) after taking action A. We find that JSRL performs well with as few as ten thousand offline transitions.

|

|

| Average score (max=100) on the antmaze-medium-diverse-v0 environment from the D4RL benchmark suite. JSRL can improve even with limited access to offline transitions. |

Vision-Based Robotic Tasks

Utilizing offline data is especially challenging in complex tasks such as vision-based robotic manipulation due to the curse of dimensionality. The high dimensionality of both the continuous-control action space and the pixel-based state space present scaling challenges for IL+RL methods in terms of the amount of data required to learn good policies. To study how JSRL scales to such settings, we focus on two difficult simulated robotic manipulation tasks: indiscriminate grasping (i.e., lifting any object) and instance grasping (i.e., lifting a specific target object).

We compare JSRL against methods that are able to scale to complex vision-based robotics settings, such as QT-Opt and AW-Opt. Each method has access to the same offline dataset of successful demonstrations and is allowed to run online fine-tuning for up to 100,000 steps.

In these experiments, we use behavioral cloning as a guide-policy and combine JSRL with QT-Opt for fine-tuning. The combination of QT-Opt+JSRL improves faster than all other methods while achieving the highest success rate.

|

|

| Mean grasping success for indiscriminate and instance grasping environments using 2k successful demonstrations. |

Conclusion

We proposed JSRL, a method for leveraging a prior policy of any form to improve exploration for initializing RL tasks. Our algorithm creates a learning curriculum by rolling in a pre-existing guide-policy, which is then followed by the self-improving exploration-policy. The job of the exploration-policy is greatly simplified since it starts exploring from states closer to the goal. As the exploration-policy improves, the effect of the guide-policy diminishes, leading to a fully capable RL policy. In the future, we plan to apply JSRL to problems such as Sim2Real, and explore how we can leverage multiple guide-policies to train RL agents.

Acknowledgements

This work would not have been possible without Ikechukwu Uchendu, Ted Xiao, Yao Lu, Banghua Zhu, Mengyuan Yan, Joséphine Simon, Matthew Bennice, Chuyuan Fu, Cong Ma, Jiantao Jiao, Sergey Levine, and Karol Hausman. Special thanks to Tom Small for creating the animations for this post.

In its debut in the industry MLPerf benchmarks, NVIDIA Orin, a low-power system-on-chip based on the NVIDIA Ampere architecture, set new records in AI inference, raising the bar in per-accelerator performance at the edge. Overall, NVIDIA with its partners continued to show the highest performance and broadest ecosystem for running all machine-learning workloads and scenarios Read article >

The post NVIDIA Orin Leaps Ahead in Edge AI, Boosting Leadership in MLPerf Tests appeared first on NVIDIA Blog.

Edge computing is a new paradigm shift for organizations, this article looks at the cost factors for rolling out these solutions.

Edge computing is a new paradigm shift for organizations, this article looks at the cost factors for rolling out these solutions.

The growth in Internet of Things (IoT) and enterprise adoption of AI has led to a renewed focus on edge computing. Organizations are looking to capitalize on the data these IoT devices produce at the edge and solve challenges that were previously impossible with centralized data centers or cloud computing.

When combined edge computing and AI, also known as edge AI, is used for real-time inference that powers digital transformation of business processes. Edge AI is a core technology for intelligent spaces that drive efficiency, automate workflows, reduce cost, and improve overall customer experience.

Today, organizations in all industries are in process of rolling out edge AI solutions—on factory floors, in retail stores, on oil rigs, and within autonomous machines.

As with any new IT initiative, getting the full benefits of edge computing requires careful planning to build a platform that will meet the needs of today and any expansion in the future.

In a broad sense, edge computing is used to refer to anything outside of the data center or cloud. More specifically, it is the practice of moving compute power physically closer to where data is generated, usually an IoT device or sensor.

Compared to cloud computing, edge computing delivers lower latency, reduced bandwidth requirements, and improved data privacy. There are different types of edge computing, often broken down based on their use case, networking requirements, or location. Content delivery networks, factory inspections, frictionless stores, and robotics are all considered examples of edge computing.

Despite the differences, the benefits remain the same: low latency, reduced bandwidth requirements, and data privacy.

The cost of edge computing varies wildly depending on scale, data, location, and expertise. Overall costs can increase or decrease depending on the infrastructure currently in place at edge environments. Figure 1 shows a few key factors.

One of the first considerations for deploying AI applications to the edge are the systems and sensors needed to support the use case. Generally, there is already some sort of infrastructure in place that must be accounted for before adding new hardware or software. The most common components of edge infrastructure are the sensors, compute systems, and network.

Both the number and type of sensors affect the cost to an organization. Many organizations have already made investments in IoT sensors, reducing the overall investment required for an edge computing roll-out. When adding sensors, single-use scanners can add up quickly.

Cameras are some of the most versatile edge devices that give you the ability to run multiple applications simultaneously. Other sensors include microphones, barcode scanners, or RFID-enabled smart tags.

Compute at the edge can run on a simple embedded device that costs hundreds of dollars up. Or it can run to a half or even full rack of servers for hundreds of thousands of dollars. Compute systems are sized based on the amount of data that is being collected and processed, the complexity of one or more AI models, and the number of inferences being run at any given time.

When building out compute at the edge, it is important to consider both existing and future edge use cases that will run in the environment. Some applications can run on CPU-only systems, while others require or can greatly benefit from GPUs.

Embedded devices or even single GPU systems are cheaper up front. However, if your plan is to run multiple AI applications, a single system with multiple GPUs that can run more than one workload provides cost savings. It will also generally be more efficient in space-constrained areas.

Most enterprise edge use cases are run on-premises, either hardwired to a network or Wi-Fi. That makes the networking component essentially free. Remote devices that rely on cellular networks do incur cost based on the data streamed, which can get especially expensive if the data is video.

AI-on-5G is a key topic for consideration for many organizations, especially those that are looking at use cases that rely on guaranteed performance and high speed wireless. These solutions are still in the early stages of development, which makes cost difficult to determine.

Another networking consideration with edge computing is looking at what and how much data will be sent back to a data center or cloud. Most organizations use data from the edge to validate and retrain their AI models in a centralized location. Building a strategy around data that takes into consideration network and storage is critical to ensuring that the overall cost to maintain edge applications is managed.

The number of AI applications in production is expected to grow to over 60% in the next few years. It’s not if an organization will deploy an AI application but when. Organizations either build or buy applications or use a hybrid approach.

Building a data science team from scratch can be a large undertaking, especially due to the high demand and limited supply of qualified candidates in most locations. The average salary of a data scientist starts between $100-150K depending on skill level. Even organizations that have AI developers in-house often use a combination of build and buy strategy, saving their internal expertise for those critical applications that help them differentiate.

For organizations that do not already have data scientists and AI developers on staff, buying an AI application is the preferred method.

Additional service contracts can be purchased for ongoing management and upgrades of these applications, depending on customer need.

Edge computing presents unique challenges around management. These environments are highly distributed, deployed in remote locations without trained IT staff, and often lack the physical security expected from a data center.

Dedicated edge AI management solutions are most often priced based on usage, with systems or GPUs under management as the determining factor. These solutions have the benefit of key features that are tailored for edge deployments as well as the ability to scale as you grow in terms of cost. Some examples of these solutions include NVIDIA Fleet Command, Azure IoT, and AWS IoT.

Another management option is to extend traditional data center management solutions to the edge. Both VMware Tanzu and RedHat OpenShift are commonly found in many data center deployments, which means that IT teams have experience with them. Extending these solutions to the edge may require an increase in licensing cost, depending on the contract the company has.

Other costs that should be considered are the time it takes to make these solutions compatible with edge deployments, as well as the ongoing management of these environments.

Some organizations look to outsource the management of their edge computing environments to system integrators or other management partners. These engagements can vary greatly, and include development of AI models, provisioning and management of infrastructure, as well as roll-out and updates of AI applications.

This option is typically considered when there is a limited amount of in-house expertise building and managing an edge AI solution. Depending on the scope, scale, and duration, these engagements cost anywhere from hundreds of thousands to millions of dollars.

Many organizations have made large investments in cloud computing. Now, with the rise of edge computing, they are looking for the cost savings. When comparing edge to cloud, edge AI is typically a new investment, so there is an upfront cost to getting started. When this cost is compared to the cost of moving streaming data and storing it in the cloud, there may be some reduced cost.

Most often, the move to edge computing is due to a use case that requires real-time responses or deployment in remote locations that have limited bandwidth. As an example, workloads like predictive maintenance, safety alerts, or autonomous machines would not be possible from a cloud environment due to the latency requirements.

When this is the case, it is not so important that the cost of edge computing is reduced; rather that the AI algorithm brings huge business value to the organization.

Edge computing is a paradigm shift for most organizations. Like other transformational shifts, the process can be complex and expensive if not carefully thought through. However, when combined with AI, your organization can see huge benefits. From improved efficiency to reduced operating costs to improved customer intelligence and experience, the economic benefits that edge AI brings can be measured in millions of dollars.

AI enables a frictionless shopping experience where customers can walk into a store, select the items they want to purchase, and leave with the items automatically charged to their account.

Retailers solve labor shortages and supply chain issues with AI: Over the last year retailers have experienced incredible challenges with the labor force being reduced by 6.2%, and shutdowns wreaking havoc on global supply chains. Using AI solutions, stores and restaurants have been able to improve automation, forecasting, and logistics to provide even better experiences to their customers.

AI inspection reduces total manufacturing cost: In any manufacturing line, manual inspection takes a significant amount of time and requires highly skilled workers to keep quality high. When accurate and quick defect detection is required, AI can be a perfect answer to increase overall equipment effectiveness (OEE) and boost production on a line. One manufacturer was able to reduce inspection costs from 30% of total manufacturing cost by using AI optical inspection in the factory.

Smart hospitals optimize workflows and improve clinician experiences: The delivery of healthcare services is becoming more challenging, with providers, staff, and IT all having to do more with less resources. AI helps augment the work of these providers, giving them valuable and timely insights that not only reduce their burden but also save lives. Using vision AI to monitor patients and automate workflows, a 100-bed facility can save up to $11m annually.

Given the value of edge AI, the topic of how to roll out a successful edge strategy is certainly be a key focus for organizations and IT departments. As a leader in AI, NVIDIA has worked with customers and partners to create edge computing solutions that deliver powerful, distributed compute; secure remote management; and compatibility with industry-leading technologies.

To understand if edge computing is the right solution for you, download the Considerations for Deploying AI at the Edge whitepaper.

Today NVIDIA announced the NGC catalog now provides a one-click deploy feature to run Jupyter notebooks on Google Cloud’s Vertex AI Workbench.

Today NVIDIA announced the NGC catalog now provides a one-click deploy feature to run Jupyter notebooks on Google Cloud’s Vertex AI Workbench.

Developing AI with your favorite tool, Jupyter Notebooks, just got easier due to a partnership between NVIDIA and Google Cloud.

The NVIDIA NGC catalog offers GPU-optimized frameworks, SDKs, pretrained AI models, and example notebooks to help you build AI solutions faster. To further speed up your development workflow, a simplified deployment of this software with the NGC catalog’s new one-click deploy feature was released today.

Simply go to the software page in the NGC catalog and click on “Deploy” to get started. Under the hood, this feature: launches the JupyterLab instance on Google Cloud Vertex AI Workbench with optimal configuration; preloads the software dependencies; and downloads the NGC notebook in one go. You can also change the configuration before launching the instance.

You can deploy frameworks like TensorFlow and PyTorch from the NGC catalog to Google Cloud Vertex AI Workbench with this feature. This will create the instance, load the framework, and create a blank notebook to start your development.

And in case you are deploying a pretrained model from the NGC catalog, it will load the model, the framework associated with that model, other dependencies, and a blank notebook on a preconfigured instance.

Now, you can start executing your code right away without needing any IT expertise to configure the development environment.

To quickly get started, we offer Jupyter Notebook examples for hundreds of use-cases including:

And this is just the beginning. We will keep adding more examples across use cases and industries to help you accelerate your AI development.

NVIDIA offers an enterprise support option with the purchase of NVIDIA AI Enterprise licenses that provide a single point of contact for AI developers and researchers. The benefits include hybrid-cloud platform support, access to NVIDIA AI experts and training resources, and long-term support for designated software branches. Learn more about NVIDIA AI Enterprise Support.

Learn about the characteristics of various accelerated workload categories and the system features needed to run them.

Learn about the characteristics of various accelerated workload categories and the system features needed to run them.

Deep learning has come to mean the most common implementation of a neural network for performing many AI tasks. Data scientists use software frameworks such as TensorFlow and PyTorch to develop and run DL algorithms.

By this point, there has been a lot written about deep learning, and you can find more detailed information from many sources. For a good high-level summary, see What’s the Difference Between Artificial Intelligence, Machine Learning and Deep Learning?

A popular way to get started with deep learning is to run these frameworks in the cloud. However, as enterprises start to grow and mature their AI expertise, they look for ways to run these frameworks in their own data centers, to avoid the costs and other challenges of cloud-based AI.

In this post, I discuss how to choose an enterprise server for deep learning training. I review specific computational requirements of this unique workload, and then discuss how to address these needs with the best choice for component configuration.

Deep learning training is often designed as a data processing pipeline. Raw input data must first be prepared in terms of data format, size, and other factors.

Data is also often preprocessed so that the same input can be presented in different ways to the model, depending on what the data scientist has determined will provide a more robust training set. For example, images can be rotated by a random amount, so that the model learns to recognize objects regardless of orientation. The prepared data is then fed into the DL algorithm.

With this understanding of how DL training works, here are the specific computational needs for performing this task in the quickest and most efficient way.

At the heart of deep learning is the GPU. The process of calculating the values for each layer of a network is ultimately a huge set of matrix multiplications. The data for each layer can usually be worked on in parallel, with coordination steps between layers.

GPUs are designed to perform matrix multiplication in a massively parallel manner, and have proven to be ideal for achieving tremendous speed-ups for deep learning.

For training, size of model is the driving factor, and so GPUs with larger and faster memory, such as the NVIDIA A100 Tensor Core GPU, are able to crunch through batches of training data more quickly.

The data preparation and preprocessing computations required for DL training are usually performed on the CPU, although recent innovations have enabled more and more of this to be performed on GPUs.

It is critical to use a high-performance CPU to sustain these operations at a fast enough rate so that the GPU is not starved by waiting for data. The CPU should be enterprise-class, such as from the Intel Xeon Scalable processor family or AMD EPYC line, and the ratio of CPU cores to GPUs should be large enough to keep the pipeline running.

Especially for today’s biggest models, DL training works only when there is an extremely large amount of input data to train on. This data is retrieved from storage in batches and then worked on by the CPU while in system memory before being fed to the GPU.

To keep this process flowing at a sustained rate, the system memory should be large enough so the rate of CPU processing can match the rate at which the data is processed by the GPU. This can be expressed in terms of the ratio of system memory to GPU memory (across all GPUs in the server).

Different models and algorithms require a different ratio, but it is better to have a higher ratio, so that the GPU is never waiting for data.

As DL models have gotten larger, techniques have been developed to perform training with multiple GPUs working together. When more than one GPU is installed in a server, they can communicate with each other through the PCIe bus, although more specialized technologies such as NVLink and NVSwitch can be used for the highest performance.

Multi-GPU training can also be extended to work across multiple servers too. In this case, the network adapter becomes a critical component of a server’s design. High-bandwidth Ethernet or InfiniBand adapters are necessary to minimize bottlenecks due to data transfer when executing multi-node DL training.

DL frameworks make use of libraries such as NCCL to perform the coordination between GPUs in an optimum and performant manner. Technologies such as GPUDirect RDMA enable data to be transferred from the network directly to the GPU without having to go through the CPU, thus eliminating this as a source of latency.

Ideally, there should be one network adapter for every one or two GPUs in the system, to minimize contention when data must be transferred.

DL training data typically resides on external storage arrays. NVMe drives on the server can greatly accelerate the training process by providing a means to cache data.

DL I/O patterns typically consist of multiple iterations of reading the training data. The first pass, or epoch, of training reads the data that is used to start training the model. If adequate local caching is provided on the node, subsequent passes through the data can avoid rereading the data from remote storage.

To avoid contention when pulling data from remote storage, there should be one NVMe drive per CPU.

With the complex interplay between CPU, GPU, and networking, it should be clear that having a connectivity design that reduces any potential bottlenecks in the DL training pipeline is crucial to achieving the best performance.

Most enterprise servers today use PCIe as the means of communication between components. The primary traffic on the PCIe bus occurs on the following pathways:

Servers to be used for deep learning should have a balanced PCIe topology, with GPUs spread evenly across CPU sockets and PCIe root ports. In all cases, the number of PCIe lanes to each GPU should be the maximum number supported.

If there are multiple GPUs and the number of PCIe lanes from the CPU are not enough to accommodate them all, then a PCIe switch might be required. In this case, the number of PCIe switch layers should be limited to one or two, to minimize PCIe latency.

Similarly, network adapters and NVMe drives should be under the same PCIe switch or PCIe root complex as the GPUs. In server configurations that use PCIe switches, these devices should be located under the same PCIe switch as the GPUs for best performance.

It’s complex to design a server optimized for DL training. NVIDIA has published guidelines on configuring servers for various types of accelerated workloads, based on many years of experience with these workloads and working with developers to optimize code.

To make it easier for you to get started, NVIDIA developed the NVIDIA-Certified Systems program. System vendor partners have configured and tested numerous server models in various form factors with specific NVIDIA GPUs and network adapters to validate the optimal design for the best performance.

The validation also covers other important features for production deployment, such as manageability, security, and scalability. Systems are certified in a range of categories targeted to different workload types. The Qualified System Catalog has a list of NVIDIA-Certified Systems from NVIDIA partners. Servers in the Data Center category have been validated to provide the best performance for DL training.

Along with the right hardware, enterprise customers want to choose a supported software solution for AI workloads. NVIDIA AI Enterprise is an end-to-end, cloud-native suite of AI and data analytics software. It’s optimized so every organization can be good at AI, certified to deploy anywhere from the enterprise data center to the public cloud. AI Enterprise includes global enterprise support so that AI projects stay on track.

When you run NVIDIA AI Enterprise on optimally configured servers, you can be assured that you are getting the best out of your hardware and software investments.

In this post, I showed you how to choose an enterprise server for deep learning training, with specific computational requirements. Hopefully, you’ve learned how to address these needs with the best choices for component configuration.

For more information, see the following resources:

I am new to TensorFlow so I apologize for any easy things I get wrong. I am trying to install the TensorFlow object detection API for TensorFlow 2 onto an aarch64 machine. There is no package for it so I am going to try and install it from source. I’ve never installed something from source code before so I don’t know what source code looks like or where to find it. Could someone please tell me where I find the source code for the object detection API. The internet says there should be a .zip file or .tar file on git hub but I can’t seem to find it.

submitted by /u/Apprehensive_Monk_11

[visit reddit] [comments]

|

I’m currently working with images that are 4032×3024 in size but after my computer freezing from 2 epochs and some advice from my colleagues, I have reduced this size considerably. My initial plan was to resize the images to 10% of their original (i.e. 403×302 when converted to integers) but when I tried to train my neural network with these new images, I received an error saying that my image size was incompatible. Is there a way to fix this or do I need to resize my images to a standardized size? My code: Note: I am extremely new to tensorflow so if this code can be improved in any way please let me know Context: I’m working with images of candled eggs (I have a very small sample size as this is brand new research) and have learned everything I know so far from youtube. I’m unaware of the importance of the learning rate value, how the metrics are tracked, or what an activation function is. submitted by /u/Kapooshi |