Virtual consensus in Delos, Balakrishnan et al. (Facebook, Inc.), OSDI’2020

Before we dive into this paper, if you click on the link above and then download and open up the paper pdf you might notice the familiar red/orange splash of USENIX, and appreciate the fully open access. USENIX is a nonprofit organisation committed to making content and research freely available – both conference proceedings and the recorded presentations of their events. Without in-person conferences this year, income is down and events are under threat. If you want to help them, you have options to donate, become a member, or even talk to your organisation about becoming a partner, benefactor, or patron. Every little helps!

Back in 2017 the engineering team at Facebook had a problem. They needed a table store to power core control plane services, which meant strong guarantees on durability, consistency, and availability. They also needed it fast – the goal was to be in production within 6 to 9 months. Ultimately this new system should be able to take advantage of the latest advances in consensus for improved performance, but building a new consensus protocol isn’t feasible on a 6-9 month timeline. The only practical option was to choose an existing consensus implementation and integrate it. Integrating an existing implementation brings problems of its own though:

Laying the system over an existing shared log such as LogDevice would allow us to reach production quickly, but also tie us for perpetuity to the fault-tolerance and performance properties of that consensus implementation.

What Facebook needed, literally, was a plan to throw one away. I.e., a plan that let them get into production quickly with an existing implementation, and then be able to upgrade it later without disturbing system operation (nobody wants to take down the central control plane for maintenance!). This calls for the oldest tool in the box: a level of indirection. In other words, an API-based abstraction over consensus, together with a runtime that supports hot-swapping of the implementations behind that API. The standout candidate as the API for consensus is the shared log.

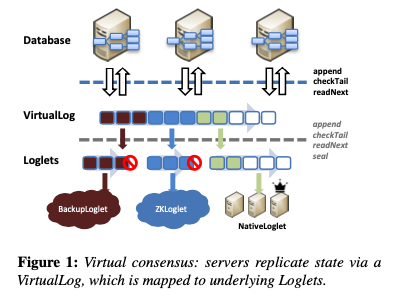

Recently, the shared log has gained traction as an API for consensus in research and industry. Applications can replicate state via this API by appending updates to the shared log, checking its tail, and reading back updates from it. The consensus protocol is hidden behind the shared log API, allowing applications to bind to any implementation at deployment time.
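To make the append / check tail / read back pattern concrete, here is a minimal single-process sketch. The class and method names (InMemorySharedLog, check_tail, ReplicatedCounter) are my own for illustration and are not the Delos API; a real shared log would hide a consensus protocol behind the same three calls.

```python
class InMemorySharedLog:
    """Toy shared log kept in one process (hypothetical, for illustration)."""

    def __init__(self):
        self._entries = []

    def append(self, entry):
        """Add an entry and return the log position it was assigned."""
        self._entries.append(entry)
        return len(self._entries) - 1

    def check_tail(self):
        """Return the first unwritten position."""
        return len(self._entries)

    def read(self, position):
        return self._entries[position]


class ReplicatedCounter:
    """A replica that rebuilds its state by replaying the log up to the tail."""

    def __init__(self, log):
        self._log = log
        self._applied = 0  # next log position this replica will apply
        self.value = 0

    def add(self, delta):
        # Updates are proposed by appending; they take effect on replay.
        self._log.append(("add", delta))

    def sync(self):
        tail = self._log.check_tail()
        while self._applied < tail:
            op, delta = self._log.read(self._applied)
            if op == "add":
                self.value += delta
            self._applied += 1
        return self.value


log = InMemorySharedLog()
a, b = ReplicatedCounter(log), ReplicatedCounter(log)
a.add(5)
b.add(-2)
```

Both replicas converge on the same value after calling sync(), because they apply the same entries in the same order.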

Behind the shared log API is a log abstraction that maps log positions to log entries. If you think of this a bit like mapping memory addresses to data in memory, then another parallel comes to mind: the virtual address space. One logical log, but with different portions of the log address space mapped to different backing shared log instances. This is the core idea in Delos, a VirtualLog that virtualises consensus.

We propose the novel abstraction of a virtual shared log (or VirtualLog). The VirtualLog exposes a conventional shared log API; applications above it are oblivious to its virtualized nature. Under the hood, the VirtualLog chains multiple shared log instances (called Loglets) into a single shared log.
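A rough sketch of the chaining idea, under my own assumptions: the chain is a list of segments, each with a start position in the virtual address space, and a read is routed by finding the segment that covers the requested position. The names here (Loglet, VirtualLog, locate, switch_to) are hypothetical, and the sketch deliberately leaves out sealing and any configuration store.

```python
import bisect


class Loglet:
    """Stand-in for one backing log instance (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.entries = []


class VirtualLog:
    """Maps one logical address space onto a chain of Loglets."""

    def __init__(self, first_loglet):
        self._starts = [0]              # virtual start position of each segment
        self._loglets = [first_loglet]  # parallel list of backing Loglets

    def locate(self, position):
        """Translate a virtual position into (loglet, local offset)."""
        if position < 0:
            raise IndexError(position)
        i = bisect.bisect_right(self._starts, position) - 1
        return self._loglets[i], position - self._starts[i]

    def append(self, entry):
        # Appends always go to the last (active) segment of the chain.
        active = self._loglets[-1]
        active.entries.append(entry)
        return self._starts[-1] + len(active.entries) - 1

    def read(self, position):
        loglet, offset = self.locate(position)
        return loglet.entries[offset]

    def switch_to(self, new_loglet):
        # The new segment begins at the current tail of the virtual log.
        tail = self._starts[-1] + len(self._loglets[-1].entries)
        self._starts.append(tail)
        self._loglets.append(new_loglet)


vlog = VirtualLog(Loglet("zookeeper"))
vlog.append("a")
vlog.append("b")
vlog.switch_to(Loglet("native"))
p = vlog.append("c")
```

The application only ever sees positions 0, 1, 2; which Loglet holds each entry is invisible above the VirtualLog.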

It’s such a powerful idea that I can imagine distributed systems implementers everywhere adopting it from now on. What does the VirtualLog give us?

Firstly, it solves the upgrade problem. We have an existing Loglet writing log entries into the address space. To upgrade, the portion of the address space managed by this Loglet is sealed to prevent further writes, and then the Loglet chain is reconfigured to add the new Loglet at the tail. That’s not just theoretical: Facebook actually did this in production while Delos was processing over 1.8 billion transactions per day. The initial version of Delos went into production after eight months using a ZooKeeper-backed Loglet implementation, and then four months later it was swapped out for a new custom-built NativeLoglet that gave a 10x improvement in end-to-end latency.

Once you have a trusted reconfiguration protocol to move from one Loglet chain configuration to another, you can do lots of interesting things. For example, Loglets might be instances of the same ordering protocol but with different parameters, or they could be entirely different log implementations (e.g. replacing Paxos with Raft), or they could be shims over external storage systems. An existing implementation with its own leader elections, internal reconfiguration, and epochs can sit happily under the Loglet abstraction. But critically, Loglets no longer need to handle all of that complexity:

While the VirtualLog’s reconfiguration mechanism can be used solely for migrating between entirely different Loglet implementations, it can also switch between different instances of the same Loglet protocol with changes to leadership, roles, parameters, and membership. As a result, the Loglet itself can be a statically configured protocol, without any internal support for reconfiguration. In fact, the Loglet does not even have to implement fault-tolerant consensus (i.e. be highly available for appends via leader election), as long as it provides a fault-tolerant seal command, which is theoretically weaker and simpler to implement.

This separation of concerns moves reconfiguration into the VirtualLog control plane, leaving Loglets responsible for the data plane. It makes reconfiguration easier as well as simplifying the implementation of Loglets. If a Loglet fails for appends, it is simply sealed and the VirtualLog switches to a new Loglet.

Sealing

A minimal Loglet needs to provide totally ordered, durable storage via the shared log API. It can do this within a static configuration with no support for role or membership changes and no leader election. What it must provide however, is a highly available seal command that prevents any new appends from being acknowledged.

Once sealed, a Loglet can never be unsealed. So we have a couple of handy properties that make implementing seal much easier than a general purpose highly available append: only one value can ever be proposed, and that value is sticky. In Facebook’s implementation of NativeLoglet, seal simply sets a bit on a quorum of servers.
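Here is a small sketch of why a quorum seal bit is enough, assuming (as described for NativeLoglet later in the post) that an append also needs acknowledgements from a majority of servers. Everything below (LogServer, seal_loglet, append_entry, the up flag used to simulate failures) is a hypothetical illustration, not the Delos code. Since any two majorities intersect, once a majority of servers have the bit set, no append can collect a majority of acknowledgements.

```python
class LogServer:
    """Toy log server with a sticky seal bit (hypothetical)."""

    def __init__(self):
        self.up = True        # flipped to False to simulate a crash
        self.sealed = False
        self.entries = {}

    def seal(self):
        if not self.up:
            raise ConnectionError("server unreachable")
        self.sealed = True    # sticky: never reset

    def store(self, position, entry):
        if not self.up:
            raise ConnectionError("server unreachable")
        if self.sealed:
            return False      # sealed servers refuse new entries
        self.entries[position] = entry
        return True


def majority(servers):
    return len(servers) // 2 + 1


def seal_loglet(servers):
    """Seal succeeds once a majority of servers have set the bit."""
    acks = 0
    for server in servers:
        try:
            server.seal()
            acks += 1
        except ConnectionError:
            pass
    return acks >= majority(servers)


def append_entry(servers, position, entry):
    """An append commits only if a majority of servers store the entry."""
    acks = 0
    for server in servers:
        try:
            if server.store(position, entry):
                acks += 1
        except ConnectionError:
            pass
    return acks >= majority(servers)


servers = [LogServer() for _ in range(5)]
before_seal = append_entry(servers, 0, "x")

servers[0].up = servers[1].up = False      # two servers crash
sealed = seal_loglet(servers)              # the remaining three form a quorum

servers[0].up = servers[1].up = True       # crashed servers return, unsealed
after_seal = append_entry(servers, 1, "y") # only two unsealed acks: rejected
```

Calling seal_loglet again is harmless, which reflects the "only one value, and it is sticky" property.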

In addition to seal, a minimal Loglet is also responsible for its own failure detection, asking the VirtualLog to reconfigure when a failure is detected, and supplying a new Loglet configuration minus the failed servers.

Reconfiguration

Existing consensus systems often store the configuration and epoch information inline in the same totally ordered log as other commands. For VirtualLog, that would mean writing the configuration for the new Loglet inside the log address space of the outgoing Loglet before it is sealed. And that would put more complexity onto Loglets, requiring them to be highly available for appends, not just seal.

The VirtualLog uses a separate MetaStore instead, whose job is to manage the configuration of the VirtualLog over time. Because reconfiguration happens less frequently than regular command sequencing, the consensus protocol for reconfiguration can favour simplicity over out-and-out performance. For Facebook’s Delos, reconfiguration latencies of 10s of ms are ok.

The MetaStore exposes a single versioned register supporting a conditional write: writing requires supplying a new value and an expected existing version. Any client can initiate a reconfiguration, or complete a reconfiguration begun by another client. Reconfiguration has three steps:

- The client seals the current chain by sealing its active segment. A call to checkTail will now return the start of a new active segment. This is an idempotent operation.

- The reconfiguring client writes a new chain to the MetaStore. Because of the conditional write, if multiple clients are racing to reconfigure, at most one can win.

- The reconfiguring client fetches the new chain from the MetaStore (in the case where its write failed in step 2).

Clients trying to write to the old active segment after step 1 will receive a ‘sealed’ error code, and can retry after fetching the latest chain from the MetaStore.
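The three steps, plus the client-side retry, can be sketched as follows. This is a single-process illustration under my own assumptions: the chain is a list of (start position, Loglet) pairs, the MetaStore is an in-memory versioned register, and all names (MetaStore.put, reconfigure, append, SealedError) are hypothetical rather than taken from Delos.

```python
class SealedError(Exception):
    pass


class Loglet:
    def __init__(self, name):
        self.name = name
        self.entries = []
        self.sealed = False

    def append(self, entry):
        if self.sealed:
            raise SealedError(self.name)
        self.entries.append(entry)
        return len(self.entries) - 1

    def seal(self):
        self.sealed = True  # idempotent and irreversible

    def check_tail(self):
        return len(self.entries)


class MetaStore:
    """Single versioned register with a conditional write."""

    def __init__(self, value):
        self._value, self._version = value, 0

    def get(self):
        return self._value, self._version

    def put(self, value, expected_version):
        if expected_version != self._version:
            return False  # another client got there first
        self._value, self._version = value, self._version + 1
        return True


def reconfigure(metastore, new_loglet):
    chain, version = metastore.get()
    start, active = chain[-1]
    active.seal()                                              # step 1
    new_start = start + active.check_tail()
    metastore.put(chain + [(new_start, new_loglet)], version)  # step 2
    return metastore.get()                                     # step 3


def append(metastore, entry, max_retries=3):
    for _ in range(max_retries):
        chain, _version = metastore.get()
        start, active = chain[-1]
        try:
            return start + active.append(entry)
        except SealedError:
            continue  # refetch the chain and try the new active segment
    raise SealedError("active segment still sealed")


store = MetaStore([(0, Loglet("zookeeper"))])
append(store, "a")
append(store, "b")
stale_chain, stale_version = store.get()   # snapshot held by a slow client
reconfigure(store, Loglet("native"))
# The slow client now tries to install its own chain and loses the race.
lost = store.put(stale_chain + [(2, Loglet("other"))], stale_version)
position = append(store, "c")
```

The bounded retry in append is a simplification of mine; the post notes that any client can complete a reconfiguration begun by another, which this sketch does not model.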

The NativeLoglet

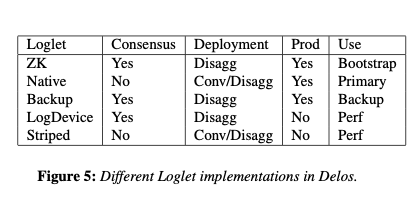

Delos currently supports three disaggregated Loglets acting as shims over external services (ZooKeeper, a LogDevice service, and a Backup service used for cold storage). It also has two of its own Loglet implementations that can be run either converged or disaggregated: the NativeLoglet and the StripedLoglet.

In production, Facebook use the NativeLoglet in converged form. NativeLoglet implements seal by setting a bit on a quorum of servers. It uses a central sequencer to assign positions to commands and forward requests to LogServers. An append is considered globally committed once a majority of LogServers acknowledge it. If the sequencer fails, NativeLoglet simply becomes unavailable for appends.

Practically, we found this protocol much easier to implement than fault-tolerant consensus: it took just under 4 months to implement and deploy a production-quality native Loglet.

Striping Loglets

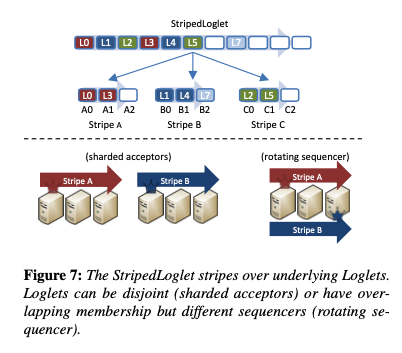

The StripedLoglet is where things start to get even more interesting. A StripedLoglet is responsible for one portion of the global log address space, but internally it further maps (stripes) that portion over multiple nested Loglets.

This provides a simple way (about 300 lines of code) to scale throughput. For example, a shared sequencer bottleneck can be relieved by introducing a rotating sequencer – multiple sequencers dividing an address space between them and sharing the same underlying LogServers. Alternatively, the address space can be mapped to multiple underlying LogServer clusters to increase throughput.

Even though it is a composite, a StripedLoglet must still be sealed as a whole even if only one of its stripes needs to be reconfigured.
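As a rough picture of the striping, the sketch below assumes a simple round-robin mapping (position p goes to stripe p mod n, at offset p div n) and a single in-order writer. The mapping, the class names, and the single-writer simplification are my assumptions for illustration; the real StripedLoglet has to cope with concurrent writers, which this ignores.

```python
class SealedError(Exception):
    pass


class Loglet:
    """Stand-in for one nested Loglet (hypothetical)."""

    def __init__(self):
        self.entries = []
        self.sealed = False

    def append(self, entry):
        if self.sealed:
            raise SealedError()
        self.entries.append(entry)
        return len(self.entries) - 1


class StripedLoglet:
    """Spreads one address space over several nested Loglets."""

    def __init__(self, stripes):
        self.stripes = stripes
        self._tail = 0  # next position in the striped address space

    def locate(self, position):
        """Round-robin mapping: returns (stripe index, offset in stripe)."""
        n = len(self.stripes)
        return position % n, position // n

    def append(self, entry):
        stripe, _offset = self.locate(self._tail)
        self.stripes[stripe].append(entry)  # raises SealedError once sealed
        self._tail += 1
        return self._tail - 1

    def read(self, position):
        stripe, offset = self.locate(position)
        return self.stripes[stripe].entries[offset]

    def seal(self):
        # The composite is sealed as a whole: every stripe is sealed.
        for stripe in self.stripes:
            stripe.sealed = True


striped = StripedLoglet([Loglet() for _ in range(3)])
for i in range(7):
    striped.append(f"e{i}")
```

With three stripes, positions 0, 3, and 6 land on the first stripe, 1 and 4 on the second, and 2 and 5 on the third.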

Delos in production

The evaluation section has lots of good information on experiences running Delos in production, as well as some synthetic benchmarks. The in-production switch from a ZK Loglet to NativeLoglet was done for the first time on April 2nd 2019, and gave a 10x improvement at p99 for gets, and a 5x improvement for writes.

My favourite example here is a really cool use case for reconfiguration. Most of the time Delos runs with a converged Loglet implementation, since this reduces the external dependencies of a very critical system. Under a load spike though, it can be reconfigured to run with a disaggregated Loglet, giving higher throughput. A related example is when Delos is running in converged mode and e.g. two of the five converged database replicas crash. Now the database and the shared log are fate-sharing and at risk if one more node is lost…

In this situation (which was not uncommon), we found it valuable to reconfigure the system to a disaggregated log, temporarily decoupling the fate of the database and the log. Once the database was restored to five replicas, we reconfigured back.

The overheads of virtualisation are pleasingly low: about 100-150µs at p99 latency, 10s of ms for reconfiguration, and no impact on peak throughput.

Limitations and future work

It all sounds pretty amazing, doesn’t it! There are a couple of limitations: (1) consensus protocols that exploit speculation or commutativity don’t currently fit under the Loglet API (“In future work, we plan to extend virtual consensus to partially ordered shared logs”), and (2) there’s a latency hit for VirtualLog-driven reconfiguration which may or may not be important in your scenario.