Can someone explain what a stretched 3×3 kernel to 5×5 kernel with insertion of zeros mean. How is this helpful and where would it be used.

submitted by /u/larekop

[visit reddit] [comments]

Can someone explain what a stretched 3×3 kernel to 5×5 kernel with insertion of zeros mean. How is this helpful and where would it be used.

submitted by /u/larekop

[visit reddit] [comments]

I’m doing some testing using Google Cloud AI Platform and have seen some strange variation in training times. As an example, I did a test run that had an average training time of around 3.2 seconds per batch. I repeated it with the exact same hyperparameters and machine type and it took around 2.4 seconds the next time. Is there some explanation for this other than one GPU I’m assigned to being better in some way than another? That doesn’t really make sense either, but I don’t know how else to explain it.

submitted by /u/EdvardDashD

[visit reddit] [comments]

I have a dataset of type:

<BatchDataset shapes: ((None, 256, 256, 3), (None,)), types: (tf.float32, tf.int32)>

How do i convert it into a dataset of type:

<PrefetchDataset shapes: {image: (256, 256, 3), label: ()}, types: {image: tf.uint8, label: tf.int64}>

in tensorflow

submitted by /u/Accurate-Ad-7303

[visit reddit] [comments]

For the ~466 million people in the world who are deaf or hard of hearing, the lack of easy access to accessibility services can be a barrier to participating in spoken conversations encountered daily. While hearing aids can help alleviate this, simply amplifying sound is insufficient for many. One additional option that may be available is the cochlear implant (CI), which is an electronic device that is surgically inserted into a part of the inner ear, called the cochlea, and stimulates the auditory nerve electrically via external sound processors. While many individuals with these cochlear implants can learn to interpret these electrical stimulations as audible speech, the listening experience can be quite varied and particularly challenging in noisy environments.

Modern cochlear implants drive electrodes with pulsatile signals (i.e., discrete stimulation pulses) that are computed by external sound processors. The main challenge still facing the CI field is how to best process sounds — to convert sounds to pulses on electrodes — in a way that makes them more intelligible to users. Recently, to stimulate progress on this problem, scientists in industry and academia organized a CI Hackathon to open the problem up to a wider range of ideas.

In this post, we share exploratory research demonstrating that a speech enhancement preprocessor — specifically, a noise suppressor — can be used at the input of a CI’s processor to enhance users’ understanding of speech in noisy environments. We also discuss how we built on this work in our entry for the CI Hackathon and how we will continue developing this work.

Improving CIs with Noise Suppression

In 2019, a small internal project demonstrated the benefits of noise suppression at the input of a CI’s processor. In this project, participants listened to 60 pre-recorded and pre-processed audio samples and ranked them by their listening comfort. CI users listened to the audio using their devices’ existing strategy for generating electrical pulses.

| Audio without background noise | ||

| Audio with background noise | ||

| Audio with background noise + noise suppression | ||

| Background audio clip from “IMG_0991.MOV” by Kenny MacCarthy, license: CC-BY 2.0. |

As shown below, both listening comfort and intelligibility usually increased, sometimes dramatically, when speech with noise (the lightest bar) was processed with noise suppression.

For the CI Hackathon, we built on the project above, continuing to leverage our use of a noise suppressor while additionally exploring an approach to compute the pulses too

Overview of the Processing Approach

The hackathon considered a CI with 16 electrodes. Our approach decomposes the audio into 16 overlapping frequency bands, corresponding to the positions of the electrodes in the cochlea. Next, because the dynamic range of sound easily spans multiple orders of magnitude more than what we expect the electrodes to represent, we aggressively compress the dynamic range of the signal by applying “per-channel energy normalization” (PCEN). Finally, the range-compressed signals are used to create the electrodogram (i.e., what the CI displays on the electrodes).

In addition, the hackathon required a submission be evaluated in multiple audio categories, including music, which is an important but notoriously difficult category of sounds for CI users to enjoy. However, the speech enhancement network was trained to suppress non-speech sounds, including both noise and music, so we needed to take extra measures to avoid suppressing instrumental music (note that in general, music suppression might be preferred by some users in certain contexts). To do this, we created a “mix” of the original audio with the noise-suppressed audio so that enough of the music would pass through to remain audible. We varied in real-time the fraction of original audio mixed from 0% to 40% (0% if all of the input is estimated as speech, up to 40% as more of the input is estimated as non-speech) based on the estimate from the open-source YAMNet classifier on every ~1 second window of audio of whether the input is speech or non-speech.

The Conv-TasNet Speech Enhancement Model

To implement a speech enhancement module that suppresses non-speech sounds, such as noise and music, we use the Conv-TasNet model, which can separate different kinds of sounds. To start, the raw audio waveforms are transformed and processed into a form that can be used by a neural network. The model transforms short, 2.5 millisecond frames of input audio with a learnable analysis transform to generate features optimized for sound separation. The network then produces two “masks” from those features: one mask for speech and one mask for noise. These masks indicate the degree to which each feature corresponds to either speech or noise. Separated speech and noise are reconstructed back to the audio domain by multiplying the masks with the analysis features, applying a synthesis transform back to audio-domain frames, and stitching the resulting short frames together. As a final step, the speech and noise estimates are processed by a mixture consistency layer, which improves the quality of the estimated waveforms by ensuring that they sum up to the original input mixture waveform.

|

| Block diagram of the speech enhancement system, which is based on Conv-TasNet. |

The model is both causal and low latency: for each 2.5 milliseconds of input audio, the model produces estimates of separated speech and noise, and thus could be used in real-time. For the hackathon, to demonstrate what could be possible with increased compute power in future hardware, we chose to use a model variant with 2.9 million parameters. This model size is too large to be practically implemented in a CI today, but demonstrates what kind of performance would be possible with more capable hardware in the future.

Listening to the Results

As we optimized our models and overall solution, we used the hackathon-provided vocoder (which required a fixed temporal spacing of electrical pulses) to produce audio simulating what CI users might perceive. We then conducted blind A-B listening tests as typical hearing users.

Listening to the vocoder simulations below, the speech in the reconstructed sounds — from the vocoder processing the electrodograms — is reasonably intelligible when the input sound doesn’t contain too much background noise, however there is still room to improve the clarity of the speech. Our submission performed well in the speech-in-noise category and achieved second place overall.

| Simulated audio with fixed temporal spacing |

| Vocoder simulation of what CI users might perceive from audio from an electrodogram with fixed temporal spacing, with background noise and noise suppression applied. |

A bottleneck on quality is that the fixed temporal spacing of stimulation pulses sacrifices fine-time structure in the audio. A change to the processing to produce pulses timed to peaks in the filtered sound waveforms captures more information about the pitch and structure of sound than is conventionally represented in implant stimulation patterns.

| Simulated audio with adaptive spacing and fine time structure |

| Vocoder simulation, using the same vocoder as above, but on an electrodogram from the modified processing that synchronizes stimulation pulses to peaks of the sound waveform. |

It’s important to note that this second vocoder output is overly optimistic about how well it might sound to a real CI user. For instance, the simple vocoder used here does not model how current spread in the cochlea blurs the stimulus, making it harder to resolve different frequencies. But this at least suggests that preserving fine-time structure is valuable and that the electrodogram itself is not the bottleneck.

Ideally, all processing approaches would be evaluated by a broad range of CI users, with the electrodograms implemented directly on their CIs rather than relying upon vocoder simulations.

Conclusion and a Call to Collaborate

We are planning to follow up on this experience in two main directions. First, we plan to explore the application of noise suppression to other hearing-accessibility modalities, including hearing aids, transcription, and vibrotactile sensory substitution. Second, we’ll take a deeper dive into the creation of electrodogram patterns for cochlear implants, exploiting fine temporal structure that is not accommodated in the usual CIS (continous interleaved sampling) patterns that are standard in the industry. According to Louizou: “It remains a puzzle how some single-channel patients can perform so well given the limited spectral information they receive”. Therefore, using fine temporal structure might be a critical step towards achieving an improved CI experience.

Google is committed to building technology with and for people with disabilities. If you are interested in collaborating to improve the state of the art in cochlear implants (or hearing aids), please reach out to ci-collaborators@googlegroups.com.

Acknowledgements

We would like to thank the Cochlear Impact hackathon organizers for giving us this opportunity and partnering with us. The participating team within Google is Samuel J. Yang, Scott Wisdom, Pascal Getreuer, Chet Gnegy, Mihajlo Velimirović, Sagar Savla, and Richard F. Lyon with guidance from Dan Ellis and Manoj Plakal.

An impressive array of NVIDIA GDC announcements elevates game development to the next level. Real-time ray tracing comes to Arm and Linux, DLSS gets an expansive update, the newly announced RTX Memory Utility enables efficient memory allocation, and Omniverse supercharges the development workflow.

An impressive array of NVIDIA GDC announcements elevates game development to the next level. Real-time ray tracing comes to Arm and Linux, DLSS gets an expansive update, the newly announced RTX Memory Utility enables efficient memory allocation, and Omniverse supercharges the development workflow.

Increasingly, game developers are making full use of real-time ray tracing and AI in their games. As a result, more gamers than ever are enjoying the beautifully realized lighting and AI-boosted images that you can only achieve with NVIDIA technology. At GDC 2021, NVIDIA’s updates, enhancements, and platform compatibility expansions enable RTX to be turned ON for a larger base than ever before.

Check out GDC 2021 announcements, video sessions, and game development demos on the NVIDIA at GDC Official Webpage.

Real-Time Ray Tracing Comes to Arm and Linux

NVIDIA RTX enables game developers to integrate stunning real-time ray traced lighting into games. Now, NVIDIA ray tracing and AI are making their premiere on Arm and Linux systems.

Arm processors are the engines inside of billions of power-efficient devices. Linux is an extensively adopted open-source operating system with an avid user base. Together, these two platforms offer a massive new audience for ray tracing technology. To show our commitment to nurturing the Arm and Linux gaming ecosystems, NVIDIA has prepared a demo of Wolfenstein: Youngblood and Amazon’s Bistro scene running RTX on an Arm-based MediaTek processor.

The demos were powered by NVIDIA’s flagship software development kits: RTX Global Illumination (RTXGI), RTX Direct Illumination (RTXDI), NVIDIA Real-Time Denoiser (NRD), Deep Learning Super Sampling (DLSS), and the all-new RTX Memory Utility (RTXMU). Read about the launch of ray tracing on Arm and Linux in the GDC news release.

For more information, contact NVIDIA’s developer relations team or visit developer.nvidia.com.

New Ray Tracing SDK: RTX Memory Utility Improves Memory Allocation for Games

Real-time ray tracing elevates game visuals to new heights with dynamic, physically-accurate lighting running at interactive frame rates. Though the results of ray tracing are stunning, the process is computationally expensive and can put a strain on memory availability in hardware. To alleviate this heavy cost, NVIDIA is releasing a new open source ray tracing SDK, RTX Memory Utility (RTXMU), built to optimize and reduce memory consumption of acceleration structures.

RTXMU uses sophisticated compaction and suballocation techniques that eliminates wasted memory, resulting in a roughly 50% reduction in memory footprint. By freeing this space, larger and more robust ray tracing worlds can be built than ever before.

RTXMU is easy to integrate, provides immediate benefits, and is available today.

Learn how to get started with RTXMU here.

Dive into the details of RTXMU in the developer blog.

DLSS Update brings Linux Support, Streamlined Access, new Customizable Options

A new DLSS update brings support for games running natively on Linux, alongside support for Vulkan API games on Proton introduced in June 2021. Arm support for DLSS has been announced as well. This update also brings a host of customizable options for both users and developers. New features include a Sharpening Slider that enables user-specific sharpening preferences, an Auto Mode that calibrates DLSS to the optimal quality given a particular resolution, and an Auto-Exposure Option that can improve image quality in low-contrast scenes.

Furthermore, accessing DLSS has been streamlined, and an application is no longer required to download DLSS SDK 2.2.1. DLSS is a game-changing software, and integrating it has never been easier. Learn more about using DLSS in NVIDIA’s DLSS Overview GDC session.

Read more about Linux and Arm support, new customizable options, and how to access DLSS in the DLSS 2.2.1 developer blog.

Download DLSS SDK 2.2.1 here.

Access DLSS in Unreal Engine 5 and Unreal Engine 4.26.

Use DLSS native integration in Unity 2021.2 beta.

NvRTX: NVIDIA’s Branch of Unreal Engine Includes Best-in-Class Ray Tracing Technology

NVIDIA makes it easy for Unreal Engine developers to use RTX and AI in their games with the NvRTX branch, which adds RTX Direct Illumination (RTXDI), RTX Global Illumination (RTXGI), and DLSS to Unreal Engine 4.26 and Unreal Engine 5. Enhancing the game development ecosystem with support like NvRTX is incredibly important to NVIDIA; read more about how NVIDIA strives to empower game creation here.

Delve into how NVIDIA integrates ray tracing support into Unreal Engine with the NvRTX branch, solving ray tracing challenges on our end so that implementation is seamless and intuitive in the hands of developers. Watch the NvRTX Technical Overview GDC session.

Learn about how RTXDI and RTXGI in the NvRTX branch collaborate with a variety of Unreal Engine tools to enable artists to create ray traced scenes and effects. Watch the NvRTX Artists Guide GDC session.

Learn more and download NvRTX for Unreal Engine 5 and Unreal Engine 4.26.

Omniverse Accelerates Game Development to the Speed of Light

For game development, NVIDIA Omniverse offers the ultimate platform for cross-collaboration between the library of applications and development teams that must work in unison to push a game from concept to credits. Omniverse is a powerful collaboration tool that enables seamless communication and so much more — providing engines for simulation, ray traced rendering, and AI development to name a few. Learn about NVIDIA Omniverse’s extensive feature list and everything the platform can offer to game creation in the Omniverse at GDC blog.

Watch NVIDIA’s Omniverse Game Development GDC session, covering how to connect your favorite industry tools to the Omniverse Nucleus as well as how to use Extensions to build custom tools for the workflow.

Learn even more about Omniverse here.

NVIDIA Nsight Updates: Optimize and Debug GPU Performance on a Super-Granular Scale

NVIDIA Nsight enables developers to build and profile state-of-the-art games and applications that harness the full power of NVIDIA GPUs. Announced at GDC is a new serving of Nsight Developer Tools to improve the debugging and optimization process, learn more about these new additions in the Nsight Tools update blog.

Included in the newly available developer tools: Nsight Systems 2021.3, the latest Nsight release adding new features for register dependency visualization. Learn more about Nsight Systems 2021.3 in the release blog.

You can also read more about Nsight Graphics 2021.3, NVIDIA’s tool for deep analysis of GPU performance, in the Nsight Graphics 2021.3 release blog.

Ensuring that the GPU is being fully utilized when performing ray tracing tasks can be challenging. Explore how Nsight and other NVIDIA Developer Tools allow for optimizing and debugging GPU performance in the Nsight: Developer Tools GDC session.

Watch a demo of NSight Graphics in action below.

That’s a wrap on NVIDIA at GDC 2021!

Take a closer look at our game development SDKs and developer resources here.

Learn more at NVIDIA’s GDC webpage here.

Become an NVIDIA Developer today: Join the NVIDIA Developer Program.

For Python 3.8 and TensorFlow 2.5, I have a 3-D tensor of shape (3, 3, 3) where the goal is to compute the L2-norm for each of the three (3, 3) square matrices. The code that I came up with is:

a = tf.random.normal(shape = (3, 3, 3)) a.shape # TensorShape([3, 3, 3]) a.numpy() ''' array([[[-0.30071023, 0.9958398 , -0.77897555], [-1.4251901 , 0.8463568 , -0.6138699 ], [ 0.23176959, -2.1303613 , 0.01905925]], [[-1.0487134 , -0.36724553, -1.0881581 ], [-0.12025198, 0.20973174, -2.1444907 ], [ 1.4264063 , -1.5857363 , 0.31582597]], [[ 0.8316077 , -0.7645084 , 1.5271858 ], [-0.95836663, -1.868056 , -0.04956183], [-0.16384012, -0.18928945, 1.04647 ]]], dtype=float32) '''

I am using axis = 2 since the 3rd axis should contain three 3×3 square matrices. The output I get is:

tf.math.reduce_euclidean_norm(input_tensor = a, axis = 2).numpy() ''' array([[1.299587 , 1.7675754, 2.1430166], [1.5552354, 2.158075 , 2.15614 ], [1.8995634, 2.1001325, 1.0759989]], dtype=float32) '''

How are these values computed? The formula for computing L2-norm is this. What am I missing?

Also, I was expecting three L2-norm values, one for each of the three (3, 3) matrices. The code I have to achieve this is:

tf.math.reduce_euclidean_norm(a[0]).numpy() # 3.0668826 tf.math.reduce_euclidean_norm(a[1]).numpy() # 3.4241767 tf.math.reduce_euclidean_norm(a[2]).numpy() # 3.0293021

Is there any better way to get this without having to explicitly refer to each indices of tensor ‘a’?

Thanks!

submitted by /u/grid_world

[visit reddit] [comments]

The DevKit is an integrated hardware-software platform for creating, evaluating, and benchmarking HPC, AI, and scientific computing applications for Arm server based accelerated platforms.

The DevKit is an integrated hardware-software platform for creating, evaluating, and benchmarking HPC, AI, and scientific computing applications for Arm server based accelerated platforms.

Today NVIDIA announced the availability of the NVIDIA Arm HPC Developer Kit with the NVIDIA HPC SDK version 21.7. The DevKit is an integrated hardware-software platform for creating, evaluating, and benchmarking HPC, AI, and scientific computing applications for Arm server based accelerated platforms. The HPC SDK v21.7 is the latest update of the software development kit, and fully supports the new Arm HPC DevKit.

This DevKit targets heterogeneous GPU/CPU system development, and includes an Arm CPU, two NVIDIA A100 Tensor Core GPUs, two NVIDIA BlueField-2 data processing units (DPUs), and the NVIDIA HPC SDK suite of tools.

The integrated HW/SW DevKit delivers:

The NVIDIA Arm HPC Developer Kit is based on the GIGABYTE G242-P32 2U server, and leverages the NVIDIA HPC SDK, a comprehensive suite of compilers, libraries, and tools for HPC delivering performance, portability, and productivity. The platform will support Ubuntu, SLES, and RHEL operating systems.

HPC SDK 21.7 includes:

Previously HPC SDK 21.5 introduced support for:

The NVIDIA HPC SDK C++ and Fortran compilers are the first compilers to support automatic GPU acceleration of standard language constructs including C++17 parallel algorithms and Fortran intrinsics.

Download the HPC SDK v21.7 for free today.

Contact our partner GIGABYTE about the hardware pricing and availability via the link on our DevKit web page.

Learn more about NVIDIA and Arm support:

At GDC 2021, NVIDIA introduced a suite of Omniverse apps and tools to simplify and accelerate game development content creation pipelines.

At GDC 2021, NVIDIA introduced a suite of Omniverse apps and tools to simplify and accelerate game development content creation pipelines.

Content creation in game development involves multiple steps and processes, which can be notoriously complicated. To create the best experiences, game artists need to build massive libraries of 3D content while incorporating realistic lighting, physics and optimal game performance with AI. An explosion in the number of Digital Content Creation (DCC) tools to design different elements of a game leads to long review cycles, and difficulties in maximizing iteration. And often, studios are spending development hours creating their own proprietary tools to enable these workflows.

At GDC 2021, NVIDIA introduced a suite of Omniverse apps and tools to simplify and accelerate game development content creation pipelines. Developers can plug into any layer of the platform stack — whether at the top level, utilizing pre-built Omniverse Apps such as Create, Machinima, or Audio2Face; or the platform component level, to easily build custom extensions and tools to accelerate their workflow.

USD Comes to Game Development

Universal Scene Description (USD), the foundation of NVIDIA Omniverse, is an easily extensible, open-source 3D scene description and file format developed by Pixar for content creation and interchange among different tools.

Because of its versatility, USD is now being widely adopted across industries like media and entertainment, architecture, robotics, manufacturing — and now, game development.

Luminous and Embark Studios, two of the early evaluators of NVIDIA Omniverse for game development, have adopted USD to leverage the Omniverse-connected ecosystem and accelerate their workflows.

“Game development content pipelines are complex and require us to use the best aspects of multiple applications,” said Takeshi Aramaki, Studio Head and VP at Luminous. “By adopting Pixar’s Universal Scene Description (USD), we will leverage universal asset and application interoperability across our tools to accelerate time to production and optimize our workflows.”

Accelerate Workflows with Live-Sync Collaboration and Intuitive Tools

When it comes to creating content, game developers must use various industry tools, many of which are often incompatible. Omniverse Connectors, which are plug-ins to popular applications, provide game developers with the ability to work live and simultaneously across their favorite applications, so they can easily speed up workflows.

And with Omniverse Create, developers can leverage simple, intuitive tools to build and test content, and rapidly iterate during creation pipelines. Use paint tools for set dressing, or tap into Omniverse Physics — like PhysX 5, Flow, and Blast — to bring realistic details to 3D models. NVIDIA RTX technology enables real-time ray tracing and path tracing for ground truth lighting. And users can easily stream content from Omniverse, so they can view models or assets on any device.

Simplify Asset Management Woes

Game developers are burdened with extremely large asset catalogs being built over several years, by thousands of artists and developers, across several studios. Accelerating and simplifying asset search and management is critical to maintaining productivity and limiting cost spent on duplicating assets that can’t be found.

With Omniverse Nucleus, the core collaboration and database engine for ease of 3D asset interchange, assets are stored as ground truth, and can easily be passed from artist to artist, or studio to studio.

Plus, with Omniverse’s AI and advanced rendering capabilities, developers can leverage Omniverse DeepSearch to easily search through thousands of 3D assets using still images or natural language, including adjectives or qualifiers.

An AI-Powered Playground

Realistic facial animation is a notoriously tedious process, but game creators can add enhanced levels of detail to characters using Omniverse Audio2Face, an app that automatically generates facial animation using AI. Audio2Face allows developers to create realistic facial expressions and motions to match any voice-over track. The technology feeds the audio input into a pre-trained Deep Neural Network, and the output of the network drives the facial animation of 3D characters in real time.

And Omniverse Machinima is a tool that helps game developers create cinematic animations and storytelling with their USD-based assets, or they can seed to their community to generate remixed User Generated Content to promote iconic characters or scenes. Today, Machinima includes notable assets from Mount & Blade II: Bannerlord and Squad, with more to come.

Kit Extensions System

The Omniverse Kit Extensions system enables anybody with basic programming knowledge to build powerful tools quickly and distribute them to the content makers, or to package them into micro-services to empower new distributed workflows. Extensions are mostly authored in Python for ultimate usability and have source code provided, so developers can inspect, experiment and build to suit their needs using a Script Editor.

Developers can also use the powerful Omni.UI system — an ultra-lightweight, GPU-accelerated user interface framework that is the foundational UI for all Omniverse Kit-based applications which is fully styleable, similar to HTML stylesheets, and works on Linux and Windows with DX12 and Vulkan-accelerated backends.

Graph Editing Framework

For team members without extensive scripting or coding experience, Omni.UI Graph is an easy-to-use graph editing framework to develop custom behaviors for extensions or apps. With Omni.UI Graph, Omniverse Kit and some skills in Python, users can intuitively create and customize extensions at runtime for fast iteration.

Dive further into NVIDIA Omniverse for Game Development with free access to our GTC On-Demand sessions, Omniverse Tutorials, and Twitch live streams. For technical questions, chat with our team in the Omniverse Forums, and connect with the community on the Omniverse Discord server.

The intensive care unit (ICU) of a hospital looks after the most medically vulnerable patients, many of whom require organ support, such as mechanical ventilation or dialysis. While always critical, the demand on ICU services during the COVID-19 pandemic has further underscored the importance of data-driven decision-making in healthcare. Furthermore, the ability to accurately predict the clinical outcomes of ICU patients has the potential to guide therapy and may inform decisions about most effective care, including staffing and triage support.

Applying machine learning (ML) to electronic health records (EHRs) has shown promise in predicting clinical outcomes. However, many of these ML models are based on single-task learning (ST), where the models are trained only to predict a specific adverse event, such as an organ dysfunction or the need for a life-support intervention. Of greater benefit would be to train multi-task models, which take into account a variety of competing risks along with the interdependencies between organ systems that factor into patient outcomes in a realistic setting.

In “Multi-task prediction of organ dysfunction in the ICU using sequential sub-network routing”, we propose a multi-task learning (MTL) architecture, called Sequential Sub-Network Routing (SeqSNR), that better captures the complexity of a realistic setting. Inspired by a clinician’s holistic approach to diagnosing problems, SeqSNR is designed to use flexible parameter sharing and routing to find related tasks and encourage cross-learning between them. We successfully applied SeqSNR to the task of continuous adverse event prediction in an ICU setting and showed advantages over single-task and naïve multi-tasking, especially in low training data scenarios.

Data and Labels

In this study, we used the freely available, open access, de-identified MIMIC-III EHR dataset, which includes a patient cohort consisting of 36,498 adults across 52,038 critical care admissions at the Beth Israel Deaconess Medical Center between 2001 and 2012. Similar to our previous studies, we employed a version of the MIMIC-III dataset that was mapped to the Fast Healthcare Interoperability Resource (FHIR) standard and used a comprehensive set of features, including a sequence of vital signs, laboratory results, past medications, procedures, diagnoses, and more.

The MIMIC-III database contains multi-modal recordings from ICU patients. Unlike most datasets in ML, the input and targets are often not explicitly defined and must be inferred from the data. So, using a combination of automated rule-based methods and clinical review, we defined a suite of diverse endpoints, including critical care interventions, specific organ dysfunctions, and overall patient outcomes.

The task given to the model was to predict the onset of a selection of adverse events within 24–48 hours for every hour after a patient’s admission into the ICU. The defined adverse events included acute kidney injury (AKI), continuous renal replacement therapy (CRRT) dialysis, administration of vasopressors and inotropes, mechanical ventilation (MV), mortality, and remaining length of stay (LoS).

The SeqSNR Algorithm

While multi-task learning captures the interdependencies between organ systems and balances competing risks, it can be challenging to implement successfully. In practice, jointly-trained tasks often impair one another, an effect called “negative transfer”. The intuition behind SeqSNR was that modular ‘sub-networks’ would mitigate this issue by automatically optimizing how information is shared across multiple tasks.

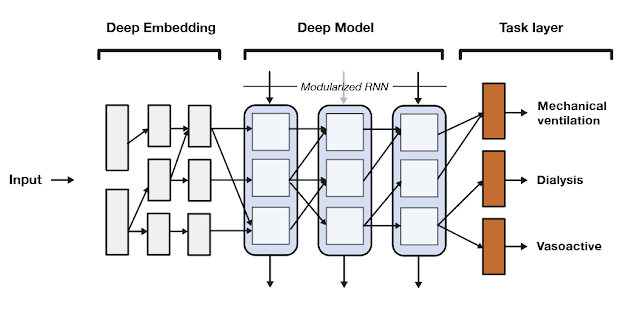

SeqSNR is a time series adaptation of the SNR architecture and is a combination of a deep embedding layer followed by stacked recurrent neural network (RNN) layers. Modularisation is achieved by splitting both the embedding layer and the RNN stack into multiple modules connected by routing variables that are learned during the training phase. The routing connections are always created between blocks in one layer and the next. This approach minimizes negative transfer by ensuring that data of low relevance to a particular task layer is filtered out. In essence, this means that each task utilizes a different path through the model.

|

| A high-level overview of the SeqSNR architecture. |

Findings

SeqSNR shows a modest improvement in discriminative performance overall relative to single-task and naïve multitasking. However, it’s performance improvement is more significant in scenarios with few training labels.

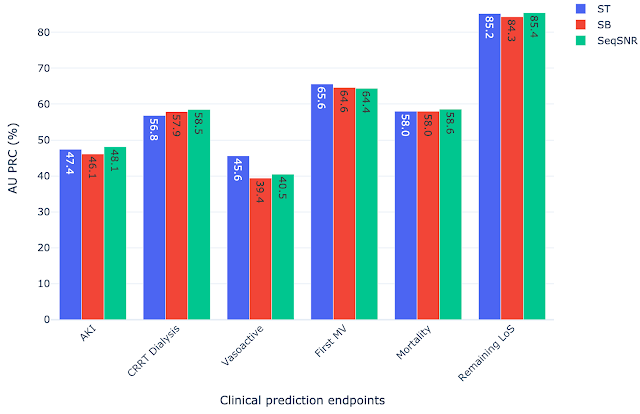

Because the prevalence of different outcomes varied widely in the dataset (e.g. ~38% of patients had MV, but CRRT dialysis is present for only ~3%), many accuracy metrics are not suitable. Instead, we report the area under the precision recall curve (AU PRC), which is more reliable given imbalanced data. Moreover, we performed the Wilcoxon Signed Rank Tests to draw statistically significant conclusions for pairwise comparisons of ST learning, shared-bottom (SB) multi-task learning (i.e., naïve multi-task learning), and SeqSNR across bootstrapped samples from the held-out test set. The performance differences between the three architectures were modest, but SeqSNR outperformed both ST and SB in four out of six tasks (p-values are reported in the paper).

|

| Comparison of single task (ST), shared bottom (SB) and SeqSNR performance on the MIMIC-III dataset. |

Label Efficiency

We hypothesized that multi-task learning could assist in low-data scenarios by using easy-to-label auxiliary tasks to boost the performance of the main tasks. We formulated prediction tasks with only a portion of the training labels available for the primary prediction task, but kept the entire dataset for the “helper tasks”. The latter are chosen because they are reliably encoded in the EHR and are straightforward to timestamp. An example of such a helper task is length of stay, since the start and end of admissions are accurately timestamped in MIMIC-III. On the other hand, the start and end of mechanical ventilation events are not reliably timestamped. So, we defined a set of rules based on expert-defined heuristics to determine the ventilation times using multiple sources of mechanical ventilator–related settings along with physiological measurements in the EHR dataset that are indicative of MV.

The development of these rules for a new clinical endpoint was time-consuming and involved manual review of the dataset by experts. The difficulty in exhaustively labeling the dataset led us to test the model performance with only 1–10% of the data labeled, which resulted in a decline in model performance. The “helper tasks” are useful in this scenario since they are 100% labeled and can be used with the primary tasks (1–10% labeled) to jointly train the multi-task model for improved overall performance.

We chose AKI, mechanical ventilation, CRRT Dialysis, and vasoactive medications as primary endpoints using 1%, 5%, and 10% of the training labels, along with 100% of labels for the helper tasks — labs and vitals, mortality, and LoS. Performance of both ST and SeqSNR decreased as the percentage of labels for the primary endpoint was reduced, but SeqSNR outperformed ST across all tasks and all training data reduction percentages, with a statistically significant boost in performance for all cases.

This is a useful finding, given the difficulties of annotating endpoint labels in EHR datasets, which frequently necessitates human evaluation by doctors. The ability to use numerous endpoints, some of which may be easier to label (like duration of stay or mortality), could lessen the need for manual curation on more difficult endpoints that are annotated differently (like mechanical ventilation).

Subgroup Performance

While the version of the MIMIC-III dataset used contained labels for gender and age, it did not contain information on race and the information on ethnicity was limited. We computed the performance of all selected models across age and gender subgroups. We observed that in the scenarios with few instances in the dataset, the MTL models (both SB models and SeqSNR) often outperform ST. Even though there are exceptions, on average all models seem to be relatively balanced across age and gender subgroups. We invite the reader to refer to the supplemental section of our paper for a detailed performance breakdown.

Next Steps

This work is a proof of concept for SeqSNR on a set of canonical EHR prediction tasks. The code for this architecture is publicly available here. And will hopefully stimulate further research in EHR multi-tasking and other deep learning architectures inspired by clinical reasoning.

In future, it will be important to evaluate the performance of SeqSNR on different combinations of tasks, different time horizons and different datasets. One other area of potential growth in this project is to expand subgroup analysis by including datasets with additional population information, race, ethnicity, etc. Another area we are exploring is expanding subgroup analysis by including datasets with additional population information, such as race, ethnicity, etc. We also emphasize that these are prototype models designed to showcase methodologies, and more rigorous evaluation would be needed to bring these tools into deployment.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of researchers, software engineers, clinicians, and cross-functional contributors. We thank our co-authors: Eric Loreaux, Anne Mottram, Ivan Protsyuk, Natalie Harris, Sebastien Baur, Yuan Xue, Jessica Schrouff, Ali Connell, Alan Karthikesalingam, Martin Seneviratne from Google, Nenad Tomasev from Deepmind, and Hugh Montgomery from University College London. We also thank Zhe Zhao from Google Research and Kathryn Rough, Cian Hughes, Megumi Morigami and Doris Wong from Google Health for their input and review, and the MIMIC team for curating this open access dataset for the research community.

NVIDIA Operators streamline installing and managing GPUs and NICs on Kubernetes to make the software stack ready to run the most resource-demanding workloads, such as AI, ML, DL, and HPC, in the cloud, data center, and at the edge.

NVIDIA Operators streamline installing and managing GPUs and NICs on Kubernetes to make the software stack ready to run the most resource-demanding workloads, such as AI, ML, DL, and HPC, in the cloud, data center, and at the edge.

Kubernetes is an open-source container-orchestration system for automating computer application deployment, scaling, and management. It’s an extremely popular tool, and can be used for automated rollouts and rollbacks, horizontal scaling, storage orchestration, and more. For many organizations, Kubernetes is a key component to their infrastructure.

A critical step to installing and scaling Kubernetes is ensuring that it is properly utilizing the other components of the infrastructure. NVIDIA Operators streamline installing and managing GPUs and NICs on Kubernetes to make the software stack ready to run the most resource-demanding workloads, such as AI, ML, DL, and HPC, in the cloud, data center, and at the edge. NVIDIA Operators consist of the GPU Operator and the Network Operator, and are open source and based on the Operator Framework.

NVIDIA GPU Operator

The NVIDIA GPU Operator is packaged as a Helm Chart and installs and manages the lifecycle of software components so that the GPU-accelerated applications can be run on Kubernetes. The components are the GPU feature discovery, the NVIDIA Driver, the Kubernetes Device Plugin, the NVIDIA Container Toolkit, and DCGM Monitoring.

The GPU Operator enables infrastructure teams to manage the lifecycle of GPUs when used with Kubernetes at the Cluster level, therefore eliminating the need to manage each node individually. Previously infrastructure teams had to manage two operating system images, one for GPU nodes and one CPU nodes. When using the GPU Operator, infrastructure teams can use the CPU image with GPU worker nodes as well.

NVIDIA Network Operator

The Network Operator is responsible for automating the deployment and management of the host networking components in a Kubernetes cluster. It includes the Kubernetes Device Plugin, NVIDIA Driver, NVIDIA Peer Memory Driver, and the Multus, macvlan CNIs. These components were previously installed manually, but are automated through the Network Operator, streamlining the deployment process and enabling accelerated computing with enhanced customer experience.

Used independently or together, NVIDIA Operators simplify GPU and SmartNIC configurations on Kubernetes and are compatible with partner cloud platforms. To learn more about these components and how the NVIDIA Operators solve the key challenges to running AI, ML, DL, and HPC workloads and simplify initial setup and Day 2 operations, check out the on-demand webinar “Accelerating Kubernetes with NVIDIA Operators“.