Replit aims to empower the next billion software creators. In this week’s episode of NVIDIA’s AI Podcast, host Noah Kravitz dives into a conversation with Replit CEO Amjad Masad. Read article >

On Aug. 29, learn how to create efficient AI models with NVIDIA TAO Toolkit on STM32 MCUs.

Editor’s note: This post is part of Into the Omniverse, a series focused on how artists, developers and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse. Whether animating a single 3D character or generating a group of them for industrial digitalization, creators and developers who use the popular Reallusion Read article >

The start of a new school year is an ideal time for students to upgrade their content creation, gaming and educational capabilities by picking up an NVIDIA Studio laptop, powered by GeForce RTX 40 Series graphics cards. Read article >

When it comes to preserving profit margins, data scientists for vehicle and parts manufacturers are sitting in the driver’s seat. Viaduct, which develops models for time-series inference, is helping enterprises harvest failure insights from the data captured on today’s connected cars. Read article >

Reading has many benefits for young students, such as better linguistic and life skills, and reading for pleasure has been shown to correlate with academic success. Furthermore, students have reported improved emotional wellbeing from reading, as well as better general knowledge and better understanding of other cultures. With the vast amount of reading material both online and off, finding age-appropriate, relevant and engaging content can be a challenging task, but helping students do so is a necessary step to engage them in reading. Effective recommendations that present students with relevant reading material help keep students reading, and this is where machine learning (ML) can help.

ML has been widely used in building recommender systems for various types of digital content, ranging from videos to books to e-commerce items. Recommender systems are used across a range of digital platforms to help surface relevant and engaging content to users. In these systems, ML models are trained to suggest items to each user individually based on user preferences, user engagement, and the items under recommendation. These data provide a strong learning signal for models to be able to recommend items that are likely to be of interest, thereby improving user experience.

In “STUDY: Socially Aware Temporally Causal Decoder Recommender Systems”, we present a content recommender system for audiobooks in an educational setting that takes into account the social nature of reading. We developed the STUDY algorithm in partnership with Learning Ally, an educational nonprofit aimed at promoting reading in dyslexic students, which provides audiobooks to students through a school-wide subscription program. Leveraging the wide range of audiobooks in the Learning Ally library, our goal is to help students find the right content to help boost their reading experience and engagement. Motivated by the fact that what a person’s peers are currently reading has significant effects on what they would find interesting to read, we jointly process the reading engagement history of students who are in the same classroom. This allows our model to benefit from live information about what is currently trending within the student’s localized social group, in this case, their classroom.

Data

Learning Ally has a large digital library of curated audiobooks targeted at students, making it well-suited for building a social recommendation model to help improve student learning outcomes. We received two years of anonymized audiobook consumption data. All students, schools and groupings in the data were anonymized, only identified by a randomly generated ID not traceable back to real entities by Google. Furthermore, all potentially identifiable metadata was only shared in an aggregated form, to protect students and institutions from being re-identified. The data consisted of time-stamped records of students’ interactions with audiobooks. For each interaction we have an anonymized student ID (which includes the student’s grade level and anonymized school ID), an audiobook identifier and a date. While many schools distribute students in a single grade across several classrooms, we leverage this metadata to make the simplifying assumption that all students in the same school and in the same grade level are in the same classroom. While this provides the foundation needed to build a better social recommender model, it’s important to note that this does not enable us to re-identify individuals, class groups or schools.

The STUDY algorithm

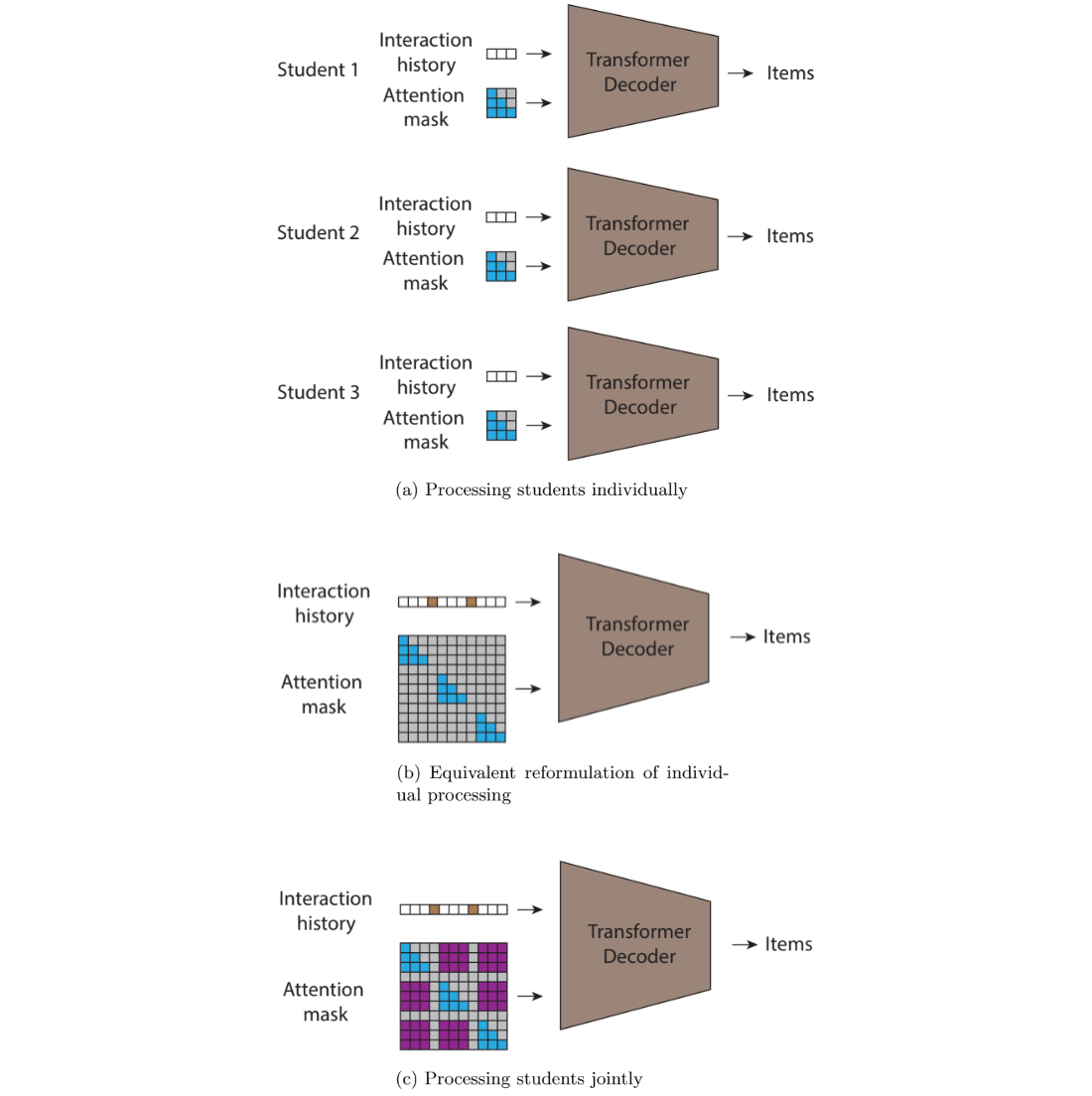

We framed the recommendation problem as a click-through rate prediction problem, where we model the conditional probability of a user interacting with each specific item conditioned on both 1) user and item characteristics and 2) the item interaction history sequence for the user at hand. Previous work suggests Transformer-based models, a widely used model class developed by Google Research, are well suited for modeling this problem. When each user is processed individually this becomes an autoregressive sequence modeling problem. We use this conceptual framework to model our data and then extend this framework to create the STUDY approach.

While this approach for click-through rate prediction can model dependencies between past and future item preferences for an individual user and can learn patterns of similarity across users at train time, it cannot model dependencies across different users at inference time. To recognize the social nature of reading and remedy this shortcoming we developed the STUDY model, which concatenates multiple sequences of books read by each student into a single sequence that collects data from multiple students in a single classroom.

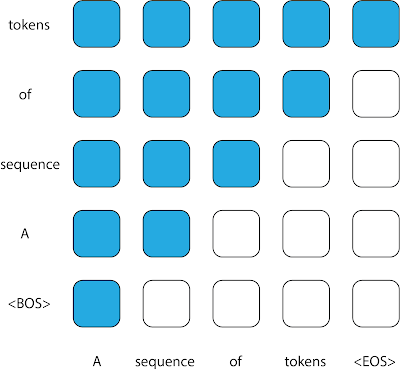

However, this data representation requires care if it is to be modeled by transformers. In transformers, the attention mask is the matrix that controls which inputs can be used to inform the predictions of which outputs. The pattern of using all prior tokens in a sequence to inform the prediction of an output leads to the upper triangular attention matrix traditionally found in causal decoders. However, since the sequence fed into the STUDY model is not temporally ordered, even though each of its constituent subsequences is, a standard causal decoder is no longer a good fit for this sequence. When trying to predict each token, the model is not allowed to attend to every token that precedes it in the sequence; some of these tokens might have timestamps that are later and contain information that would not be available at deployment time.

The STUDY model builds on causal transformers by replacing the triangular matrix attention mask with a flexible attention mask with values based on timestamps to allow attention across different subsequences. Compared to a regular transformer, which would not allow attention across different subsequences and would have a triangular matrix mask within sequence, STUDY maintains a causal triangular attention matrix within a sequence and has flexible values across sequences with values that depend on timestamps. Hence, predictions at any output point in the sequence are informed by all input points that occurred in the past relative to the current time point, regardless of whether they appear before or after the current input in the sequence. This causal constraint is important because if it is not enforced at train time, the model could potentially learn to make predictions using information from the future, which would not be available for a real world deployment.
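To make the masking rule concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function name, the list-of-lists representation, and the choice of a strict "earlier than" comparison across students are all assumptions made for illustration.

```python
def study_attention_mask(student_ids, timestamps):
    """Build a boolean attention mask for a concatenated classroom sequence.

    mask[i][j] is True when the prediction at position i may attend to
    the input at position j. Both arguments are parallel lists describing
    the concatenated sequence (hypothetical representation).
    """
    n = len(timestamps)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):        # output (query) position
        for j in range(n):    # input (key) position
            if student_ids[i] == student_ids[j]:
                # Within one student's subsequence: ordinary causal
                # (triangular) masking by position.
                mask[i][j] = j <= i
            else:
                # Across students: allow attention only to interactions
                # that happened earlier in time (strictness is an assumption),
                # regardless of where they sit in the concatenated sequence.
                mask[i][j] = timestamps[j] < timestamps[i]
    return mask
```

For example, with two students whose interactions are concatenated as `student_ids = ["A", "A", "B", "B"]` and `timestamps = [1, 5, 3, 4]`, student A's second interaction (time 5) may attend to both of student B's interactions even though they appear later in the sequence, while B's interactions may not attend to it.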

Experiments

We used the Learning Ally dataset to train the STUDY model along with multiple baselines for comparison. We implemented an autoregressive click-through rate transformer decoder, which we refer to as “Individual”, a k-nearest neighbor baseline (KNN), and a comparable social baseline, social attention memory network (SAMN). We used the data from the first school year for training and we used the data from the second school year for validation and testing.

We evaluated these models by measuring the percentage of the time the next item the user actually interacted with was in the model’s top n recommendations, i.e., hits@n, for different values of n. In addition to evaluating the models on the entire test set we also report the models’ scores on two subsets of the test set that are more challenging than the whole data set. We observed that students will typically interact with an audiobook over multiple sessions, so simply recommending the last book read by the user would be a strong trivial recommendation. Hence, the first test subset, which we refer to as “non-continuation”, is where we only look at each model’s performance on recommendations when the students interact with books that are different from the previous interaction. We also observe that students revisit books they have read in the past, so strong performance on the test set can be achieved by restricting the recommendations made for each student to only the books they have read in the past. Although there might be value in recommending old favorites to students, much value from recommender systems comes from surfacing content that is new and unknown to the user. To measure this we evaluate the models on the subset of the test set where the students interact with a title for the first time. We name this evaluation subset “novel”.
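The metric and the two subsets described above can be sketched as follows. This is an illustrative sketch rather than the evaluation code used in the paper; the function names and the list-based data layout are assumptions.

```python
def hits_at_n(ranked_recommendations, actual_items, n):
    """Fraction of test examples whose actual next item appears in the
    model's top-n recommendations."""
    if not actual_items:
        raise ValueError("need at least one test example")
    hits = sum(
        actual in ranked[:n]
        for ranked, actual in zip(ranked_recommendations, actual_items)
    )
    return hits / len(actual_items)


def subset_indices(histories, actual_items):
    """Return example indices for the two harder evaluation subsets.

    histories[i] is the list of items the user interacted with before
    the i-th test interaction, oldest first (hypothetical layout).
    """
    non_continuation = []
    novel = []
    for i, (history, actual) in enumerate(zip(histories, actual_items)):
        # Non-continuation: the next item differs from the previous one.
        if not history or history[-1] != actual:
            non_continuation.append(i)
        # Novel: the user has never interacted with this item before.
        if actual not in history:
            novel.append(i)
    return {"non_continuation": non_continuation, "novel": novel}
```

Scoring a subset then amounts to calling `hits_at_n` on only the examples whose indices are in that subset.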

We find that STUDY outperforms all other tested models across almost every single slice we evaluated against.

Importance of appropriate grouping

At the heart of the STUDY algorithm is organizing users into groups and doing joint inference over multiple users who are in the same group in a single forward pass of the model. We conducted an ablation study where we looked at the importance of the actual groupings used on the performance of the model. In our presented model we group together all students who are in the same grade level and school. We then experiment with groups defined by all students in the same grade level and district and also place all students in a single group with a random subset used for each forward pass. We also compare these models against the Individual model for reference.

We found that using groups that were more localized was more effective, with the school and grade level grouping outperforming the district and grade level grouping. This supports the hypothesis that the STUDY model is successful because of the social nature of activities such as reading — people’s reading choices are likely to correlate with the reading choices of those around them. Both of these models outperformed the other two models (single group and Individual) where grade level is not used to group students. This suggests that data from users with similar reading levels and interests is beneficial for performance.
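The grouping step itself is simple to sketch. The snippet below is an illustration only: the record field names (`student_id`, `school_id`, `grade`, `book_id`, `timestamp`) and the helper names are hypothetical, and the real pipeline may organize its data differently.

```python
from collections import defaultdict


def group_interactions(records, group_key):
    """Concatenate per-student interaction sequences within each group.

    records: iterable of dicts with the hypothetical keys listed above.
    group_key: function mapping a record to its group identifier.
    Returns {group_id: [(student_id, timestamp, book_id), ...]}, where each
    student's interactions are time-ordered and students are concatenated
    one after another.
    """
    per_student = defaultdict(list)
    student_group = {}
    for record in records:
        student = record["student_id"]
        per_student[student].append((record["timestamp"], record["book_id"]))
        student_group[student] = group_key(record)

    groups = defaultdict(list)
    for student, events in per_student.items():
        for timestamp, book in sorted(events):
            groups[student_group[student]].append((student, timestamp, book))
    return dict(groups)


def by_school_and_grade(record):
    # The grouping used in the presented model.
    return (record["school_id"], record["grade"])


def single_group(record):
    # Ablation: every student in one group.
    return "all"
```

Swapping `group_key` is enough to move between the school-level grouping and the single-group ablation; a district-level grouping would need a district field, which this sketch does not assume.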

Future work

This work is limited to modeling recommendations for user populations where the social connections are assumed to be homogeneous. In the future it would be beneficial to model a user population where relationships are not homogeneous, i.e., where categorically different types of relationships exist or where the relative strength or influence of different relationships is known.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of researchers, software engineers and educational subject matter experts. We thank our co-authors: Diana Mincu, Lauren Harrell, and Katherine Heller from Google. We also thank our colleagues at Learning Ally, Jeff Ho, Akshat Shah, Erin Walker, and Tyler Bastian, and our collaborators at Google, Marc Repnyek, Aki Estrella, Fernando Diaz, Scott Sanner, Emily Salkey and Lev Proleev.

Optical Character Detection (OCD) and Optical Character Recognition (OCR) are computer vision techniques used to extract text from images. Use cases vary across industries and include extracting data from scanned documents or forms with handwritten texts, automatically recognizing license plates, sorting boxes or objects in a fulfillment center based on serial numbers, identifying components for inspection on assembly lines based on part numbers, and more.

OCR is used in many industries, including financial services, healthcare, logistics, industrial inspection, and smart cities. OCR improves productivity and increases operational efficiency for businesses by automating manual tasks.

To be effective, OCR must achieve or exceed human-level accuracy. It is inherently complicated due to the unique use cases it works across. For example, when OCR is analyzing text, the text can vary in font, size, color, shape, and orientation, and can be handwritten or have other noise like partial occlusion. Fine-tuning the model on the test environment becomes extremely important to maintain high accuracy and reduce error rate.

NVIDIA TAO Toolkit is a low-code AI toolkit that can help developers customize and optimize models for many vision AI applications. NVIDIA introduced new models and features for automating character detection and recognition in TAO 5.0. These models and features will accelerate the creation of custom OCR solutions. For more details, see Access the Latest in Vision AI Model Development Workflows with NVIDIA TAO Toolkit 5.0.

This post is part of a series on using NVIDIA TAO and pretrained models to create and deploy custom AI models to accurately detect and recognize handwritten texts. This part explains the training and fine-tuning of character detection and recognition models using TAO. Part 2 walks you through the steps to deploy the model using NVIDIA Triton. The steps presented can be used with other OCR tasks as well.

NVIDIA TAO OCD/OCR workflow

A pretrained model has been trained on large datasets and can be further fine-tuned with additional data to accomplish a specific task. The Optical Character Detection Network (OCDNet) is a TAO pretrained model that detects text in images with complex backgrounds. It uses a process called differentiable binarization to help accurately locate text of various shapes, sizes, and fonts. The result is a bounding box with the detected text.
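For intuition, differentiable binarization is usually described as replacing a hard threshold with a steep sigmoid, so the thresholding step can be trained with the rest of the network. The sketch below follows that commonly published formulation for a single pixel; it is an assumption that OCDNet uses exactly this form, and the steepness value `k=50` is taken from the original differentiable binarization paper rather than from TAO.

```python
import math


def differentiable_binarization(probability, threshold, k=50.0):
    """Approximate a hard threshold with a steep sigmoid.

    probability: predicted text probability for one pixel, in [0, 1].
    threshold: learned per-pixel threshold, in [0, 1].
    k: amplification factor (assumed value, not confirmed for OCDNet).
    Returns a value close to 1 for text pixels and close to 0 otherwise.
    """
    return 1.0 / (1.0 + math.exp(-k * (probability - threshold)))
```

A pixel well above its threshold maps very close to 1, a pixel well below maps very close to 0, and gradients still flow through pixels near the boundary.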

A text rectifier is middleware that serves as a bridge between character detection and character recognition during the inference phase. Its primary function is to improve the accuracy of recognizing characters on texts that are at extreme angles. To achieve this, the text rectifier takes the vertices of polygons that cover the text area and the original images as inputs.

The Optical Character Recognition Network (OCRNet) is another TAO pretrained model that can be used to recognize the characters of text that reside in the detected bounding box regions. This model takes the image as network input and produces a sequence of characters as output.

Prerequisites

To follow along with the tutorial, you will need the following:

- An NGC account

- The sample Jupyter notebook for training an OCD and OCR model.

- NVIDIA TAO Toolkit 5.0 (Installation instructions are included in the Jupyter notebooks). For a complete set of dependencies and prerequisites, see the TAO Toolkit Quick Start Guide.

Download the dataset

This tutorial fine-tunes the OCD and OCR model to detect and recognize handwritten letters. It works with the IAM Handwriting Database, a large dataset containing various handwritten English text documents. These text samples will be used to train and test handwritten text recognizers for the OCD and OCR models.

To gain access to this dataset, register your email address on the IAM registration page.

Once registered, download the following datasets from the downloads page:

- data/ascii.tgz

- data/formsA-D.tgz

- data/formsE-H.tgz

- data/formsI-Z.tgz

The following section explores various aspects of the Jupyter notebook to delve deeper into the fine-tuning process of OCDNet and OCRNet for the purpose of detecting and recognizing handwritten characters.

Note that this dataset may be used for noncommercial research purposes only. For more details, review the terms of use on the IAM Handwriting Database.

Run the notebook

The OCDR Jupyter notebook showcases how to fine-tune the OCD and OCR models to the IAM handwritten dataset. It also shows how to run inference on the trained models and perform deployment.

Set up environment variables

Set up the following environment variables in the Jupyter notebook to match your current directory, then execute:

%env LOCAL_PROJECT_DIR=home//ocdr_notebook
%env NOTEBOOK_DIR=home//ocdr_notebook
# Set this path if you don't run the notebook from the samples directory.
%env NOTEBOOK_ROOT=home//ocdr_notebook

The following folders will be generated:

- HOST_DATA_DIR contains the train/test split data for model training.

- HOST_SPECS_DIR houses the specification files that contain the hyperparameters used by TAO to perform training, inference, evaluation, and model deployment.

- HOST_RESULTS_DIR contains the results of the fine-tuned OCD and OCR models.

- PRE_DATA_DIR is where the downloaded handwritten dataset files will be located. This path will be called to preprocess the data for OCD/OCR model training.

TAO Launcher uses Docker containers when running tasks. For data and results to be visible to Docker, map the location of our local folders to the Docker container using the ~/.tao_mounts.json file. Run the cell in the Jupyter notebook to generate the ~/.tao_mounts.json file.
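The notebook cell takes care of this for you, but as a rough sketch of what such a file contains, the snippet below writes a mounts file with a `Mounts` list of `source`/`destination` pairs. The helper name and the container-side paths in the usage note are illustrative assumptions, not the notebook's actual cell.

```python
import json
import os


def write_tao_mounts(mounts, path="~/.tao_mounts.json"):
    """Write a TAO Launcher mounts file.

    mounts: dict mapping a local directory to the path it should have
    inside the container (hypothetical helper, for illustration).
    Returns the configuration that was written.
    """
    config = {
        "Mounts": [
            {
                "source": os.path.abspath(os.path.expanduser(source)),
                "destination": destination,
            }
            for source, destination in mounts.items()
        ]
    }
    with open(os.path.expanduser(path), "w") as f:
        json.dump(config, f, indent=4)
    return config
```

A call such as `write_tao_mounts({os.environ["HOST_DATA_DIR"]: "/data", os.environ["HOST_SPECS_DIR"]: "/specs", os.environ["HOST_RESULTS_DIR"]: "/results"})` would map the three host folders into the container; the destination names here are assumed for the example.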

The environment is now ready for use with the TAO Launcher. The next steps will prepare the handwritten dataset to be in the correct format for TAO OCD model training.

Prepare the dataset for OCD and OCR

Preprocess the IAM handwritten dataset to match the TAO image format following the steps below. Note that in the folder structure for OCD and OCR model training in TAO, /img houses the handwritten image data, and /gt contains ground truth labels of the characters found in each image.

|── train
| ├──img
| ├──gt
|── test
| ├──img
| ├──gt

Begin by moving the four downloaded .tgz files to the location of your $PRE_DATA_DIR directory. If you are following the same steps as above, the .tgz files will be placed in /data/iamdata.

Extract the images and ground truth labels from these files. The subsequent cells will extract the image files and move them to the proper folder format when run.

!tar -xf $PRE_DATA_DIR/ascii.tgz --directory $PRE_DATA_DIR/ words.txt

# Create directories to hold the image data and ground truth files.

!mkdir -p $PRE_DATA_DIR/train/img

!mkdir -p $PRE_DATA_DIR/test/img

!mkdir -p $PRE_DATA_DIR/train/gt

!mkdir -p $PRE_DATA_DIR/test/gt

# Unpack the images, let's use the first two groups of images for training, and the last for validation.

!tar -xzf $PRE_DATA_DIR/formsA-D.tgz --directory $PRE_DATA_DIR/train/img

!tar -xzf $PRE_DATA_DIR/formsE-H.tgz --directory $PRE_DATA_DIR/train/img

!tar -xzf $PRE_DATA_DIR/formsI-Z.tgz --directory $PRE_DATA_DIR/test/img

The data is now organized correctly. However, the ground truth label used by IAM dataset is currently in the following format:

a01-000u-00-00 ok 154 1 408 768 27 51 AT A

# a01-000u-00-00 -> word id for line 00 in form a01-000u

# ok -> result of word segmentation

# ok: word was correctly

# er: segmentation of word can be bad

#

# 154 -> graylevel to binarize the line containing this word

# 1 -> number of components for this word

# 408 768 27 51 -> bounding box around this word in x,y,w,h format

# AT -> the grammatical tag for this word, see the

# file tagset.txt for an explanation

# A -> the transcription for this word

The words.txt file looks like this:

0 1

0 a01-000u-00-00 ok 154 408 768 27 51 AT A

1 a01-000u-00-01 ok 154 507 766 213 48 NN MOVE

2 a01-000u-00-02 ok 154 796 764 70 50 TO to

...

Currently, words.txt uses a four-point coordinate system for drawing a bounding box around the word in an image. TAO requires the use of an eight-point coordinate system to draw a bounding box around detected text.
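The arithmetic behind this conversion is small enough to sketch directly. The functions below are illustrative only and are not the notebook's own helpers; they assume each data line in words.txt has the layout shown in the excerpt above (word ID, segmentation result, gray level, x, y, w, h, tag, transcription).

```python
def xywh_to_polygon(x, y, w, h):
    """Convert an x, y, width, height box to eight values: the four corners
    in clockwise order starting from the top-left."""
    return [x, y, x + w, y, x + w, y + h, x, y + h]


def parse_words_line(line):
    """Parse one words.txt data line into (gt filename, polygon, word).

    Hypothetical helper: assumes whitespace-separated fields in the order
    id, segmentation result, gray level, x, y, w, h, tag, transcription.
    """
    fields = line.split()
    word_id = fields[0]
    x, y, w, h = (int(value) for value in fields[3:7])
    # The form ID is the first two dash-separated parts, e.g. a01-000u.
    form_id = "-".join(word_id.split("-")[:2])
    word = " ".join(fields[8:])
    return f"gt_{form_id}.txt", xywh_to_polygon(x, y, w, h), word
```

For the first word in the excerpt, the box `408 768 27 51` becomes the corners (408, 768), (435, 768), (435, 819), and (408, 819).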

To convert the data to the eight-point coordinate system, use the extract_columns and process_text_file functions provided in section 2.1 of the notebook. words.txt will be transformed into the following DataFrame and will be ready for fine-tuning on an OCDNet model.

filename x y x2 y2 x3 y3 x4 y4 word

0 gt_a01-000u.txt 408 768 435 768 435 819 408 819 A

1 gt_a01-000u.txt 507 766 720 766 720 814 507 814 MOVE

2 gt_a01-000u.txt 796 764 866 764 866 814 796 814 to

...

To prepare the dataset for OCRNet, the raw image data and labels must be converted to LMDB format, which stores the images and labels in a key-value, memory-mapped database.

# Convert the raw train and test dataset to lmdb
print("Converting the training set to LMDB.")
!tao model ocrnet dataset_convert -e $SPECS_DIR/ocr/experiment.yaml \
    dataset_convert.input_img_dir=$DATA_DIR/train/processed \
    dataset_convert.gt_file=$DATA_DIR/train/gt.txt \
    dataset_convert.results_dir=$DATA_DIR/train/lmdb

# Convert the raw test dataset to lmdb
print("Converting the testing set to LMDB.")
!tao model ocrnet dataset_convert -e $SPECS_DIR/ocr/experiment.yaml \
    dataset_convert.input_img_dir=$DATA_DIR/test/processed \
    dataset_convert.gt_file=$DATA_DIR/test/gt.txt \
    dataset_convert.results_dir=$DATA_DIR/test/lmdb

The data is now processed and ready to be fine-tuned on the OCDNet and OCRNet pretrained models.

Create a custom character detection (OCD) model

The NGC CLI will be used to download the pretrained OCDNet model. For more information, visit NGC and click on Setup in the navigation bar.

Download the OCDNet pretrained model

!mkdir -p $HOST_RESULTS_DIR/pretrained_ocdnet/

# Pulls pretrained models from NGC

!ngc registry model download-version nvidia/tao/ocdnet:trainable_resnet18_v1.0 --dest $HOST_RESULTS_DIR/pretrained_ocdnet/

You can check that the model has been downloaded to /pretrained_ocdnet/ using the following call:

print("Check that model is downloaded into dir.")

!ls -l $HOST_RESULTS_DIR/pretrained_ocdnet/ocdnet_vtrainable_resnet18_v1.0

OCD training specification

In the specs folder, you can find different files related to how you want to train, evaluate, infer, and export data for both models. For training OCDNet, you will use the train.yaml file in the specs/ocd folder. You can experiment with changing different hyperparameters, such as number of epochs, in this spec file.

Below is a code example of some of the configs that you can experiment with:

num_gpus: 1
model:
  load_pruned_graph: False
  pruned_graph_path: '/results/prune/pruned_0.1.pth'
  pretrained_model_path: '/data/ocdnet/ocdnet_deformable_resnet18.pth'
  backbone: deformable_resnet18
train:
  results_dir: /results/train
  num_epochs: 300
  checkpoint_interval: 1
  validation_interval: 1
...

Train the character detection model

Now that the specification files are configured, provide the paths to the spec file, the pretrained model, and the results:

#Train using TAO Launcher
#print("Run training with ngc pretrained model.")
!tao model ocdnet train \
    -e $SPECS_DIR/train.yaml \
    -r $RESULTS_DIR/train \
    model.pretrained_model_path=$DATA_DIR/ocdnet_deformable_resnet18.pth

Training output will resemble the following. Note that this step could take some time, depending on the number of epochs specified in train.yaml.

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params

--------------------------------

0 | model | Model | 12.8 M

--------------------------------

12.8 M Trainable params

0 Non-trainable params

12.8 M Total params

51.106 Total estimated model params size (MB)

Training: 0it [00:00, ?it/s]Starting Training Loop.

Epoch 0: 100%|█████████| 751/751 [19:57

Evaluate the model

Next, evaluate the OCDNet model trained on the IAM dataset.

# Evaluate on model
!tao model ocdnet evaluate \
    -e $SPECS_DIR/evaluate.yaml \
    evaluate.checkpoint=$RESULTS_DIR/train/model_best.pth

Evaluation output will look like the following:

test model: 100%|██████████████████████████████| 488/488 [06:44

OCD inference

The inference tool produces annotated image outputs and .txt files that contain prediction information. Run the inference tool below to generate inferences on OCDNet models and visualize the results for detected text.

# Run inference using TAO
!tao model ocdnet inference \
    -e $SPECS_DIR/ocd/inference.yaml \
    inference.checkpoint=$RESULTS_DIR/ocd/train/model_best.pth \
    inference.input_folder=$DATA_DIR/test/img \
    inference.results_dir=$RESULTS_DIR/ocd/inference

Figure 3 shows the OCDNet inference on a test sample image.

Export the OCD model for deployment

The last step is to export the OCD model to ONNX format for deployment.

!tao model ocdnet export \
    -e $SPECS_DIR/export.yaml \
    export.checkpoint=$RESULTS_DIR/train/model_best.pth \
    export.onnx_file=$RESULTS_DIR/export/model_best.onnx

Create a custom character recognition (OCR) model

Now that you have the trained OCDNet model to detect and apply bounding boxes to areas of handwritten text, use TAO to fine-tune the OCRNet model to recognize and classify the detected letters.

Download the OCRNet pretrained model

Continuing in the Jupyter notebook, the OCRNet pretrained model will be pulled from NGC CLI.

!mkdir -p $HOST_RESULTS_DIR/pretrained_ocrnet/

# Pull pretrained model from NGC

!ngc registry model download-version nvidia/tao/ocrnet:trainable_v1.0 --dest $HOST_RESULTS_DIR/pretrained_ocrnet

OCR training specification

OCRNet will use the experiment.yaml spec file to perform training. You can change training hyperparameters such as batch size, number of epochs, and learning rate shown below:

dataset:
  train_dataset_dir: []
  val_dataset_dir: /data/test/lmdb
  character_list_file: /data/character_list
  max_label_length: 25
  batch_size: 32
  workers: 4
train:
  seed: 1111
  gpu_ids: [0]
  optim:
    name: "adadelta"
    lr: 0.1
  clip_grad_norm: 5.0
  num_epochs: 10
  checkpoint_interval: 2
  validation_interval: 1

Train the character recognition model

Train the OCRNet model on the dataset. You can also configure spec parameters like the number of epochs or learning rate within the train command, shown below.

!tao model ocrnet train -e $SPECS_DIR/ocr/experiment.yaml \
    train.results_dir=$RESULTS_DIR/ocr/train \
    train.pretrained_model_path=$RESULTS_DIR/pretrained_ocrnet/ocrnet_vtrainable_v1.0/ocrnet_resnet50.pth \
    train.num_epochs=20 \
    train.optim.lr=1.0 \
    dataset.train_dataset_dir=[$DATA_DIR/train/lmdb] \
    dataset.val_dataset_dir=$DATA_DIR/test/lmdb \
    dataset.character_list_file=$DATA_DIR/train/character_list.txt

The output will resemble the following:

...

Epoch 19: 100%|█| 3605/3605 [08:04

Evaluate the model

You can evaluate the OCRNet model based on the accuracy of its character recognition. Recognition accuracy simply means a percentage of all the characters in a text area that were recognized correctly.
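As an illustration of this definition, the sketch below counts how many ground-truth characters are matched by the prediction, using Python's standard `difflib` alignment. This is one possible reading of the metric and an assumption on our part; the accuracy that TAO reports may be computed differently.

```python
from difflib import SequenceMatcher


def character_accuracy(predictions, ground_truths):
    """Percentage of ground-truth characters matched by the predictions.

    Characters are aligned with difflib's longest-matching-block heuristic,
    which is an illustrative choice, not TAO's documented method.
    """
    matched = 0
    total = 0
    for predicted, truth in zip(predictions, ground_truths):
        matcher = SequenceMatcher(None, predicted, truth, autojunk=False)
        matched += sum(block.size for block in matcher.get_matching_blocks())
        total += len(truth)
    return 100.0 * matched / total if total else 0.0
```

Under this definition, predicting "boot" for the ground truth "boat" scores 75%, because three of the four characters line up.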

!tao model ocrnet evaluate -e $SPECS_DIR/ocr/experiment.yaml \
    evaluate.results_dir=$RESULTS_DIR/ocr/evaluate \
    evaluate.checkpoint=$RESULTS_DIR/ocr/train/best_accuracy.pth \
    evaluate.test_dataset_dir=$DATA_DIR/test/lmdb \
    dataset.character_list_file=$DATA_DIR/train/character_list.txt

The evaluation output should appear similar to the following:

data directory: /data/iamdata/test/lmdb num samples: 37109

Accuracy: 77.8%

OCR inference

Inference on OCR will produce a sequence output of recognized characters from the bounding boxes, shown below.

!tao model ocrnet inference -e $SPECS_DIR/ocr/experiment.yaml \
    inference.results_dir=$RESULTS_DIR/ocr/inference \
    inference.checkpoint=$RESULTS_DIR/ocr/train/best_accuracy.pth \
    inference.inference_dataset_dir=$DATA_DIR/test/processed \
    dataset.character_list_file=$DATA_DIR/train/character_list.txt

+--------------------------------------+--------------------+--------------------+

| image_path | predicted_labels | confidence score |

|--------------------------------------+--------------------+--------------------|

| /data/test/processed/l04-012_28.jpg | lelly | 0.3799 |

| /data/test/processed/k04-068_26.jpg | not | 0.9644 |

| /data/test/processed/l04-062_58.jpg | set | 0.9542 |

| /data/test/processed/l07-176_39.jpg | boat | 0.4693 |

| /data/test/processed/k04-039_39.jpg | . | 0.9286 |

+--------------------------------------+--------------------+--------------------+

Export OCR model for deployment

Finally, export the OCR model to ONNX format for deployment.

!tao model ocrnet export -e $SPECS_DIR/ocr/experiment.yaml \
    export.results_dir=$RESULTS_DIR/ocr/export \
    export.checkpoint=$RESULTS_DIR/ocr/train/best_accuracy.pth \
    export.onnx_file=$RESULTS_DIR/ocr/export/ocrnet.onnx \
    dataset.character_list_file=$DATA_DIR/train/character_list.txt

Results

Table 1 highlights the accuracy and performance of the two models featured in this post. The character detection model is fine-tuned from the OCDNet model pretrained on ICDAR, and the character recognition model is fine-tuned from the OCRNet model pretrained on Uber-text. ICDAR and Uber-text are publicly available datasets that we used to pretrain the OCDNet and OCRNet models, respectively. Both models are available on NGC.

| | OCDNet | OCRNet |
|---|---|---|
| Dataset | IAM Handwritten Dataset | IAM Handwritten Dataset |
| Backbone | Deformable Conv ResNet18 | ResNet50 |
| Accuracy | 90% | 78% |
| Inference resolution | 1024×1024 | 1×32×100 |
| Inference performance (FPS) on NVIDIA L4 GPU | 125 FPS (BS=1) | 8030 FPS (BS=128) |

Summary

This post explains the end-to-end workflow for creating custom character detection and recognition models in NVIDIA TAO. You can start with a pretrained model for character detection (OCDNet) and character recognition (OCRNet) from NGC. Then fine-tune it on your custom dataset using TAO and export the model for inference.

Continue reading Part 2 for a step-by-step walkthrough on deploying this model into production using NVIDIA Triton.

NVIDIA Triton Inference Server streamlines and standardizes AI inference by enabling teams to deploy, run, and scale trained ML or DL models from any framework on any GPU- or CPU-based infrastructure. It helps developers deliver high-performance inference across cloud, on-premises, edge, and embedded devices.

The nvOCDR library is integrated into Triton for inference. The nvOCDR library wraps the entire inference pipeline for optical character detection and recognition (OCD/OCR). This library consumes OCDNet and OCRNet models that are trained on TAO Toolkit. For more details, refer to the nvOCDR documentation.

This post is part of a series on using NVIDIA TAO and pretrained models to create and deploy custom AI models to accurately detect and recognize handwritten texts. Part 1 explains the training and fine-tuning of character detection and recognition models using TAO. This part walks you through the steps to deploy the model using NVIDIA Triton. The steps presented can be used with any other OCR tasks.

Build the Triton sample with OCD/OCR models

The following steps show the simple and recommended way to build and use OCD/OCR models in Triton Inference Server with Docker images.

Step 1: Prepare the ONNX models

After you follow ocdnet.ipynb and ocrnet.ipynb to finish model training and export, you have two ONNX models, referred to here as ocdnet.onnx and ocrnet.onnx. (In ocdnet.ipynb, the exported ONNX file is named model_best.onnx. In ocrnet.ipynb, the exported ONNX file is named best_accuracy.onnx.)

# bash commands

$ mkdir onnx_models

$ cd onnx_models

$ cp /export/model_best.onnx ./ocdnet.onnx

$ cp /export/best_accuracy.onnx ./ocrnet.onnx
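The copy-and-rename step above can also be scripted. The following is a minimal Python sketch, not part of the nvOCDR tooling: the function name `stage_models` and its parameters are hypothetical, and the two export directories must be supplied by you because they depend on where the notebooks wrote their results.

```python
import shutil
from pathlib import Path


def stage_models(ocd_export_dir, ocr_export_dir, dest_dir="onnx_models"):
    """Copy the exported ONNX files into dest_dir under the names used in this post.

    ocd_export_dir / ocr_export_dir are the export folders of the two notebooks
    (hypothetical parameters; pass whatever paths your training runs produced).
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)

    # Source file names come from the notebooks; target names match the post.
    renames = {
        Path(ocd_export_dir) / "model_best.onnx": dest / "ocdnet.onnx",
        Path(ocr_export_dir) / "best_accuracy.onnx": dest / "ocrnet.onnx",
    }
    for src, dst in renames.items():
        if not src.is_file():
            raise FileNotFoundError(f"expected exported model at {src}")
        shutil.copyfile(src, dst)

    # Return the staged file names so the caller can confirm the result.
    return sorted(p.name for p in dest.iterdir())
```

Failing early on a missing export file avoids discovering the problem later, during the Docker image build.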

The character list file, generated in ocrnet.ipynb, is also needed:

$ cp /character_list ./

Step 2: Get the nvOCDR repository

To get the nvOCDR repository, run the following command:

$ git clone https://github.com/NVIDIA-AI-IOT/NVIDIA-Optical-Character-Detection-and-Recognition-Solution.git

Step 3: Build the Triton server Docker image

The repository provides scripts that build the Triton server and client Docker images automatically. To build the server image, run:

$ cd NVIDIA-Optical-Character-Detection-and-Recognition-Solution/triton

# bash setup_triton_server.sh [input image height] [input image width] [OCD input max batchsize] [DEVICE] [ocd onnx path] [ocr onnx path] [ocr character list path]

$ bash setup_triton_server.sh 1024 1024 4 0 ~/onnx_models/ocdnet.onnx ~/onnx_models/ocrnet.onnx ~/onnx_models/character_list

Step 4: Build the Triton client Docker image

Use the following script to build the Triton client Docker image:

$ cd NVIDIA-Optical-Character-Detection-and-Recognition-Solution/triton

$ bash setup_triton_client.sh

Step 5: Run nvOCDR Triton server

After building the Triton server and Triton client Docker images, create a container and launch the Triton server:

$ docker run -it --net=host --gpus all --shm-size 8g nvcr.io/nvidian/tao/nvocdr_triton_server:v1.0 bash

Next, modify the configuration file of the nvOCDR library. The library supports high-resolution input images (4000 x 4000 or larger). If your input images are large, edit the configuration file /opt/nvocdr/ocdr/triton/models/nvOCDR/spec.json in the Triton server container to enable high-resolution inference.

# to support high resolution images

$ vim /opt/nvocdr/ocdr/triton/models/nvOCDR/spec.json

"is_high_resolution_input": true,

"resize_keep_aspect_ratio": true,

The resize_keep_aspect_ratio option is set to true automatically when is_high_resolution_input is true. If you plan to run inference on lower-resolution images (640 x 640 or 960 x 1280, for example), set is_high_resolution_input to false.
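If you prefer not to edit the file by hand, the same change can be made programmatically. This is a minimal sketch under the assumption that spec.json is plain JSON and that the two options are top-level keys, as the excerpt above suggests; the helper name `set_high_resolution` is hypothetical and not part of nvOCDR.

```python
import json
from pathlib import Path


def set_high_resolution(spec_path, enabled=True):
    """Toggle high-resolution inference in an nvOCDR spec.json.

    Assumes the file is plain JSON with top-level keys (an assumption based on
    the two entries shown in the post). All other keys are left untouched.
    """
    path = Path(spec_path)
    spec = json.loads(path.read_text())

    spec["is_high_resolution_input"] = enabled
    if enabled:
        # The post says this is forced to true for high-resolution input;
        # writing it explicitly keeps the file self-describing.
        spec["resize_keep_aspect_ratio"] = True

    path.write_text(json.dumps(spec, indent=4) + "\n")
    return spec
```

Inside the server container, this would be called as `set_high_resolution("/opt/nvocdr/ocdr/triton/models/nvOCDR/spec.json")` before launching Triton.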

In the container, run the following command to launch the Triton server:

$ CUDA_VISIBLE_DEVICES= tritonserver --model-repository /opt/nvocdr/ocdr/triton/models/
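Before sending requests, it can help to confirm that the server has finished loading the models. The sketch below polls Triton's standard HTTP readiness endpoint (`/v2/health/ready`). It assumes the default HTTP port 8000 and that the server is reachable on localhost, which should hold with `--net=host` and the launch command above; the helper names are hypothetical.

```python
import time
import urllib.error
import urllib.request


def ready_url(host="localhost", port=8000):
    """Build the readiness URL (port 8000 is Triton's default HTTP port)."""
    return f"http://{host}:{port}/v2/health/ready"


def is_server_ready(url, timeout=2.0):
    """Return True if the endpoint answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or a non-2xx status: not ready yet.
        return False


def wait_until_ready(url, attempts=30, delay=1.0):
    """Poll the readiness endpoint until it succeeds or attempts run out."""
    for _ in range(attempts):
        if is_server_ready(url):
            return True
        time.sleep(delay)
    return False
```

Calling `wait_until_ready(ready_url())` from the client side returns `True` once the server reports ready, or `False` if it does not come up within the polling window.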

Step 6: Send an inference request

In a separate console, launch the nvOCDR example from the Triton client container:

$ docker run -it --rm -v : --net=host nvcr.io/nvidian/tao/nvocdr_triton_client:v1.0 bash

Launch the inference:

$ python3 client.py -d -bs 1

Conclusion

NVIDIA TAO 5.0 introduced several features and models for optical character detection (OCD) and optical character recognition (OCR). This post walks through the steps to customize and fine-tune the pretrained models to accurately recognize handwritten text on the IAM dataset. The resulting models achieve 90% accuracy for character detection and about 80% for character recognition. All the steps mentioned in the post can be run from the provided Jupyter notebooks, making it easy to create custom AI models with minimal coding.

Release: NVIDIA DeepStream SDK version 6.3

Explore the latest streaming analytics features and advancements with this new release.

Next-generation AI pipelines have shown incredible success in generating high-fidelity 3D models, ranging from reconstructions that produce a scene matching given images to generative AI pipelines that produce assets for interactive experiences.

These generated 3D models are often extracted as standard triangle meshes. Mesh representations offer many benefits, including support in existing software packages, advanced hardware acceleration, and support for physics simulation. However, not all meshes are equal, and these benefits are only realized on a high-quality mesh.

Recent NVIDIA research discovered a new approach called FlexiCubes for generating high-quality meshes in 3D pipelines, improving quality across a range of applications.

FlexiCubes mesh generation

The common ingredient across AI pipelines from reconstruction to simulation is that meshes are generated from an optimization process. At each step of the process, the representation is updated to match the desired output better.

The new idea of FlexiCubes mesh generation is to introduce additional, flexible parameters that precisely adjust the generated mesh. By updating these parameters during optimization, mesh quality is greatly improved.

Those familiar with mesh-based pipelines might have used marching cubes in the past to extract meshes. FlexiCubes can be used as a drop-in replacement for marching cubes in optimization-based AI pipelines.
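To make the idea of extra, optimizable parameters concrete, here is a one-dimensional toy in plain Python. It is only an illustration and not the FlexiCubes formulation: the weights `a0` and `a1`, the function names, and the loss are assumptions made for this sketch. A marching-cubes-style extractor places a surface vertex on a grid edge by linearly interpolating the signed distance values at the two endpoints. Adding a positive weight per endpoint lets an optimizer slide that vertex along the edge without changing the signed distance values themselves.

```python
def crossing(s0, s1, a0=1.0, a1=1.0, x0=0.0, x1=1.0):
    """Surface crossing on the edge [x0, x1] given signed distances s0, s1.

    With a0 == a1 this is ordinary linear interpolation; the weights are
    toy stand-ins for additional flexible parameters.
    """
    return (a0 * s0 * x1 - a1 * s1 * x0) / (a0 * s0 - a1 * s1)


def fit_weights(s0, s1, target, steps=200, lr=1.0, eps=1e-6):
    """Gradient descent on the two weights so the crossing moves to target."""
    a0, a1 = 1.0, 1.0

    def loss(p, q):
        return (crossing(s0, s1, p, q) - target) ** 2

    for _ in range(steps):
        # Central finite differences stand in for automatic differentiation.
        g0 = (loss(a0 + eps, a1) - loss(a0 - eps, a1)) / (2 * eps)
        g1 = (loss(a0, a1 + eps) - loss(a0, a1 - eps)) / (2 * eps)
        a0, a1 = a0 - lr * g0, a1 - lr * g1
    return a0, a1


# Signed distances -1 (inside) and +1 (outside) put the unweighted crossing
# at the edge midpoint; the optimizer then moves it toward 0.8.
a0, a1 = fit_weights(-1.0, 1.0, target=0.8)
print(crossing(-1.0, 1.0), crossing(-1.0, 1.0, a0, a1))
```

In a real pipeline the loss would come from rendering or reconstruction error rather than a known target position, and gradients would come from automatic differentiation.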

FlexiCubes generates high-quality meshes from neural workflows like photogrammetry and generative AI.

Better meshes, better AI

FlexiCubes mesh extraction improves the results of many recent 3D mesh generation pipelines, producing higher-quality meshes that do a better job at representing fine details in complex shapes.

The generated meshes are also well-suited for physics simulation, where mesh quality is especially important to make simulations efficient and robust. The tetrahedral meshes are ready to use in out-of-the-box physics simulations.

Explore FlexiCubes now

This research is being presented as part of NVIDIA advancements at SIGGRAPH 2023 in Los Angeles. For more information about the new approach, see Flexible Isosurface Extraction for Gradient-Based Mesh Optimization. Explore more results on the FlexiCubes project page.